As AI continues to transform industries at an exponential pace, large enterprises are facing a critical decision: Should they opt for off-the-shelf LLMs or invest in custom-built solutions? The rapid growth of artificial intelligence offers companies the opportunity to streamline operations, enhance customer experiences, and unlock new insights. Adoption is already widespread, with about 67% of organizations worldwide using generative AI powered by LLMs to improve operations and processes across industries.

Off-the-shelf models offer speed and cost-effectiveness, but they often fall short on specific, industry-related challenges and on keeping proprietary data private and under your control. Custom LLMs, on the other hand, are built to meet the unique needs of each business, providing more flexibility and control but requiring a larger investment of time and resources.

At Intellivon, we specialize in guiding enterprises through this decision-making process. Our hands-on expertise in custom LLM development empowers businesses to choose the AI solution best suited to their long-term goals. With a focus on scalability, data privacy, and future-proofing, we help enterprises make data-driven decisions that support both immediate needs and future growth. In this blog, we will walk you through which type of LLM to pick for your enterprise needs and how we build and deploy custom LLMs from scratch.

Understanding the Need for LLMs in Modern Enterprises

According to a report by Research and Markets, the LLM market was valued at $5.72 billion in late 2024 and could grow to over $123 billion by 2034, a compound annual growth rate of around 36%. LLMs are spreading into almost every area of business operations, and that reach is expected to keep expanding, which makes adoption a strategic priority for large enterprises.
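The two market-size figures and the growth rate quoted above are consistent with each other. As a quick check, here is a minimal Python sketch that derives the implied compound annual growth rate (CAGR) from the $5.72 billion (2024) and $123 billion (2034) values, then compounds forward at a flat 36%. The function names are our own and are for illustration only.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Grow start_value at a fixed annual rate for the given number of years."""
    return start_value * (1 + rate) ** years


# Market sizes in USD billions, taken from the figures quoted above.
rate = implied_cagr(5.72, 123.0, 10)
print(f"Implied CAGR, 2024-2034: {rate:.1%}")
print(f"2034 value at a flat 36%: ${project(5.72, 0.36, 10):.1f}B")
```

The implied rate comes out just under 36%, and compounding $5.72 billion at 36% for ten years lands slightly above $123 billion, so the report's three numbers line up.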

Key Market Takeaways:

- About 67% of businesses worldwide are using large language models (LLMs) or generative AI to improve their operations.

- 72% of companies plan to increase their investment in LLMs this year, showing a strong commitment to the technology.

- Nearly 40% of companies are already spending over $250,000 annually on LLM adoption.

- By 2026, more than 80% of companies are expected to use generative AI or related APIs, a huge jump from just 5% in 2023.

- Over 70% of global businesses are using AI in at least one part of their operations, with generative AI playing a key role in areas like marketing, sales, IT, and customer service.

- Google’s LLMs are used by 69% of companies, while OpenAI’s models are used by 55%, according to recent surveys.

The Role of LLMs in Enterprise AI

As businesses adopt AI, LLMs are becoming essential tools for innovation and efficiency. These models can understand and generate human-like text, making them powerful for various enterprise functions. From automating customer support to analyzing data, LLMs help businesses improve processes and scale operations. As AI adoption grows, LLMs are becoming key to staying competitive.

1. Automating Customer Support

LLMs are transforming customer support by automating responses and offering real-time assistance. AI-driven chatbots and virtual assistants can handle both simple and complex customer queries, reducing the workload on human agents. For example, Salesforce uses LLM-powered chatbots to manage customer interactions, speeding up response times and boosting customer satisfaction.

2. Enhancing Data Analysis and Insights

LLMs can analyze massive amounts of unstructured data, such as reports and social media posts, to provide valuable insights. For example, a retail company can use LLMs to analyze customer feedback and identify emerging product trends. This helps businesses make quicker, more informed decisions and improves their data-driven strategies.

3. Scaling Operations Efficiently with LLMs

LLMs help businesses scale by automating tasks that traditionally require human effort. For instance, large enterprises can use LLMs to automate data entry, organize documents, and manage internal communications. This leads to fewer errors and increased productivity, making it easier to scale operations without significantly raising costs.

4. LLMs in Predictive Analytics

LLMs enhance decision-making by analyzing past data and providing predictions based on identified patterns. For example, financial institutions use LLMs for fraud detection, identifying suspicious activity by analyzing transaction trends. In healthcare, LLMs can predict patient outcomes, allowing providers to optimize treatment plans and improve care.

Understanding Off-the-Shelf LLMs

Off-the-shelf LLMs are pre-trained, general-purpose AI models like GPT, BERT, and Llama. These models are widely used because they are readily available and have been trained on massive amounts of data.

They can generate human-like text, making them suitable for many basic tasks, such as chatbots, content generation, and customer support automation. Their popularity comes from their quick deployment and the ability to handle general use cases without needing specialized training.

Advantages of Off-The-Shelf LLMs

1. Quick Deployment:

Off-the-shelf LLMs are designed to be ready to use out of the box. Businesses can deploy them with minimal setup time and start benefiting from AI almost immediately.

2. Cost-Efficiency:

Compared to building a custom model from scratch, using an off-the-shelf LLM is more affordable. These models offer a low-cost entry point for businesses experimenting with AI, without requiring a large upfront investment.

3. Proven Technology:

Since these models have been used by countless organizations and tested in various scenarios, they are reliable for standard applications. Many well-documented resources and communities exist to help enterprises integrate and troubleshoot these tools.

Limitations of Off-the-Shelf LLMs for Enterprises

1. Lack of Customization:

While off-the-shelf models are powerful, they are general-purpose and not tailored to specific industries or business needs. For example, a legal firm using a general LLM might face challenges with context-specific language or regulations, leading to inaccurate responses. This can result in higher error rates and increased reliance on human oversight to correct mistakes.

2. Data Privacy and Security Concerns:

Using third-party APIs for these models means sending sensitive, proprietary data to external providers. This can create significant security risks, especially if the provider doesn’t meet your organization’s privacy standards. For organizations subject to strict data protection rules, such as the EU’s GDPR, HIPAA in U.S. healthcare, or California’s CCPA, this can lead to compliance gaps and expose your business to legal risk.

3. Scalability Issues:

As your business grows, so do its needs. Off-the-shelf models can become bottlenecks because they are not designed for the specific, evolving demands of large enterprises. Whether it’s adapting to new products, handling complex data, or scaling up, these models often struggle to keep pace.

4. Vendor Lock-in and Lack of Control:

By relying on third-party providers, you become dependent on their product updates, pricing models, and API changes. This vendor lock-in can limit your flexibility and strategic autonomy. If a vendor changes its pricing or decides to discontinue a feature, your business may be forced to adjust to these changes or find an alternative, disrupting your operations.

Custom LLMs: The Strategic Choice for Enterprise-Scale Needs

Custom LLMs are AI models that are built or fine-tuned specifically for an enterprise using its own proprietary data. Unlike off-the-shelf solutions, custom LLMs are tailored to solve unique business challenges, understanding the organization’s industry, workflows, and specialized language.

These models are trained to meet the exact needs of a business, providing a more effective and personalized solution for tasks such as customer support, data analysis, and automation.

Advantages of Custom LLMs

1. Complete Control Over Data:

When you opt for a custom LLM, development and deployment can take place entirely within your secure environment, so your sensitive data stays private and under your control. This also makes compliance with crucial regulations like GDPR, HIPAA, and CCPA easier to achieve and demonstrate than it is with off-the-shelf models.

With a custom LLM, you don’t have to worry about sending proprietary data to third-party providers, reducing the risk of data breaches and legal issues.

2. Tailored Features for Competitive Advantage:

A custom LLM is designed to understand your business’s unique needs. It learns your proprietary terminology, workflows, and specific challenges, giving you a competitive edge.

For instance, if you’re in the legal or healthcare field, a custom LLM can be fine-tuned to understand industry-specific language and regulations, something generic models struggle with. This allows for more accurate results, fewer errors, and greater efficiency, enabling your enterprise to operate at a higher level than competitors using generic AI solutions.

3. Unparalleled Scalability:

Custom LLMs are built on a robust architecture designed to grow with your business. As your enterprise evolves and your data needs expand, your custom solution can easily adapt to new data sources, products, or user volumes.

Unlike off-the-shelf models that often hit a scalability ceiling, custom LLMs allow your business to maintain performance as you scale, ensuring long-term sustainability and reducing the need for costly upgrades or replacements down the line.

Disadvantages of Custom LLMs

1. Higher Initial Investment:

One of the key drawbacks of custom LLMs is the higher upfront cost. Developing and fine-tuning a model to meet your specific needs requires significant resources, including time, expertise, and computational power.

2. Longer Development Time:

Custom LLMs take more time to build and fine-tune. Depending on the complexity of your business and the amount of data needed to train the model, this process can take weeks or even months. This delay in deployment may not be ideal for enterprises looking for quick results or those in fast-moving industries.

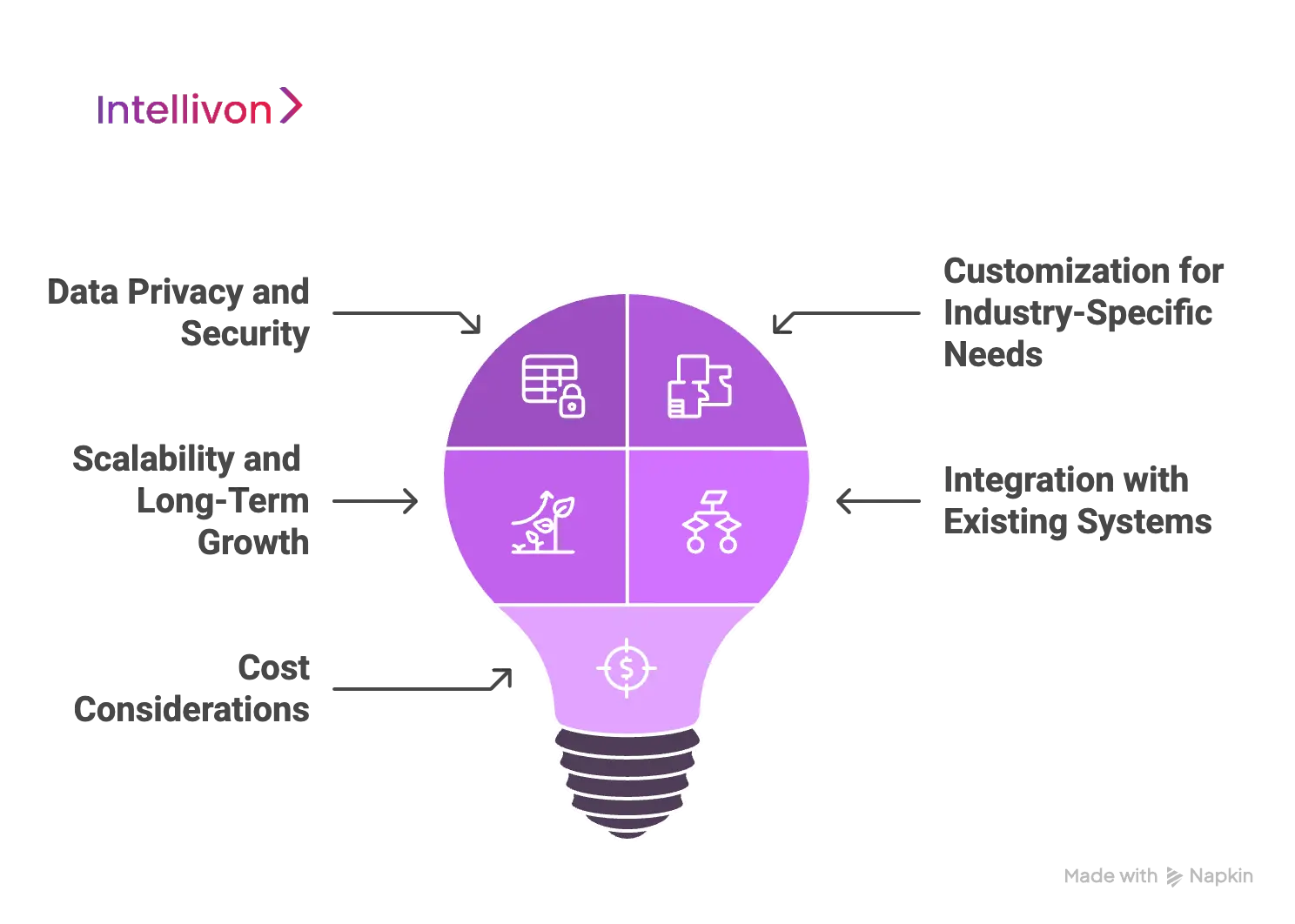

Key Considerations for Enterprise LLM Selection

When choosing between off-the-shelf and custom LLM solutions, enterprises need to consider key factors. These decisions will shape their long-term success, particularly if scaling and data security are priorities.

Custom LLMs are a better fit for large enterprises because they offer flexibility, privacy, and integration capabilities that off-the-shelf models cannot match. Here is why:

1. Data Privacy and Security

For large enterprises, protecting data is crucial. Off-the-shelf LLMs often require data to be processed by third-party servers. This poses a risk for sensitive or proprietary data. Industries like healthcare, finance, and law face strict regulations, such as GDPR, HIPAA, or CCPA.

A custom LLM, however, can be built and run within your secure environment, giving you complete control over your data and making regulatory compliance far easier to achieve.

Example: A bank deploying AI for fraud detection needs to keep customer data private. A custom LLM ensures this data never leaves their secure systems, reducing risk and maintaining compliance.

2. Customization for Industry-Specific Needs

Off-the-shelf LLMs are designed for general tasks. They may struggle with specialized language or unique workflows.

Custom LLMs are built to understand specific business needs. They can be tailored to recognize industry-specific terminology and handle complex tasks unique to your enterprise.

Example: A law firm needs an LLM that understands legal language and can accurately analyze contracts. A custom LLM ensures it works with case-specific data, unlike a general model that might misinterpret legal terms.

3. Scalability and Long-Term Growth

Off-the-shelf models are a quick solution but struggle with growth. As enterprises expand, their needs evolve. Generic LLMs may fail to scale with new data sources or increased demand.

Custom LLMs, however, are designed for scalability. They adapt easily to new data, products, and growing business needs.

Example: A retail company expanding globally will need an LLM capable of handling multiple languages and diverse customer behaviors. A custom LLM can grow with the company, accommodating new languages and increasing customer interactions.

4. Integration with Existing Systems

Large enterprises rely on established platforms. Off-the-shelf LLMs may not integrate well with your existing systems. They often require significant adjustments or may not fit into your current workflows.

On the other hand, custom LLMs are built to integrate seamlessly with your CRM systems, ERP software, and other tools.

Example: A software company might need an LLM integrated with its help desk system for automated customer support. A custom LLM can be designed to work directly with their existing platforms, while an off-the-shelf model may not align perfectly.

5. Cost Considerations

While off-the-shelf LLMs have lower upfront costs, they carry recurring usage-based API fees that grow with volume, and they may require rework whenever the vendor updates its models or pricing.

Custom LLMs come with a higher initial investment but can deliver better long-term value. They reduce dependence on vendor-driven upgrades and are designed to scale, which at sufficient usage volumes can lead to lower operating costs over time and a higher ROI.
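Whether the higher upfront cost pays back depends mostly on usage volume. The minimal Python sketch below compares cumulative costs month by month. Every dollar figure and volume in it is a hypothetical placeholder, not a real vendor price or project quote, and a real comparison would also include staffing, retraining, and hosting overheads.

```python
import math
from typing import Optional


def api_monthly_cost(requests: int, tokens_per_request: int,
                     usd_per_million_tokens: float) -> float:
    """Monthly spend on a usage-priced API."""
    return requests * tokens_per_request / 1_000_000 * usd_per_million_tokens


def breakeven_month(custom_upfront: float, custom_monthly: float,
                    api_monthly: float) -> Optional[int]:
    """First month in which cumulative custom cost is no higher than cumulative
    API cost, or None if the custom option never catches up."""
    monthly_savings = api_monthly - custom_monthly
    if monthly_savings <= 0:
        return None  # the API remains cheaper every month
    return math.ceil(custom_upfront / monthly_savings)


# Illustrative placeholders only: 2M requests/month, 1,500 tokens each, $10 per 1M tokens.
api = api_monthly_cost(2_000_000, 1_500, 10.0)
print(api, breakeven_month(600_000, 20_000, api))  # prints: 30000.0 60
```

With these placeholder inputs, the custom model breaks even in month 60. Higher request volumes shorten that sharply (at double the volume it drops to month 15). If the custom system’s running cost exceeds the API bill, the function returns None because the upfront cost is never recovered.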

Comparison Table: Off-the-Shelf vs. Custom LLM Solutions

| Factor | Off-the-Shelf LLMs | Custom LLMs |
| --- | --- | --- |
| Data Privacy & Security | Data sent to third-party servers, potential risks | Data stays within the enterprise, full control |
| Customization | Limited to general-purpose functionality | Tailored to industry-specific needs and terminology |
| Scalability | Struggles to scale with growing enterprise needs | Built for scalability, adapts as the business grows |
| Integration | Limited integration options | Seamless integration with existing systems and platforms |
| Upfront Investment | Low initial cost | Higher initial cost but better long-term ROI |
| Long-Term Growth | May need replacement or frequent reconfiguration | Can be continuously updated and fine-tuned |
| Accuracy | May lack precision for complex tasks | High accuracy, tailored to business requirements |
| Compliance | May struggle with industry-specific regulations | Designed to meet regulatory and compliance standards |

For large enterprises focused on growth and maintaining control over their proprietary data, custom LLMs offer a clear advantage.

They provide tailored solutions, ensure compliance, and are built to scale as your business expands. Although custom LLMs come with a higher upfront cost, they deliver long-term value by providing flexibility, accuracy, and security that off-the-shelf models simply cannot match.

Real World Use Cases of Custom Enterprise LLMs

LLMs are transforming industries by making processes more efficient, accurate, and scalable. Here are some real-world use cases across different industries showing how enterprises are harnessing the power of LLMs:

1. Healthcare

- Automated Medical Documentation & Transcription: LLMs help transcribe doctor-patient conversations, reducing administrative work. This leads to quicker and more accurate medical record-keeping.

- Virtual Health Assistants: Provide 24/7 support for patients. They handle tasks like answering questions, scheduling appointments, and guiding patients, which reduces staff workload.

- Predictive Analytics for Patient Outcomes: LLMs analyze patient data to spot risks and suggest early interventions. This improves both care quality and operational efficiency.

Example: Nuance Communications developed Dragon Medical, an AI-powered transcription tool. This tool uses LLMs to transcribe doctor-patient conversations in real time. It saves time for doctors and improves the accuracy of medical records.

2. Retail and E-commerce

- Personalized Product Recommendations: LLMs analyze customer behavior and offer personalized product suggestions, boosting sales.

- Customer Service Chatbots: Handle routine inquiries like order tracking and returns. This improves response times and reduces the need for human support.

- Inventory Management and Demand Forecasting: Predict product demand to optimize stock levels, cutting down on waste and stockouts.

Example: Amazon uses LLMs to recommend products to customers. By analyzing browsing and purchase data, the system suggests relevant items, which leads to higher conversion rates and sales.

3. Manufacturing and Industrial

- Proposal and RFP Automation: LLMs automate the creation of sales proposals and technical documents, reducing development time and increasing the chances of winning bids.

- Technical Documentation Generation: Automatically create and update manuals, compliance documents, and more.

Example: Siemens uses LLMs to automate the generation of sales proposals. This has cut proposal development time by 45%, helping Siemens respond faster to RFPs and win more business.

4. B2B Distribution and Supply Chain

- Customer Support Automation: LLM-powered chatbots reduce customer service ticket volumes, improving resolution times and boosting satisfaction scores.

- Supply Chain Intelligence: LLMs help forecast demand, manage inventory, and optimize logistics, improving overall supply chain efficiency.

Example: Walmart uses LLMs to forecast demand and optimize stock levels. This helps ensure products are available when needed while reducing waste in the supply chain.

5. Financial Services and Fintech

- Fraud Detection and Transaction Monitoring: LLMs analyze transaction data in real time, flagging high-risk transactions and reducing manual monitoring.

- Compliance Reporting: LLMs automate the creation of regulatory documents, streamlining compliance processes.

Example: JP Morgan Chase uses LLMs for fraud detection. The AI system analyzes transaction data in real time, reducing manual effort by 60% and detecting anomalies 82% faster than manual processes.

6. Legal and Professional Services

- Document Summarization: LLMs quickly summarize lengthy legal documents, making it easier for professionals to review and analyze case files.

- Research and Due Diligence: LLMs extract key insights from large volumes of legal text, saving time on legal research.

Example: ROSS Intelligence built an AI-powered legal research platform that helped law firms analyze case files and identify relevant precedents more quickly, improving research efficiency and saving valuable time.

These real-world examples showcase how LLMs are transforming industries by improving efficiency, enabling better decision-making, and enhancing customer experiences. Whether it’s healthcare, retail, manufacturing, or financial services, LLMs are driving meaningful change and creating substantial value for businesses.

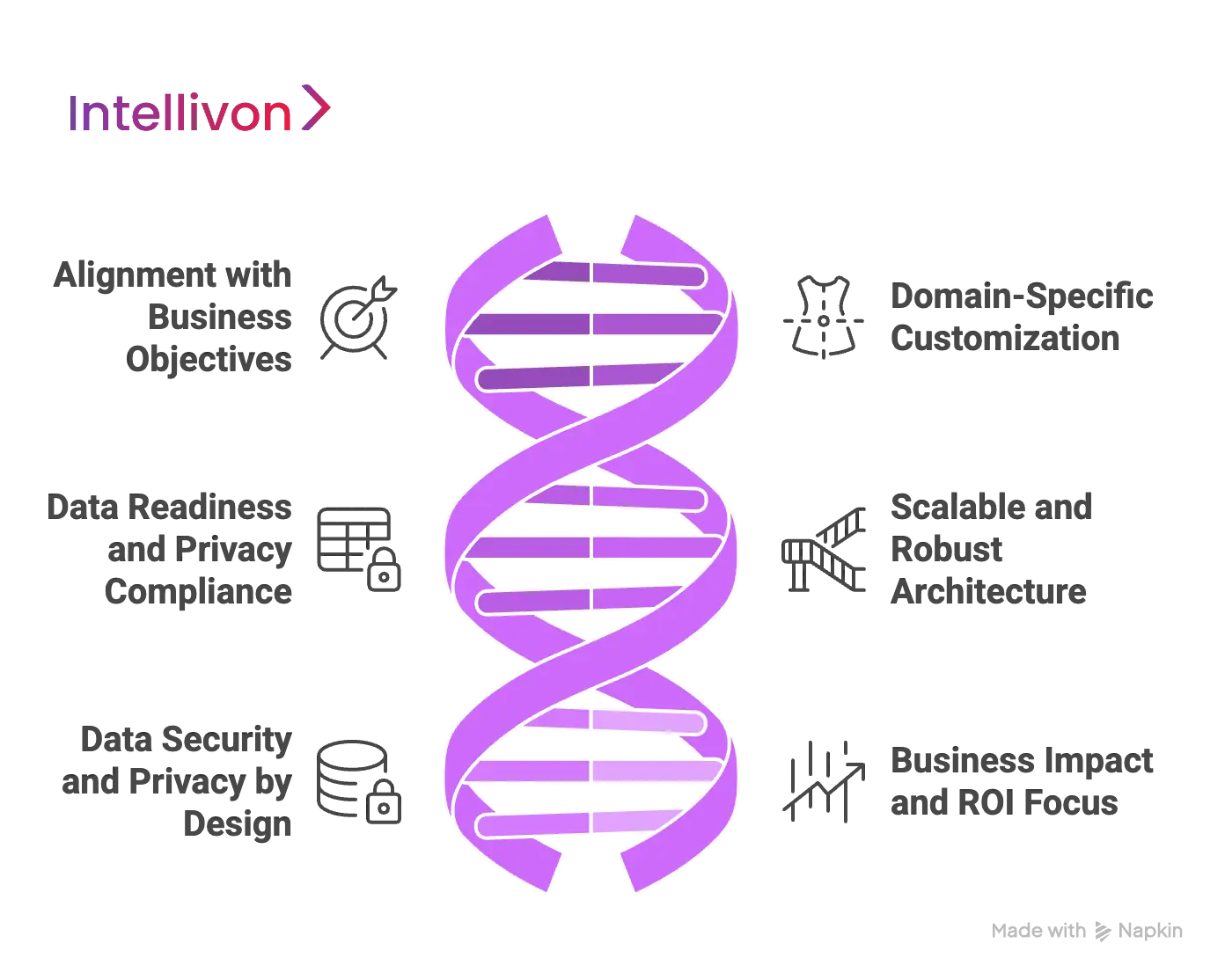

Core Pillars of Our Custom LLM Development for Enterprises

At Intellivon, we approach custom LLM development with a strategic focus on aligning technology with your business objectives. Our goal is to create AI solutions that not only solve your immediate needs but also scale as your business grows. Here’s how we make sure every custom LLM solution we build is a perfect fit for your enterprise.

1. Alignment with Business Objectives

We start by gaining a deep understanding of your enterprise’s strategic goals. Our custom LLM solutions are developed to drive key outcomes that matter most to your business.

Whether it’s improving operational efficiency, enhancing customer experience, or accelerating revenue growth, we align every development initiative with measurable business impact.

2. Domain-Specific Customization

Unlike off-the-shelf LLMs that offer general-purpose capabilities, Intellivon’s custom LLMs are fine-tuned using your enterprise’s proprietary data, industry-specific terminology, and unique workflows.

This level of domain-specific customization allows the model to deliver highly accurate, context-aware results that address your challenges head-on. Whether you’re in finance, healthcare, manufacturing, or any other industry, our custom LLMs are built to handle the specific nuances of your business.

3. Data Readiness and Privacy Compliance

Data quality and privacy are critical in any AI development process. At Intellivon, we go beyond simply collecting data. We rigorously prepare and curate high-quality, trustworthy datasets, ensuring they meet the needs of your AI project while adhering to all relevant privacy regulations like GDPR, HIPAA, and CCPA.

Our approach guarantees that data protection is baked into every phase of development, from data collection to deployment. We take every step to ensure your data is handled securely through practices such as data encryption, anonymization, and secure handling.
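Anonymization can take several forms. One common building block is keyed pseudonymization, which replaces identifiers with stable tokens so records can still be joined without exposing the raw values. The Python sketch below is an illustrative assumption, not our production pipeline: it handles email addresses only and uses an HMAC-SHA256 token. Note that under GDPR, pseudonymized data is still personal data, so this technique complements the other controls described here instead of replacing them.

```python
import hashlib
import hmac
import re

# Simplified email pattern for illustration; real PII detection covers far more types.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def pseudonymize(value: str, key: bytes) -> str:
    """Replace a value with a stable keyed token (same input and key -> same token)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"<PII:{digest[:12]}>"


def scrub_emails(text: str, key: bytes) -> str:
    """Swap every email address in the text for its pseudonymous token."""
    return EMAIL_RE.sub(lambda m: pseudonymize(m.group(0), key), text)


# In practice the key would come from a key-management service, never from source code.
key = b"demo-key-do-not-use"
record = "Refund requested by jane.doe@example.com on 2024-05-01."
print(scrub_emails(record, key))
```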

4. Scalable and Robust Architecture

Our LLM solutions are built on scalable infrastructure that can evolve with your business. Whether your organization opts for cloud, on-premises, or a hybrid setup, we design our models to use compute resources efficiently and scale seamlessly.

We apply optimization techniques such as quantization (storing model weights at lower numeric precision so the model runs faster and uses less memory), distributed training, and container orchestration to keep your LLM solution’s performance reliable from training through production.
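To make quantization concrete, here is a minimal Python sketch of symmetric per-tensor int8 quantization, one of several schemes in use. Each weight is stored as an 8-bit integer plus one shared scale factor, which cuts storage roughly fourfold compared with 32-bit floats at the cost of a small rounding error. Production LLM stacks use optimized library kernels and often finer-grained (per-channel or per-group) scales, and the tiny weight vector here is made up.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.55]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

The rounding error per weight is at most half the scale factor. Because the scale is set by the largest weight, a few extreme outliers inflate it for everyone, which is one reason finer-grained scales are common in practice.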

5. Data Security and Privacy by Design

We prioritize data security and privacy in every phase of development. Our LLM solutions follow a privacy-by-design approach, meaning that data protection is integrated into the architecture from the start.

We implement encryption for data at rest and in transit, as well as strict access controls to prevent unauthorized access.

Additionally, we utilize advanced privacy-preserving methods such as federated learning and differential privacy, which help ensure that your sensitive data remains protected throughout the AI lifecycle. Our continuous security monitoring and compliance auditing ensure that your data stays secure, and your AI application remains trustworthy.
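Federated learning keeps raw data at each site and shares only model updates. The Python sketch below shows the core aggregation step of federated averaging (FedAvg): a central server averages the clients’ weight vectors, weighting each by how many examples that client trained on. The three sites, their weights, and their example counts are hypothetical, and real deployments add secure aggregation and run many training rounds.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg step: average client weight vectors, weighted by local example counts."""
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    avg = [0.0] * dim
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            avg[i] += w * n / total
    return avg


# Three hypothetical sites train locally; only (weights, example_count) leaves each site.
updates = [([0.2, 1.0], 100), ([0.4, 0.0], 300), ([0.0, 2.0], 100)]
print(federated_average(updates))  # approximately [0.28, 0.6]
```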

6. Business Impact and ROI Focus

At Intellivon, every custom LLM solution is designed with a focus on business impact and ROI. Our solutions help drive measurable returns through automation, smarter decision-making, and innovation enablement. We establish clear KPIs from the start, so we can track success and ensure your investment delivers the results you’re aiming for.

Whether it’s improving customer service efficiency, reducing operational costs, or gaining deeper business insights, we design LLMs that help you maintain a competitive advantage in the long run.

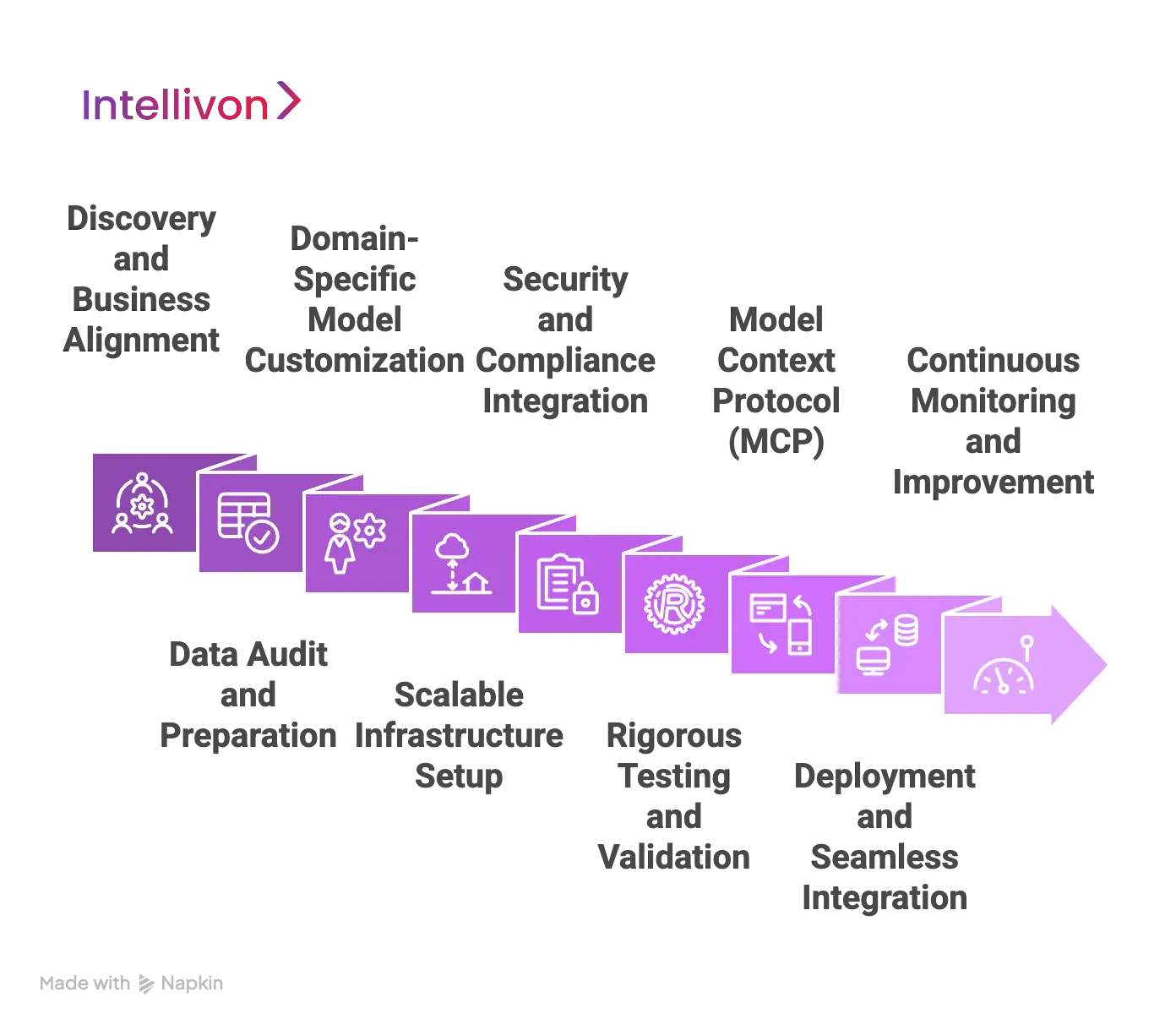

How Intellivon Builds Your Custom LLM Step-By-Step

Every enterprise has different needs, so our LLM experts design intelligent, scalable solutions tailored specifically to yours. Our proven step-by-step approach ensures that your custom LLM delivers maximum business value, superior performance, and robust security from day one. Here’s how we do it:

Step 1: Discovery and Business Alignment

Our process begins with a collaborative discovery phase. We partner with key stakeholders within your organization to thoroughly understand your business objectives, challenges, and workflows.

This ensures that the custom LLM we build is aligned with your broader goals. Whether it’s increasing operational efficiency, enhancing customer experiences, or driving innovation, we identify the specific use cases where an LLM will create the most value for your enterprise.

If you’re in the healthcare sector, we’ll identify use cases like improving patient interaction or streamlining medical record analysis. For retail companies, we might focus on automating customer support or predictive sales analysis.

Step 2: Data Audit and Preparation

The quality of your data is crucial for building an effective LLM. In this step, we conduct a comprehensive audit of your data sources. We assess data quality, relevance, and completeness. Once we identify any gaps, we take the necessary steps to clean, enrich, and standardize the data.

We also ensure that the data complies with privacy and security standards such as GDPR, HIPAA, and CCPA.

A financial institution may have data scattered across multiple platforms. Our team would consolidate and clean that data, ensuring it’s ready for training an LLM that can detect fraudulent activity in real time, while ensuring full compliance with privacy laws.
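A data audit usually starts with simple, measurable checks. As a minimal sketch (the field names and records are hypothetical), the Python function below reports per-field completeness and duplicate transaction IDs, the kind of issues that surface when data from several platforms is consolidated before training.

```python
from collections import Counter


def audit(records: list[dict], required: list[str], key: str) -> dict:
    """Summarize completeness of required fields and duplicate primary keys."""
    n = len(records) or 1  # avoid dividing by zero on an empty extract
    missing = Counter()
    for rec in records:
        for field in required:
            if rec.get(field) in (None, ""):
                missing[field] += 1
    key_counts = Counter(rec.get(key) for rec in records)
    return {
        "rows": len(records),
        "completeness": {f: 1 - missing[f] / n for f in required},
        "duplicate_keys": sorted(k for k, c in key_counts.items() if c > 1 and k is not None),
    }


# Hypothetical transactions consolidated from two source systems.
rows = [
    {"txn_id": "T1", "amount": 120.0, "merchant": "Acme"},
    {"txn_id": "T2", "amount": None, "merchant": "Globex"},
    {"txn_id": "T1", "amount": 120.0, "merchant": "Acme"},  # same txn, second system
    {"txn_id": "T3", "amount": 75.5, "merchant": ""},
]
report = audit(rows, ["amount", "merchant"], "txn_id")
print(report)
```

Here both required fields are 75% complete and T1 appears twice, so the cleaning step would deduplicate T1 and either backfill or drop the rows with a missing amount or merchant.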

Step 3: Domain-Specific Model Customization

Once your data is ready, we move to fine-tuning the model. Unlike off-the-shelf models that are designed for general-purpose use, we take a domain-specific approach to tailor the model to your enterprise’s unique requirements.

We adapt foundation models using your proprietary data, industry-specific language, and workflows. This ensures that the LLM understands the context of your business, delivering more accurate and relevant results than a generic AI solution.

Example: For a legal firm, we’ll fine-tune the LLM to understand complex legal terminology, helping automate the review of contracts and identify key legal terms or clauses.
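Fine-tuning data is typically assembled as input/output examples. The Python sketch below converts labeled contract clauses into chat-style records in JSON Lines format, a layout built on a `messages` list of role/content pairs that many fine-tuning tools accept. The system prompt, clauses, and labels are hypothetical, and the exact schema should be checked against whichever training framework is used.

```python
import json

SYSTEM_PROMPT = "You are a contract analyst. Name the type of the clause provided."


def to_chat_example(clause: str, label: str) -> dict:
    """Wrap one labeled clause as a chat-style supervised training example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": clause},
            {"role": "assistant", "content": label},
        ]
    }


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_example(clause, label)) for clause, label in pairs) + "\n"


# Hypothetical labeled clauses; a real set would come from the firm's reviewed contracts.
pairs = [
    ("Each party shall indemnify the other against third-party claims arising from its breach.",
     "Indemnification"),
    ("This Agreement is governed by the laws of the State of New York.", "Governing law"),
]
print(to_jsonl(pairs))
```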

Step 4: Scalable Infrastructure Setup

Building a scalable infrastructure is critical for ensuring that your LLM can grow with your enterprise. We deploy your solution on a cloud-based, on-premises, or hybrid architecture depending on your needs.

Using technologies like container orchestration, distributed training, and quantization, we ensure the model is not only cost-effective but also highly performant, with low latency and the ability to scale smoothly as your business grows.

A global e-commerce platform might require a scalable solution that handles thousands of product queries per minute. Our cloud-based solution is designed to scale automatically, ensuring performance remains steady even during high-traffic periods.
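Automatic scaling is typically driven by a simple proportional rule. The Python sketch below mirrors the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric), with a tolerance band to avoid constant resizing. Using queries per minute per replica as the metric, and the specific bounds, are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 50, tolerance: float = 0.1) -> int:
    """Proportional autoscaling: resize so the per-replica metric approaches the target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid churn
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical target: 60 product queries per minute per model replica.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

At 90 queries per minute per replica against a target of 60, four replicas scale to six. A small overshoot such as 62 stays within the 10% tolerance and triggers no change.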

Step 5: Security and Compliance Integration

Security and compliance are always top priorities at Intellivon. In this phase, we integrate data encryption, strict access controls, and privacy-preserving techniques into your LLM. We implement methods like differential privacy and federated learning to ensure that sensitive data is protected. Continuous security monitoring and auditing help maintain the trustworthiness of the AI model throughout its lifecycle, from development to deployment.

For a healthcare provider, we ensure the model complies with HIPAA standards, protecting patient data while using the LLM for tasks like predicting patient outcomes or recommending treatments.
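Strict access control usually means deny-by-default, role-based checks in front of every data source the model can reach. The Python sketch below gates retrieval of patient records for an LLM prompt and logs each attempt for auditing. The role names, permission strings, and placeholder lookup are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission map; a real deployment would load this from an IAM system.
ROLE_PERMISSIONS = {
    "clinician": {"read_phi", "query_model"},
    "analyst": {"query_model"},
    "auditor": {"read_audit_log"},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: allow only if one of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


def fetch_context(user: User, patient_id: str, audit_log: list[str]) -> str:
    """Gate PHI retrieval for an LLM prompt and record every attempt."""
    allowed = is_allowed(user, "read_phi")
    audit_log.append(f"{user.name} read_phi {patient_id} {'ALLOW' if allowed else 'DENY'}")
    if not allowed:
        raise PermissionError(f"{user.name} may not read PHI")
    return f"<records for {patient_id}>"  # placeholder for the real record lookup
```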

Step 6: Rigorous Testing and Validation

Before your custom LLM goes live, we conduct extensive testing to ensure it meets your enterprise’s high standards. This includes evaluating accuracy, fairness, latency, and robustness in real-world scenarios.

We address any issues by fine-tuning the model iteratively and making adjustments based on feedback. This testing phase ensures that your LLM performs optimally and that measurable biases are identified and reduced before launch.
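Parts of this validation can be automated as a repeatable harness. The Python sketch below scores exact-match accuracy and nearest-rank latency percentiles over a test set. The stand-in model and questions are hypothetical, and fairness and robustness checks would need additional, task-specific test sets and metrics.

```python
import math
import time
from typing import Callable


def evaluate(model: Callable[[str], str], cases: list[tuple[str, str]]) -> dict:
    """Exact-match accuracy plus nearest-rank latency percentiles (seconds)."""
    latencies, correct = [], 0
    for prompt, expected in cases:
        start = time.perf_counter()
        answer = model(prompt)
        latencies.append(time.perf_counter() - start)
        correct += int(answer.strip().lower() == expected.strip().lower())
    latencies.sort()

    def percentile(p: float) -> float:
        rank = math.ceil(p / 100 * len(latencies))  # nearest-rank method
        return latencies[max(rank, 1) - 1]

    return {"accuracy": correct / len(cases),
            "p50_s": percentile(50), "p95_s": percentile(95)}


# A stand-in "model" for illustration; swap in a call to the real endpoint.
canned = {"Capital of France?": "Paris", "2 + 2?": "4",
          "Largest planet?": "Saturn", "Color of a clear daytime sky?": "blue"}
cases = [("Capital of France?", "Paris"), ("2 + 2?", "4"),
         ("Largest planet?", "Jupiter"), ("Color of a clear daytime sky?", "Blue")]
report = evaluate(lambda p: canned.get(p, ""), cases)
print(report["accuracy"])  # 0.75
```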

Step 7: Model Context Protocol (MCP)

Model Context Protocol (MCP) is an open standard for connecting LLM applications to external tools and data sources. We use it to keep your LLM contextually aware and adaptive throughout its deployment.

Through MCP servers that expose your systems, such as CRM records, knowledge bases, or conversation history stores, the model can fetch relevant context at request time instead of relying only on what it learned during training. This allows it to provide personalized, accurate responses over time, which is especially critical for businesses that require continuity in customer interactions or work with evolving datasets and requirements.

For a customer service AI, an MCP connection to the conversation history store lets the model draw on past interactions with the same customer, enabling seamless, context-aware interactions and more personalized, efficient service.
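MCP messages use JSON-RPC 2.0, and a client asks an MCP server to run a tool with a `tools/call` request. The Python sketch below builds such a request and extracts the text from a result. The tool name `get_conversation_history` and its arguments are hypothetical, and the transport and initialization handshake are omitted, so the MCP specification remains the authority on message details.

```python
import itertools
import json

_request_ids = itertools.count(1)


def tool_call_request(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def text_from_result(response_json: str) -> str:
    """Join the text items in a tools/call result, raising on JSON-RPC errors."""
    response = json.loads(response_json)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "tool call failed"))
    items = response["result"].get("content", [])
    return "\n".join(item["text"] for item in items if item.get("type") == "text")


# Hypothetical tool exposed by a CRM-backed MCP server.
req = tool_call_request("get_conversation_history", {"customer_id": "C-1042", "limit": 3})
print(req)
```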

Step 8: Deployment and Seamless Integration

Once testing is complete, we integrate your custom LLM into your existing systems and workflows. Whether it’s your CRM, ERP, or customer support platforms, we ensure a seamless integration that empowers your team to leverage AI-driven insights easily. Our modular APIs and tools make the deployment smooth, so you can start benefiting from your custom LLM right away.

For a manufacturing company, we integrate the LLM into their supply chain management system, allowing the AI to analyze inventory data and make real-time recommendations on order optimization, without disrupting existing workflows.
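Integration code is usually a thin adapter between the existing system and the model. The Python sketch below, with hypothetical field names, takes low-stock items from an inventory feed, asks the model for reorder advice, and keeps only well-formed answers for known SKUs. `generate` is a stand-in for whatever client calls the deployed model, which keeps the adapter testable without a live endpoint.

```python
from typing import Callable


def reorder_advice(inventory: list[dict], generate: Callable[[str], str]) -> dict[str, str]:
    """Ask the model about low-stock SKUs and keep only well-formed answers."""
    low = [item for item in inventory if item["on_hand"] < item["reorder_point"]]
    if not low:
        return {}  # nothing to ask; skip the model call entirely
    low_skus = {item["sku"] for item in low}
    prompt = "For each SKU below, reply on one line as 'SKU: recommendation'.\n" + "\n".join(
        f"{item['sku']}: on_hand={item['on_hand']}, reorder_point={item['reorder_point']}"
        for item in low
    )
    advice = {}
    for line in generate(prompt).splitlines():
        sku, sep, text = line.partition(":")
        if sep and sku.strip() in low_skus:  # drop malformed or unexpected lines
            advice[sku.strip()] = text.strip()
    return advice


def fake_generate(prompt: str) -> str:
    """Stub model response for illustration, including lines the adapter should ignore."""
    return "SKU-7: reorder 200 units\nnote: demand is seasonal\nSKU-9: hold"


inventory = [
    {"sku": "SKU-7", "on_hand": 40, "reorder_point": 100},
    {"sku": "SKU-9", "on_hand": 500, "reorder_point": 100},
]
print(reorder_advice(inventory, fake_generate))
```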

Step 9: Continuous Monitoring and Improvement

Our job doesn’t stop once the LLM is deployed. We establish a real-time monitoring system to track the performance of the model.

We continuously collect user feedback and use incremental learning to enhance the model’s performance. This ensures that your custom LLM evolves alongside your business, data patterns, and emerging use cases, delivering sustained ROI.
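Real-time monitoring of user feedback can start with a sliding-window check. The minimal Python sketch below tracks the share of positive ratings over the most recent interactions and flags the model for review when that share drops below a threshold. The window size, threshold, and minimum sample count are illustrative assumptions, and the retraining that follows a flag is not shown.

```python
from collections import deque


class FeedbackMonitor:
    """Track the share of positive user ratings over a sliding window."""

    def __init__(self, window: int = 100, alert_below: float = 0.8, min_samples: int = 20):
        self.ratings = deque(maxlen=window)  # oldest ratings fall out automatically
        self.alert_below = alert_below
        self.min_samples = min_samples

    def record(self, positive: bool) -> None:
        self.ratings.append(positive)

    def approval_rate(self) -> float:
        return sum(self.ratings) / len(self.ratings) if self.ratings else 1.0

    def needs_review(self) -> bool:
        """Alert once enough samples exist and approval falls below the threshold."""
        return len(self.ratings) >= self.min_samples and self.approval_rate() < self.alert_below
```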

Our step-by-step process ensures that your custom LLM is secure, scalable, and high-performing, enabling your enterprise to leverage AI with confidence. By working closely with your team and stakeholders, we ensure that every solution we deliver is not just a tool, but a strategic asset that propels your business forward.

Tools and Technology Powering Our Custom LLM Development

At Intellivon, we use a mix of cutting-edge tools and technologies to build custom LLMs that meet the specific needs of large enterprises. From data preparation to deployment and continuous improvement, the right tools ensure that each solution is scalable, secure, and high-performing. Here’s a breakdown of the key technologies that power our custom LLM development process.

1. NLP Frameworks

NLP is the backbone of any LLM. We use advanced NLP frameworks to ensure that the model can understand and generate human-like text. Some of the leading NLP frameworks we use include:

- spaCy: This open-source library is known for its efficiency and speed in processing large volumes of text data. We use spaCy for text preprocessing, entity recognition, and part-of-speech tagging.

- Hugging Face Transformers: This library provides pre-trained models like BERT, GPT, and RoBERTa that we fine-tune to create highly specific LLMs for your enterprise. It allows us to quickly adapt models for different tasks, such as text classification, question answering, and summarization.

- Apache OpenNLP: We use this Java-based toolkit for basic NLP tasks like tokenization, named entity recognition (NER), and sentence segmentation. It’s especially useful in Java environments that require rapid development and deployment.

These frameworks allow us to fine-tune models to understand specific domain language and industry jargon, ensuring that the LLM delivers precise results.

2. Machine Learning (ML) Platforms

Machine learning forms the core of our LLMs, helping models learn from data and improve over time. The ML platforms we use enable robust training, fast iteration, and seamless deployment of LLMs:

- TensorFlow: A leading open-source ML framework by Google, used for building and training deep learning models. We use TensorFlow for training large models and performing tasks like text generation, classification, and predictive analytics.

- PyTorch: This platform, developed by Facebook, is preferred for research and development in natural language processing. We use PyTorch for custom LLM training, allowing us to implement complex architectures and experiment with new algorithms quickly.

- Keras: A high-level deep learning API that runs on TensorFlow (and, since Keras 3, also on JAX and PyTorch). Keras simplifies the process of creating neural networks, and we use it for rapid prototyping and testing of LLMs.

These platforms help us build LLMs with the flexibility and scalability required to meet the growing needs of large enterprises.

3. Cloud Infrastructure for Scalability

We deploy our custom LLMs on cloud-based infrastructures to ensure scalability and high availability. By leveraging the power of cloud computing, we can scale our models to handle increasing data and usage without compromising on performance.

- Amazon Web Services (AWS): With its EC2 instances and SageMaker platform, AWS provides a flexible and scalable environment for training and deploying LLMs. We use AWS for large-scale model training, enabling us to quickly adapt the LLM as business needs evolve.

- Google Cloud Platform (GCP): Vertex AI, the successor to GCP’s AI Platform, offers managed tools for training machine learning models. We use GCP’s infrastructure for large-scale deployment and data management, keeping LLMs accessible and efficient as usage grows.

- Microsoft Azure: Azure Machine Learning provides tools for model deployment and monitoring. We use Azure to host LLMs that require robust security and compliance features, which are often essential in industries like healthcare and finance.

These cloud platforms allow us to deploy and scale LLMs quickly, ensuring that enterprises get the most out of their AI investments.
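When choosing cloud instances for serving, a common first estimate is the accelerator memory needed for the model weights alone: parameter count multiplied by bytes per parameter. The sketch below does that arithmetic. The 20% overhead for activations, KV cache, and runtime buffers is a rough, hypothetical assumption; real requirements depend on batch size, context length, and the serving stack.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def serving_memory_gb(num_params: float, precision: str = "fp16",
                      overhead: float = 0.20) -> float:
    """Weights plus an assumed fractional overhead (hypothetical 20% default)."""
    return weight_memory_gb(num_params, precision) * (1 + overhead)


if __name__ == "__main__":
    for precision in ("fp32", "fp16", "int8"):
        print(precision, round(serving_memory_gb(7e9, precision), 1), "GB")
```

For a 7-billion-parameter model, the weights alone take 28 GB in fp32, 14 GB in fp16, and 7 GB in int8, which is why precision is usually the first lever for fitting a model onto a given instance type.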

4. Data Privacy and Security Tools

Protecting enterprise data is a top priority, especially when working with sensitive information. We use a range of tools and technologies to ensure data privacy and compliance with regulations like GDPR, HIPAA, and CCPA:

- Encryption Tools: We use AES (Advanced Encryption Standard) to encrypt data at rest and TLS, typically with AES-based cipher suites, to protect data in transit, so sensitive data stays protected both in storage and on the network.

- Differential Privacy: To preserve privacy while still using data to train LLMs, we apply differential privacy techniques, which add calibrated noise during training so that the trained model reveals very little about any single individual’s record.

- Secure Data Platforms: We integrate with managed security services such as AWS KMS (Key Management Service), which creates and controls encryption keys, and Google Cloud’s Data Loss Prevention API, which detects and de-identifies sensitive data, to safeguard and anonymize enterprise data.

These security measures ensure that our custom LLMs not only perform at their best but also meet strict data protection standards.
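Differential privacy during training is commonly implemented with DP-SGD: each example's gradient is clipped to a maximum L2 norm, the clipped gradients are summed, Gaussian noise scaled to that norm is added, and the result is averaged. The sketch below shows only that aggregation step on plain Python lists. It omits the privacy accounting that converts the noise multiplier into an (ε, δ) guarantee, and the parameter values used are illustrative assumptions.

```python
import math
import random


def clip(grad: list[float], max_norm: float) -> list[float]:
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]


def dp_average_gradient(per_example_grads: list[list[float]], max_norm: float,
                        noise_multiplier: float, rng: random.Random) -> list[float]:
    """Clip each per-example gradient, sum, add Gaussian noise, then average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        total = [t + c for t, c in zip(total, clip(grad, max_norm))]
    # Noise standard deviation is the noise multiplier times the clipping norm.
    sigma = noise_multiplier * max_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [v / len(per_example_grads) for v in noisy]


if __name__ == "__main__":
    grads = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]
    print(dp_average_gradient(grads, max_norm=1.0, noise_multiplier=1.1,
                              rng=random.Random(0)))
```

Clipping bounds how much any one record can move the model, and the noise hides whatever influence remains; production training would use a maintained library that also tracks the cumulative privacy budget.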

5. Continuous Integration and Deployment (CI/CD) Tools

For seamless deployment, monitoring, and updating of LLMs, we use CI/CD tools that automate the process of building, testing, and deploying models. This ensures that updates can be rolled out efficiently and with minimal disruption:

- Jenkins: Used to automate the process of building, testing, and deploying models, ensuring that we maintain consistent quality and performance at all stages.

- Docker: Containerizing LLMs using Docker ensures that the model is portable and can run consistently across different environments, whether on-premises or in the cloud.

- Kubernetes: For managing containerized applications, Kubernetes ensures that our LLMs scale efficiently and run smoothly across multiple environments. It’s particularly useful for large-scale deployments.

These tools streamline our development pipeline, enabling us to deliver high-quality, reliable LLM solutions on time.
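One way a pipeline such as Jenkins can enforce consistent quality is a gate stage that compares a candidate model's evaluation metrics with the current production baseline and fails the build on a regression. The sketch below assumes the metrics have already been computed into dictionaries; the metric names and tolerances are hypothetical.

```python
# Hypothetical higher-is-better metrics, each with the drop allowed versus baseline.
TOLERANCES = {"accuracy": 0.01, "f1": 0.02}


def find_regressions(baseline: dict[str, float], candidate: dict[str, float],
                     tolerances: dict[str, float] = TOLERANCES) -> list[str]:
    """Return a message for every metric that is missing or dropped too far."""
    problems = []
    for metric, allowed_drop in tolerances.items():
        if metric not in candidate:
            problems.append(f"{metric}: missing from candidate results")
        elif candidate[metric] < baseline[metric] - allowed_drop:
            problems.append(
                f"{metric}: {candidate[metric]:.3f} is below baseline "
                f"{baseline[metric]:.3f} minus tolerance {allowed_drop}"
            )
    return problems


def gate_exit_code(baseline: dict[str, float], candidate: dict[str, float]) -> int:
    """0 lets the pipeline continue; any non-zero value fails the CI stage."""
    problems = find_regressions(baseline, candidate)
    for p in problems:
        print("REGRESSION", p)
    return 1 if problems else 0
```

A CI step could load both metric files, then call `sys.exit(gate_exit_code(baseline, candidate))`, so that a regression stops the build before a new Docker image is deployed.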

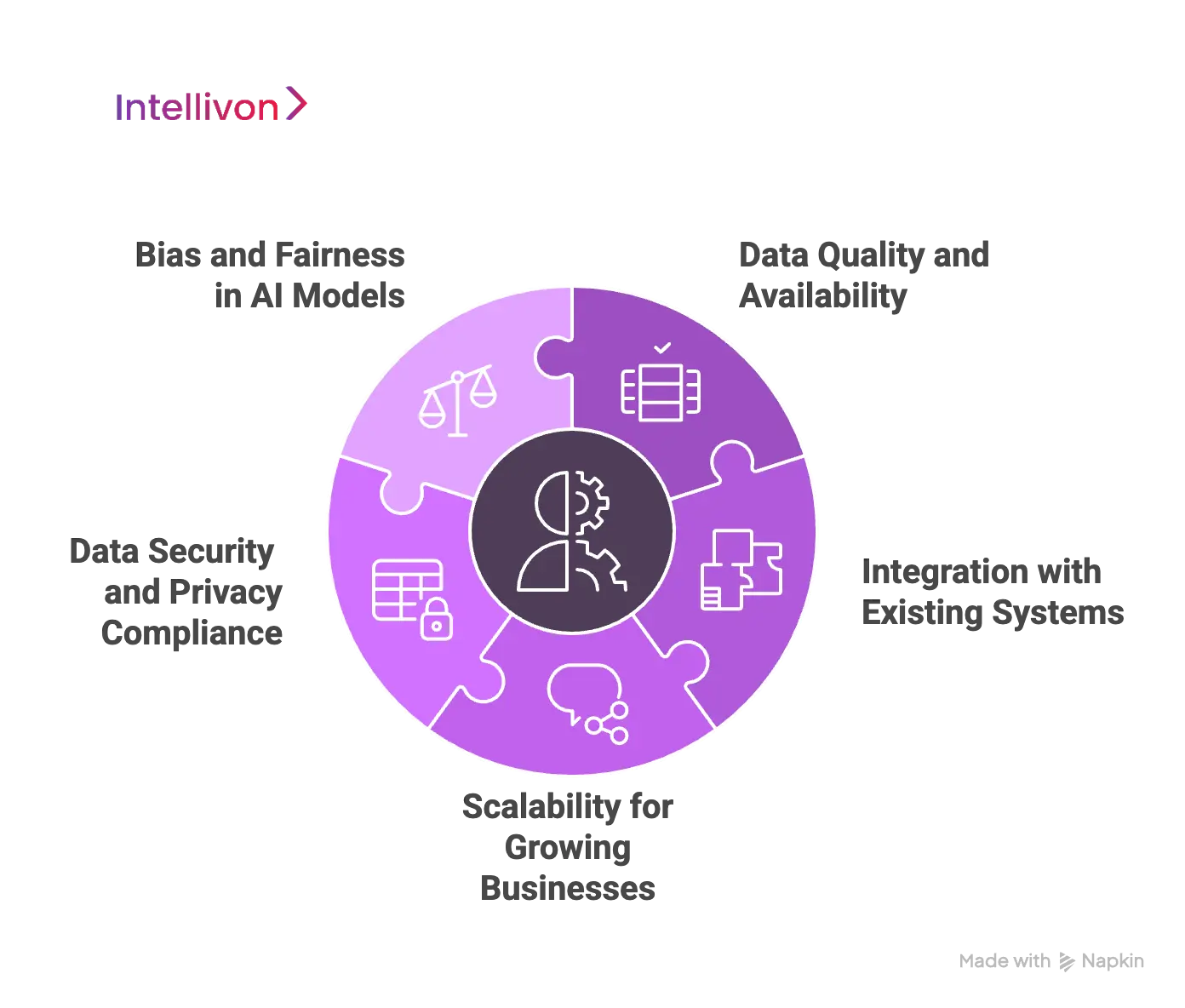

Custom Enterprise LLM Integration Challenges and Our Solutions

Implementing custom LLMs for enterprises can be complex, and several challenges must be addressed to ensure a smooth deployment and long-term success. At Intellivon, we are committed to providing tailored solutions that overcome these obstacles. Here are five common challenges and how we address them:

1. Data Quality and Availability

Challenge: Enterprises often struggle with poor data quality or inaccessible data, which can negatively impact the performance of LLMs. Without clean, relevant, and well-structured data, even the most advanced models can underperform.

Our Solution: We conduct a comprehensive data audit to identify gaps and inconsistencies in your data. We then cleanse, augment, and structure the data so it is ready for training. This approach is designed to give the model high-quality training data, which leads to better outcomes.
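A data audit usually starts with simple, measurable checks. The sketch below normalizes whitespace, drops records that are empty or shorter than a minimum length, removes exact duplicates (case-insensitive, after normalization), and reports a count for each issue. The `min_chars` threshold is a hypothetical choice; a production audit would add checks such as language detection, PII scanning, and near-duplicate detection.

```python
import re
from collections import Counter


def audit_and_clean(records: list[str], min_chars: int = 20) -> tuple[list[str], Counter]:
    """Return cleaned records plus a Counter describing what was removed."""
    report = Counter()
    seen = set()
    cleaned = []
    for raw in records:
        text = re.sub(r"\s+", " ", raw).strip()  # collapse runs of whitespace
        if not text:
            report["empty"] += 1
        elif len(text) < min_chars:
            report["too_short"] += 1
        elif text.lower() in seen:
            report["duplicate"] += 1
        else:
            seen.add(text.lower())
            cleaned.append(text)
    report["kept"] = len(cleaned)
    return cleaned, report


if __name__ == "__main__":
    docs = ["Invoice  #123 was paid on time.", "invoice #123 was paid on time.",
            "   ", "ok", "Shipment delayed due to customs hold."]
    kept, report = audit_and_clean(docs)
    print(kept)
    print(dict(report))
```

The report doubles as the audit output: it shows how much of the raw corpus was unusable and why, which is the evidence needed before deciding whether augmentation is required.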

2. Integration with Existing Systems

Challenge: Integrating a custom LLM into an enterprise’s existing IT infrastructure can be difficult, especially if the organization uses legacy systems that are not AI-friendly. Seamless integration is essential for ensuring that the LLM works as expected without disrupting daily operations.

Our Solution: We ensure smooth integration by customizing the LLM to fit within your existing IT ecosystem, whether it’s a CRM, ERP, or custom software. Our modular APIs and tools allow for easy integration, ensuring that the LLM works harmoniously with your existing systems.
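A common way to build modular integrations like this is the adapter pattern: each enterprise system gets a small connector that maps its records into one shared shape, so the LLM-facing code never depends on a specific CRM or ERP. The sketch below uses hypothetical `CrmConnector` and `ErpConnector` classes with in-memory data in place of real vendor APIs.

```python
from dataclasses import dataclass
from typing import Iterable, Protocol


@dataclass
class Document:
    """Shared shape that every connector produces for the LLM layer."""
    source: str
    doc_id: str
    text: str


class Connector(Protocol):
    def fetch(self) -> Iterable[Document]: ...


class CrmConnector:
    """Hypothetical CRM adapter; a real one would call the vendor's API."""

    def __init__(self, tickets: list[dict]):
        self.tickets = tickets

    def fetch(self) -> Iterable[Document]:
        for t in self.tickets:
            yield Document("crm", str(t["ticket_id"]), f"{t['subject']}: {t['body']}")


class ErpConnector:
    """Hypothetical ERP adapter reading purchase-order rows."""

    def __init__(self, orders: list[dict]):
        self.orders = orders

    def fetch(self) -> Iterable[Document]:
        for o in self.orders:
            yield Document("erp", o["po"], f"Purchase order for {o['qty']} x {o['sku']}")


def build_context(connectors: list[Connector], max_docs: int = 10) -> str:
    """Merge documents from all connectors into one prompt context block."""
    docs = [d for c in connectors for d in c.fetch()][:max_docs]
    return "\n".join(f"[{d.source}:{d.doc_id}] {d.text}" for d in docs)
```

Adding a new system then means writing one more connector with a `fetch` method; nothing downstream of `build_context` has to change.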

3. Scalability for Growing Businesses

Challenge: As businesses grow, so do their needs. An LLM that works well in a small-scale environment might face challenges when scaling to handle increased traffic or data volume.

Our Solution: We design LLM solutions with scalable infrastructure, leveraging technologies like container orchestration, distributed training, and cloud-based architectures. This allows your LLM to handle growing data, traffic, and complexity without sacrificing performance or efficiency.
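For container orchestration, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), leaving the replica count unchanged when the ratio is within a tolerance (0.1 by default). The sketch below reproduces that arithmetic so capacity plans can be sanity-checked. The min/max bounds and the utilization figures in the tests are assumptions, and the real controller adds further behavior such as stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Apply the HPA scaling ratio, then clamp to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, four inference pods averaging 90% GPU utilization against a 60% target scale to six pods, while a reading of 63% stays at four because it falls inside the tolerance.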

4. Ensuring Data Security and Privacy Compliance

Challenge: Data privacy and security are top concerns, especially for industries like healthcare, finance, and law. Ensuring compliance with regulations like GDPR, HIPAA, and CCPA while protecting sensitive data is a significant challenge.

Our Solution: We implement privacy-by-design measures, including data encryption, federated learning, and differential privacy. Our LLM solutions are built to support compliance with industry regulations and undergo regular security audits to keep data protected.
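Federated learning keeps raw data on each participant's infrastructure and shares only model updates. Its best-known aggregation rule, federated averaging (FedAvg), combines locally trained weights in proportion to how many examples each site trained on. The sketch below shows only that weighted average on plain lists; the site names and numbers are hypothetical, and a real deployment also needs secure transport and often secure aggregation or differential privacy on the updates.

```python
def federated_average(site_updates: dict[str, tuple[list[float], int]]) -> list[float]:
    """Weighted average of model weights, weighted by each site's example count.

    site_updates maps a site name to (local_weights, num_local_examples).
    """
    total_examples = sum(n for _, n in site_updates.values())
    if total_examples == 0:
        raise ValueError("no training examples reported")
    dim = len(next(iter(site_updates.values()))[0])
    averaged = [0.0] * dim
    for weights, n in site_updates.values():
        if len(weights) != dim:
            raise ValueError("all sites must report weights of the same shape")
        share = n / total_examples
        averaged = [a + share * w for a, w in zip(averaged, weights)]
    return averaged
```

A site that trained on 300 records therefore counts three times as much as one that trained on 100, and neither site ever sends those records to the central server.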

5. Bias and Fairness in AI Models

Challenge: LLMs are susceptible to biases in their outputs, especially if the training data is unbalanced or not diverse enough. This can lead to unfair or inaccurate results, which can negatively affect business decisions.

Our Solution: We prioritize fairness and accuracy throughout the development process by testing models for bias and refining them as needed. We use diverse training data and run regular bias audits to minimize unfair or inaccurate results across use cases.
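One simple, widely used bias check is demographic parity: compare the rate of positive model outcomes (for example, "approve" or "escalate") across groups. The sketch below computes per-group positive rates and the largest gap between any two groups. The group labels and the 0.1 review threshold are hypothetical, and demographic parity is only one of several fairness metrics; others, such as equalized odds, also use ground-truth labels.

```python
from collections import defaultdict


def positive_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Fraction of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += pred
        counts[group][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


def demographic_parity_gap(predictions: list[int], groups: list[str]) -> float:
    """Largest difference in positive rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def flag_bias(predictions: list[int], groups: list[str], threshold: float = 0.1) -> bool:
    """True when the gap exceeds the (hypothetical) review threshold."""
    return demographic_parity_gap(predictions, groups) > threshold
```

Running a check like this on every evaluation set, per use case, turns "regular bias audits" into a number that can be tracked over time and wired into the same CI gate as accuracy.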

By focusing on data quality, seamless integration, scalability, security, and fairness, we ensure that your LLM solution is tailored to the specific needs of your business while providing long-term value. With Intellivon, your enterprise can confidently harness the power of AI to drive innovation and operational success.

Conclusion

Custom LLMs offer enterprises significant advantages over off-the-shelf solutions, including tailored features, improved scalability, and enhanced data security.

By addressing key challenges such as data quality, system integration, and regulatory compliance, businesses can unlock the full potential of AI. With the right approach, custom LLMs drive efficiency, innovation, and long-term success, helping enterprises stay competitive in a rapidly evolving digital landscape.

Partner with Intellivon for Your Custom LLM Development

With over 11 years of experience in AI for regulated industries and more than 500 successful custom AI implementations, we are your trusted partner in building tailored AI solutions. Whether it’s automating compliance workflows or providing actionable insights for risk management, we help enterprises turn complex regulatory challenges into operational advantages.

Why Choose Intellivon for Your Custom AI Solution?

- Tailored AI Models for Compliance: We design AI systems specifically for your industry’s unique regulatory needs, ensuring your solution is compliant and adaptable.

- Seamless Integration with Existing Systems: Our custom AI models integrate smoothly with your ERP, CRM, and legacy systems, ensuring data sovereignty and operational efficiency.

- Long-Term ROI Commitment: Our custom AI solutions are designed to deliver tangible business outcomes. We help streamline processes, reduce manual errors, and enhance decision-making, all while ensuring regulatory adherence.

Ready to Transform Your Business with Custom AI?

Book a discovery call with our AI consultants today and get:

- A comprehensive audit of your AI readiness and regulatory needs

- A tailored AI compliance roadmap for your industry

- A detailed implementation plan with an ROI forecast

FAQs

Q1. What is a Custom LLM, and why is it important for enterprises?

A1. A custom LLM is an AI system built specifically for an enterprise’s unique needs, trained on proprietary data and tailored to understand industry-specific language and workflows. Compared with off-the-shelf models, custom LLMs can offer higher accuracy, better contextual understanding, and more relevant output, helping enterprises improve efficiency, automate tasks, and support compliance with regulations.

Q2. How does a Custom LLM improve operational efficiency in enterprises?

A2. Custom LLMs streamline tasks like customer support, data analysis, and documentation by automating repetitive processes. This reduces manual workload, minimizes errors, and accelerates decision-making. By adapting to specific business needs and workflows, a custom LLM can boost productivity, reduce costs, and provide faster, more accurate insights, leading to higher operational efficiency.

Q3. What are the main benefits of using Custom LLMs over off-the-shelf models?

A3. Custom LLMs offer tailored features, improved scalability, and better data security compared to off-the-shelf models. They are fine-tuned to understand your business’s specific language, compliance needs, and workflows, delivering more accurate and context-aware results. Custom solutions also allow for seamless integration with existing systems, ensuring long-term scalability and adaptability to evolving business needs.

Q4. How do Custom LLMs ensure data privacy and compliance with regulations like GDPR and HIPAA?

A4. Custom LLMs are built with privacy-first architecture, ensuring sensitive data is protected through encryption, anonymization, and secure data handling practices. They can also be designed to comply with specific industry regulations like GDPR, HIPAA, and CCPA, ensuring that all data processing is secure and meets legal standards throughout the model’s lifecycle.
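Anonymization in practice often begins with pseudonymization: replacing direct identifiers with stable tokens before text reaches training or logging. The sketch below replaces email addresses with keyed HMAC-SHA256 tokens, so the same address always maps to the same token but cannot be reversed without the key. The regex, token format, and key value are assumptions; pseudonymized data can still count as personal data under GDPR, and real pipelines detect many more identifier types.

```python
import hashlib
import hmac
import re

# Simplified email pattern; real PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def pseudonymize(text: str, key: bytes) -> str:
    """Replace each email address with a keyed, deterministic token."""
    def _token(match: re.Match) -> str:
        # Lowercase first so "Jane@x.com" and "jane@x.com" map to one token.
        digest = hmac.new(key, match.group(0).lower().encode(), hashlib.sha256).hexdigest()
        return f"<EMAIL_{digest[:12]}>"
    return EMAIL_RE.sub(_token, text)


if __name__ == "__main__":
    # In production the key would come from a key manager, never from source code.
    key = b"example-key-from-a-secrets-manager"
    print(pseudonymize("Contact jane.doe@example.com or JANE.DOE@example.com", key))
```

Using a keyed HMAC rather than a plain hash matters: without the key, an attacker cannot rebuild the mapping by hashing a list of known email addresses.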

Q5. How do enterprises benefit from the scalability of Custom LLMs?

A5. Custom LLMs are designed for scalability, allowing them to grow with your enterprise. As your business expands, these models can handle increasing amounts of data, user interactions, and evolving demands. With technologies like cloud-based deployment and distributed training, custom LLMs maintain reliable performance as business operations scale, keeping efficiency and cost-effectiveness consistent over time.