The landscape of AI is rapidly evolving, particularly with large enterprises embracing LLMs. These powerful AI tools are becoming central to digital strategies. A recent survey shows that 72% of companies plan to increase LLM investments this year, with 40% expecting to invest over $250,000. This highlights the growing recognition of GenAI as essential for future business success.

However, despite this wave of investment, there’s a significant gap between ambition and implementation. While 88% of U.S. business leaders plan to increase AI budgets, only 1% report reaching AI maturity. Many AI projects remain superficial or fail to deliver expected outcomes. By 2027, over 40% of AI projects are expected to be canceled due to strategic failures, unclear value, and poor risk management. The potential failure of such projects stems from specific hesitations related to enterprise adoption, such as integration with existing systems, data privacy and compliance regulations, and choosing the wrong AI model. These challenges can slow down progress and reduce the effectiveness of AI strategies.

This guide will help enterprises overcome these challenges. It will provide a clear pathway for selecting the right LLM for your enterprise, whether it’s OpenAI, Mistral, or Claude. With Intellivon’s expertise in AI solutions, we can guide your organization through each step of LLM adoption. Our team of vetted AI engineers ensures the seamless integration of LLMs into your enterprise operations, helping you avoid common pitfalls and scale extensively.

Why Generative AI is Essential for Enterprise Growth

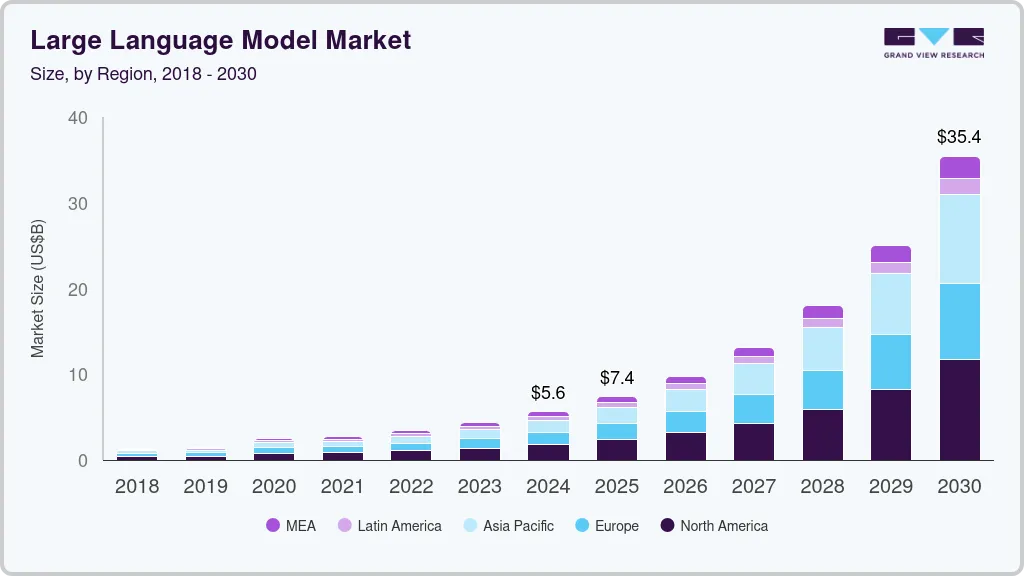

The global market for large language models (LLMs) was valued at USD 5.6 billion in 2024. It is expected to grow to USD 35.4 billion by 2030, with a compound annual growth rate (CAGR) of 36.9% from 2025 to 2030.

Key Market Insights:

- As of 2025, around 67% of organizations globally have adopted LLMs to support operations with generative AI. Additionally, 72% of enterprises plan to increase their LLM spending in 2025, with nearly 40% already investing over $250,000 annually in LLM solutions.

- 73% of enterprises are spending more than $50,000 each year on LLM-related technology and services, and global spending on generative AI (including LLMs) is expected to reach $644 billion this year.

- The global LLM market, valued at $4.5 billion in 2023, is projected to grow to $82.1 billion by 2033, with a compound annual growth rate (CAGR) of 33.7%.

- Retail and ecommerce lead the way with 27.5% of LLM implementations, while finance, healthcare, and technology are also high adopters.

- 88% of LLM users report improved work quality, including increased efficiency, better content, and enhanced decision support. More than 30% of enterprises are expected to automate over half of their network operations using AI/LLMs by 2026.

However, challenges remain, with 35% of users citing reliability and accuracy issues, particularly in domain-specific tuning. Data privacy and compliance concerns remain major barriers to LLM adoption, especially in regulated industries. While 67% of enterprises use LLMs in some capacity, fewer than a quarter report full commercial deployment, with many still in the experimental phase.

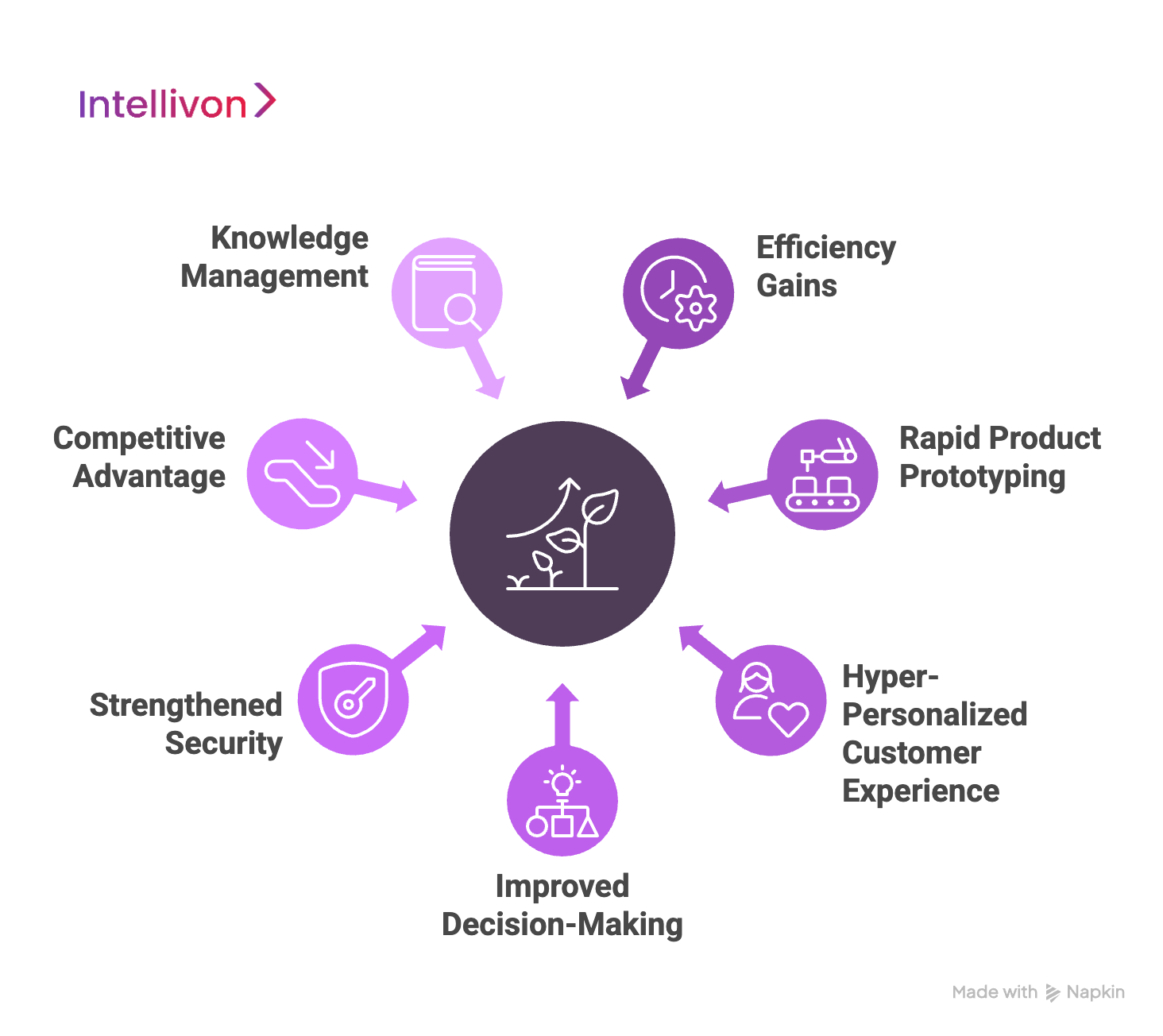

Benefits of LLM Adoption for Enterprises

Generative AI is becoming a key driver of enterprise growth. It transforms how businesses operate, innovate, and create value. LLMs provide several benefits across productivity, customer experience, and competitiveness.

1. Efficiency Gains

Generative AI automates many time-consuming tasks. These include document summarization, content generation, data analysis, and reporting. By automating routine work, LLMs free employees to focus on strategic and complex problem-solving. This leads to higher productivity and lower operational costs.

2. Rapid Product Prototyping

Enterprises use generative AI to rapidly prototype new product ideas. AI helps personalize marketing campaigns at scale and assists R&D in discovering new concepts. It also improves software development cycles. This helps businesses innovate faster and stay ahead of competitors.

3. Hyper-Personalized Customer Experience

Generative AI enables hyper-personalization. It tailors products, services, and recommendations in real time. AI-powered tools like chatbots and virtual assistants improve customer interactions, leading to higher satisfaction, better retention, and more revenue.

4. Improved Decision-Making

LLMs can process large, complex datasets to uncover valuable insights. This helps businesses make more informed decisions. AI can improve forecasting, optimize supply chains, and enhance risk management. It supports smarter, data-driven strategies.

5. Strengthened Security

AI-generated synthetic data helps detect fraud and strengthen security. It can simulate threats, test resilience against cyberattacks, and ensure compliance with security standards. This improves overall enterprise security.

6. Competitive Advantage

Adopting generative AI early allows enterprises to innovate and operate faster than competitors. This leads to market leadership by delivering unique products, personalized experiences, and operational excellence.

7. Knowledge Management

LLMs act as smart tools for knowledge management. They help employees find relevant information quickly and synthesize insights from multiple sources. This leads to better and faster decisions, boosting overall performance.

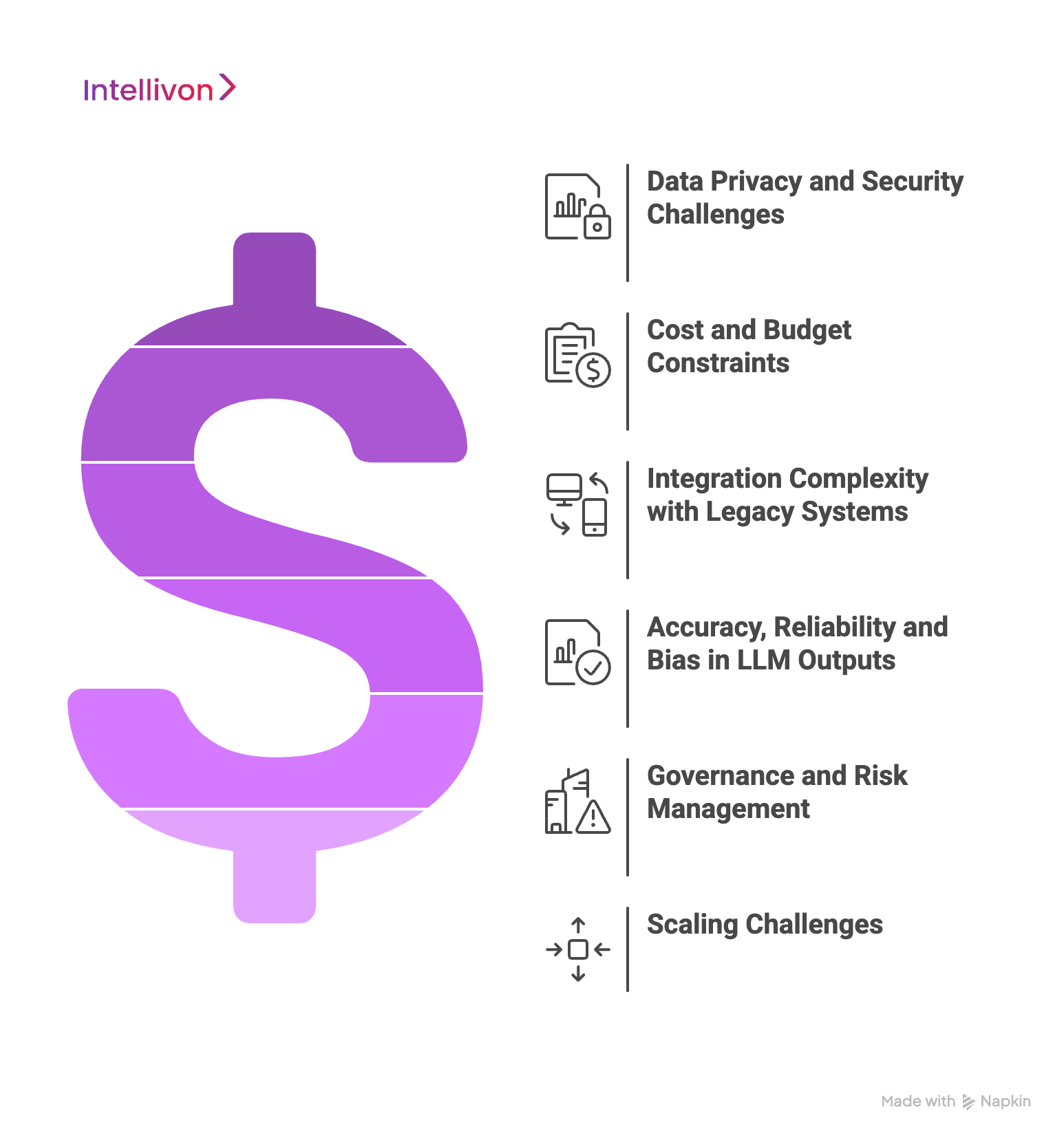

Understanding Enterprise Pain Points in LLM Adoption

As LLM adoption continues to rise, many organizations face a unique set of challenges that impede their ability to fully integrate and leverage these technologies for long-term success.

In this section, we’ll explore these pain points in greater detail. Understanding these challenges is crucial for businesses looking to achieve deep, transformative integration of LLMs.

1. Data Privacy and Security Challenges

Data privacy and security remain top concerns for enterprises adopting LLMs . In fact, 44% of businesses cite these issues as significant barriers. The nature of LLMs, which are trained on vast datasets, presents unique challenges.

A. Lack of Governance Controls

Current LLMs often lack strong data governance controls. This makes it easy for malicious actors to manipulate the system and extract sensitive information, posing serious risks for businesses.

B. The Black-Box Nature of LLMs

Many LLMs are viewed as “black boxes.” It’s difficult to understand how they arrive at their results. This lack of transparency complicates the identification of privacy breaches and hinders compliance with data protection regulations like GDPR.

2. Cost and Budget Constraints

LLMs can be expensive to implement, and 24% of enterprises cite budget limitations as a key concern. Costs vary widely, from low-cost, on-demand models to high-cost instances for large-scale operations.

A. Model Complexity and Its Costs

More complex models, like OpenAI’s GPT-4, require significant computational power, driving up costs. Larger LLMs typically offer better accuracy but require more resources to run, which results in higher expenses.

B.Token-Based Pricing Model

Most LLM providers use a pay-per-token model. This means businesses are charged based on how much input data and output they process. Larger volumes of data lead to higher costs, especially if special characters or non-English languages are involved.

3. Integration Complexity with Legacy Systems

Integrating LLMs with existing enterprise systems can be challenging. Although only 14% of enterprises flag integration complexity as a top issue, embedding LLMs into legacy systems requires substantial effort.

A. Fundamental Changes Needed

Real enterprise integration goes beyond simply adding an AI chatbot. It involves revamping service orchestration, compliance policies, and infrastructure monitoring to support LLMs. For example, a large financial institution may need to overhaul its CRM system to integrate LLMs seamlessly.

B. Contextual Understanding and Action

True integration means allowing LLMs to understand, generate, and act on natural language appropriately. This requires modifications across the existing IT infrastructure, ensuring compatibility and efficiency.

4. Accuracy, Reliability, and Bias in LLM Outputs

Despite their impressive capabilities, LLMs are not without errors. Enterprises face challenges like hallucinations, reliability issues, and bias in LLM outputs.

A. Hallucinations

LLMs sometimes “hallucinate” by generating incorrect or fabricated information. For instance, an LLM might invent facts that have no basis in reality, which can significantly undermine the reliability of business processes.

B. Bias in LLMs

LLMs can inherit biases from their training data, leading to unfair or discriminatory results. For example, an LLM used for hiring might unintentionally favor certain demographics based on biased training data, which could harm a company’s diversity efforts.

5. Governance and Risk Management

The high failure rate of AI projects is often linked to poor governance and risk management. LLMs introduce new risks that enterprises must proactively manage to avoid project failure.

A. Prompt Injection Risks

Prompt injection is a significant security risk where attackers manipulate LLM input prompts to extract sensitive data or trigger malicious actions. This could lead to unauthorized access to business-critical information or even social engineering attacks.

B. Shadow AI

Shadow AI refers to unauthorized AI model usage within an organization. This can lead to misconfigurations, unmonitored risks, and exposure of sensitive data, making it difficult for security teams to protect business assets.

6. Scaling Challenges

The increasing demand for AI-driven technologies is placing unprecedented stress on global infrastructure. Scaling LLM deployments faces both technical and logistical challenges.

A. Compute and Infrastructure Demands

LLMs require vast computational power, which strains data centers and network infrastructure. Global infrastructure is struggling to meet this demand, leading to delays in scaling LLMs.

B. Real-World Hurdles in Scaling

Scaling LLMs also faces real-world challenges such as supply chain delays, labor shortages, and regulatory barriers. For example, delays in the availability of required hardware or regulatory friction around grid access can slow down LLM deployment.

Incorporating LLMs into large enterprises offers tremendous potential, but understanding and addressing these pain points is crucial for achieving success. With the right strategies and proactive management, enterprises can unlock the full value of LLMs while minimizing risks.

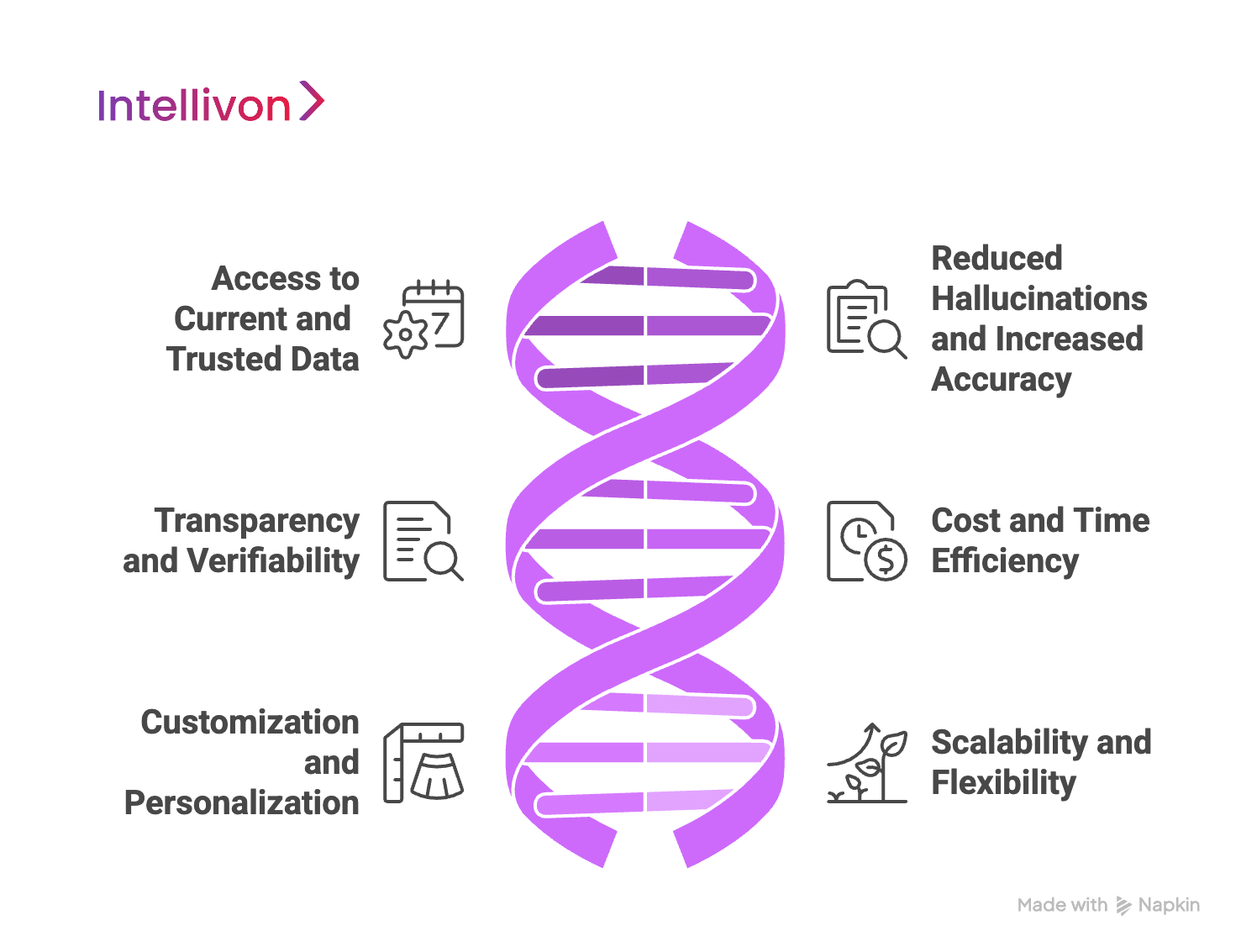

The Role of RAG in Enterprise LLM Adoption

Retrieval-Augmented Generation (RAG) enhances LLMs by combining their generative capabilities with real-time access to external data. Unlike standard LLMs that rely on static datasets, RAG pulls in fresh, verified data from internal and external sources, making the AI more accurate and trustworthy.

1. Access to Current and Trusted Data

RAG enables enterprises to update data in LLM responses continuously. This is a game-changer for businesses, as it ensures that the AI generates timely, accurate, and relevant information without requiring expensive retraining.

For instance, a customer service chatbot can provide answers based on the most current policies or products, rather than relying on outdated training data.

2. Reduced Hallucinations and Increased Accuracy

LLMs sometimes generate outputs that are not based on reality, a phenomenon known as hallucination. RAG reduces this risk by grounding responses in retrieved documents. This not only improves the accuracy of responses but also boosts user trust, critical for enterprise applications like customer support or legal advice.

3. Transparency and Verifiability

RAG systems often include citations or source attributions, making it easier for users to verify AI-generated answers. This transparency is essential for enterprises, particularly in regulated industries that require auditability and compliance. By making the AI’s sources clear, RAG helps organizations meet legal and regulatory requirements like GDPR

4. Cost and Time Efficiency

With RAG, enterprises can avoid frequent and costly LLM retraining. Instead, knowledge bases are updated dynamically, reducing operational costs and speeding up time-to-value. For example, rather than retraining an LLM on new product information, enterprises can simply update their internal database and rely on RAG to pull that data into the AI’s responses.

5. Customization and Personalization

RAG allows businesses to personalize interactions by integrating their specific datasets with the broader knowledge of the LLM. Whether it’s customer support chatbots offering tailored responses or personalized marketing recommendations, RAG enhances customer satisfaction and business outcomes.

6. Scalability and Flexibility

RAG can scale efficiently as the business grows. As new data is added to the knowledge base, RAG adapts without requiring changes to the core LLM. This flexibility is crucial for enterprises that need to continuously evolve their AI systems to meet shifting demands.

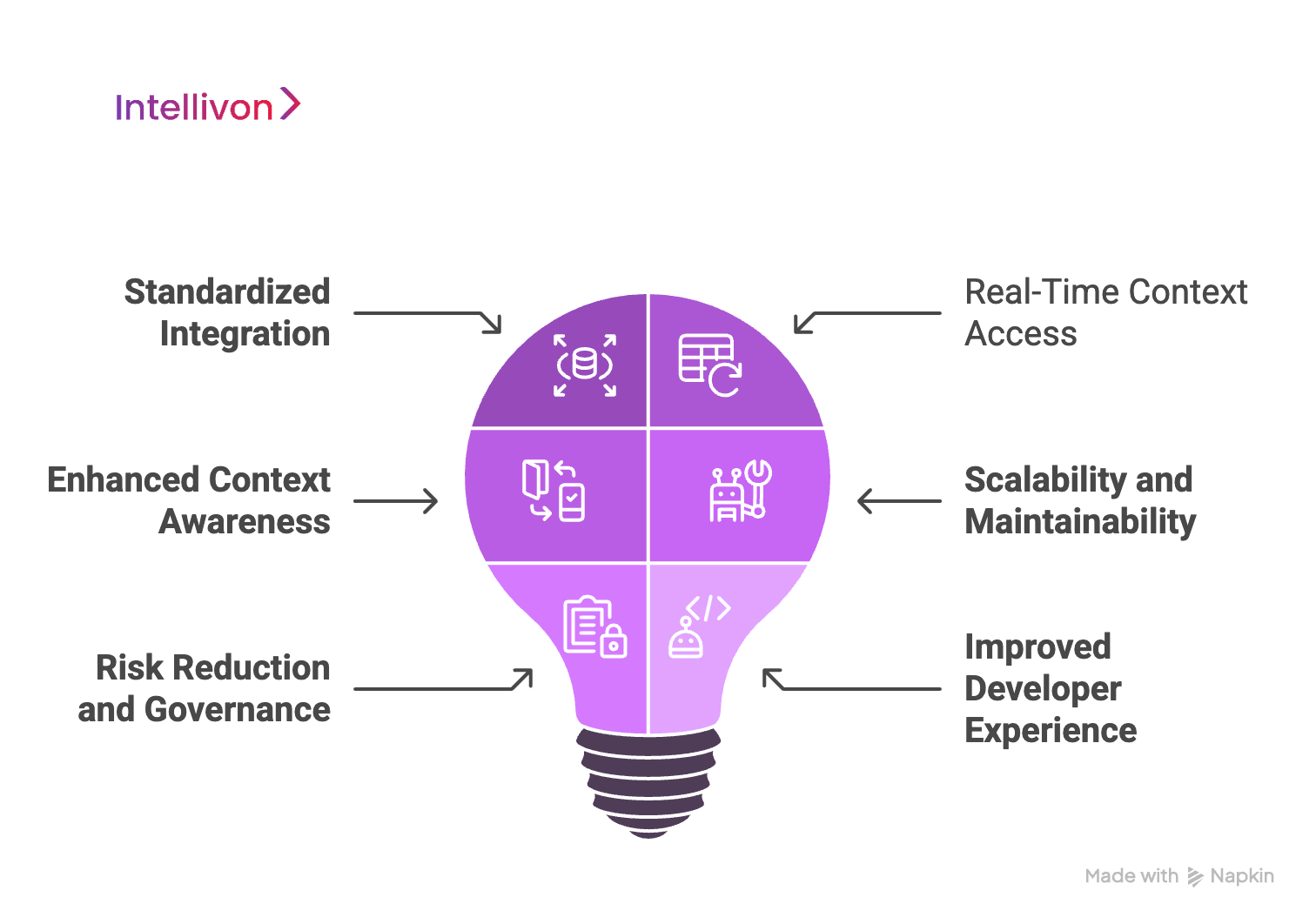

MCP and Its Impact on Enterprise LLM Adoption

The Model Context Protocol (MCP), introduced by Anthropic in late 2024, addresses a critical limitation in traditional LLMs: their isolation from real-time, proprietary data. MCP enables seamless integration between LLMs and external tools, data sources, and systems.

1. Standardized Integration

MCP offers a universal framework for connecting LLMs with enterprise applications. It uses a JSON-RPC interface, which simplifies the connection process. This eliminates the need for custom coding when integrating multiple tools, significantly reducing development time and complexity. Enterprises can now easily connect LLMs with databases, APIs, and other systems without extensive technical resources.

2. Real-Time Context Access

MCP allows LLMs to retrieve and utilize up-to-date business data in real time. This ensures that the AI outputs are relevant and accurate, especially when compared to traditional LLMs that rely on static training datasets. For example, an LLM used for generating legal documents can access the latest case law and regulations, improving its usefulness and compliance.

3. Enhanced Context Awareness

MCP enables LLMs to store and share context across multi-step workflows. This improves the AI’s ability to handle complex tasks that require coordinated action, like multi-agent customer service or multi-stage risk analysis. Unlike traditional, stateless prompt-based systems, MCP ensures that the context is retained across processes, leading to more reliable AI outputs.

4. Scalability and Maintainability

By standardizing the integration of LLMs with enterprise tools, MCP makes it easier for businesses to scale AI systems. As enterprises grow and their AI tool ecosystem expands, MCP ensures that new systems can be added without disrupting existing workflows.

5. Risk Reduction and Governance

MCP supports better governance and compliance by structuring context flow and logging. This structured approach improves transparency and explainability, making it easier for enterprises to comply with regulations like GDPR or HIPAA.

6. Improved Developer Experience

MCP simplifies the developer experience by reducing the need for custom integrations. This results in faster, more efficient AI application development, allowing businesses to deploy AI models quicker and at a lower cost.

Challenges with MCP

While MCP is an excellent tool for LLM adoption, it is still evolving. Some current challenges include:

- Limited application support: Not all enterprise tools are fully compatible with MCP out-of-the-box.

- Single LLM dependency: Enterprises may face challenges if they want to integrate multiple LLMs into the same workflow.

- Need for technical expertise: While MCP simplifies integration, technical knowledge is still required for deployment and optimization.

This is where Intellivon shines. Our vetted experts demonstrate hands-on experience with seamless MCP implementation, ensuring enterprises maximize LLM adoption by providing scalable, secure, and efficient AI-driven solutions tailored to their needs.

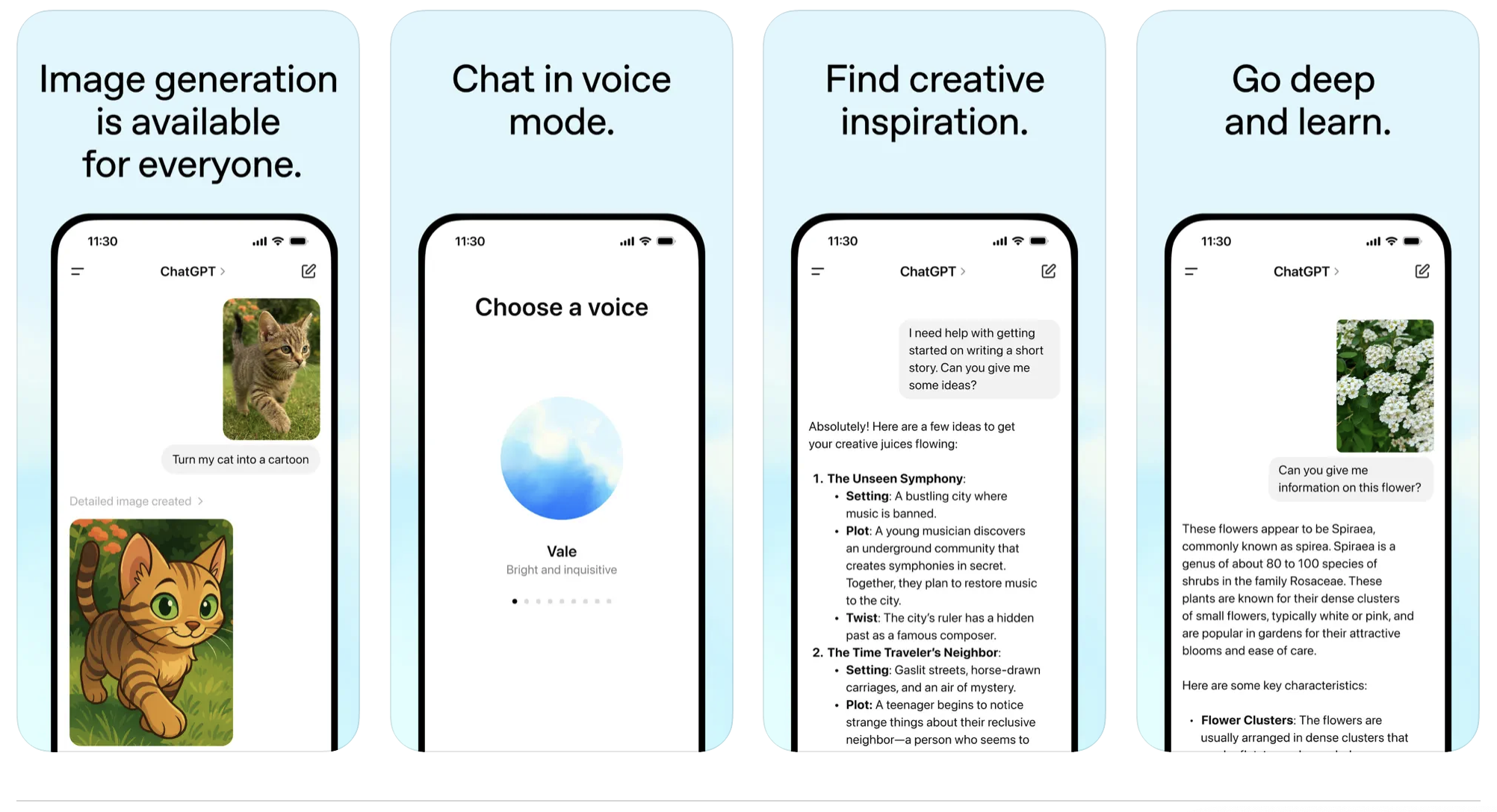

Deep Dive Into OpenAI: The Industry Leader

OpenAI, with its flagship ChatGPT, has rapidly become a leader in the LLM revolution, especially in large enterprises. Its models are designed to meet the demanding needs of corporate environments, with continuous improvements in capabilities and performance.

Key Enterprise Features

OpenAI’s ChatGPT Enterprise has seen significant adoption, with 92% of Fortune 500 companies integrating it within its first year. Notable features include:

- Unlimited High-Speed Access to GPT-4o: Enterprises benefit from access to OpenAI’s most capable models without usage caps, facilitating high-volume workloads.

- Extended Context Windows: With 128,000 token context windows, ChatGPT handles long documents and complex interactions seamlessly.

- Unlimited Advanced Data Analysis: OpenAI’s platform empowers businesses to derive deeper insights from proprietary datasets.

- Internally Shareable Chat Templates: Teams can create standardized templates for consistent communication.

- Scalable Deployment Tools: OpenAI provides tools that scale with the organization’s growth, from small teams to large enterprises.

Security, Privacy, and Compliance

OpenAI has made strides to address key enterprise concerns:

- Data Ownership: OpenAI guarantees that data inputs and outputs are under customer control by default, ensuring privacy.

- Encryption: Industry-standard encryption protocols, such as AES-256 and TLS 1.2, safeguard data.

- Compliance: OpenAI is SOC 2 certified and compliant with GDPR, HIPAA, and CCPA. For healthcare enterprises, a Business Associate Agreement (BAA) is available to ensure HIPAA compliance.

Pricing Models

This pricing model can scale depending on the enterprise’s usage, and OpenAI offers several models, each with varying levels of complexity and pricing. More advanced models like GPT-4 come with higher costs, while simpler models like GPT-3.5 Turbo are more affordable for less complex tasks.

Here’s a detailed breakdown of the pricing for different OpenAI models:

| Model | Price per 1,000 Input Tokens | Price per 1,000 Output Tokens | Ideal Use Cases |

| GPT-4 | $0.03 | $0.06 | Complex tasks, creative content generation, in-depth customer service, research. |

| GPT-3.5 Turbo | $0.0015 | $0.002 | Basic tasks, simple queries, cost-efficient for high-volume use cases. |

| GPT-3 | $0.002 | $0.004 | General-purpose tasks, light workloads, education, and non-critical applications. |

Use Cases for OpenAI in Large Enterprises

OpenAI’s models excel in a variety of enterprise applications. Their versatility allows businesses to improve operational efficiency, enhance customer experiences, and drive innovation. Here are some key use cases for OpenAI in large enterprises:

1. Conversational AI and Virtual Assistants

OpenAI powers sophisticated chatbots and virtual assistants that enhance customer support and internal operations. By providing accurate, context-aware responses, OpenAI reduces the workload on human agents and improves customer satisfaction.

2. Developer-Heavy Environments

In tech-driven industries, OpenAI helps developers by generating code, automating testing, and streamlining development processes. Its ability to assist in software development accelerates time-to-market and reduces manual effort.

3. Fraud Detection and Risk Analysis

OpenAI’s capabilities extend to fraud detection and risk analysis. By processing vast amounts of transaction data and detecting anomalies, it helps financial institutions identify fraud and mitigate risks effectively.

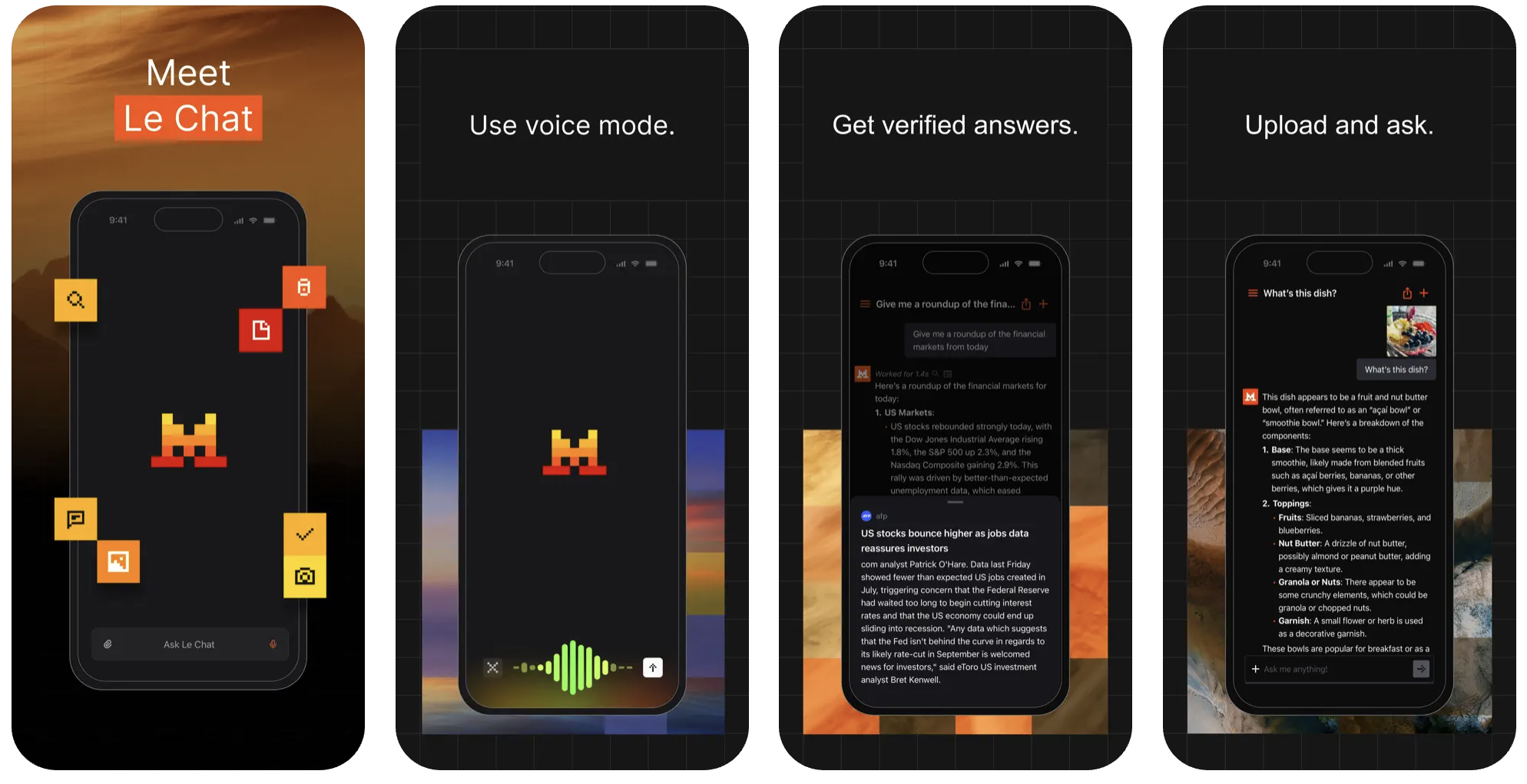

Deep Dive Into Mistral: The Open-Weight Advantage

Mistral AI has quickly emerged as a key player in the enterprise LLM landscape. Known for its focus on open-weight models, data sovereignty, and competitive pricing, Mistral offers enterprises a unique value proposition, particularly for organizations seeking control, customization, and compliance.

Key Enterprise Features of Mistral AI

Mistral offers a range of models tailored to various enterprise needs. Its open-weight approach provides flexibility and control, allowing enterprises to deploy models in ways that suit their specific requirements.

1. Full Deployability and Control

Mistral’s models can be deployed locally, on private cloud infrastructure, or through Mistral’s API endpoints. This gives businesses more transparency and control over their AI infrastructure. For compliance-heavy organizations, this control is critical, ensuring that data privacy standards are met.

2. Vertical-Specific Tools

Mistral offers specialized models for different industries. For example, Magistral is designed for legal and medical applications, while Codestral focuses on code generation. These vertical-specific tools provide more precise and effective solutions, enabling enterprises to meet the unique demands of their sectors.

3. Comprehensive Customization Options

Enterprises can customize Mistral’s models using advanced fine-tuning techniques like Low-Rank Adaptation (LoRA) and Reinforcement Learning with Human Feedback (RLHF). These methods allow businesses to tailor the models to their specific datasets and industry nuances, enhancing model performance.

4. Developer-Centric Design

Mistral empowers developers with a suite of tools, including Software Development Kits (SDKs), orchestration tools, and an API-first design. These features streamline integration, making it easier for enterprises to build custom AI agents and enhance their AI-driven workflows.

Security, Privacy, and Compliance Posture

Mistral AI is deeply committed to data sovereignty and compliance, which are essential for enterprises operating in regulated sectors. Mistral’s approach ensures that businesses maintain control over their data while adhering to industry regulations.

1. Sovereign AI and Regional Data Centers

Through a strategic partnership with NTT DATA, Mistral focuses on developing sovereign AI solutions hosted in regional data centers. This enables Mistral to meet stringent data privacy and security requirements, especially for customers in regions like Europe and Asia-Pacific.

2. Private Deployments

Mistral’s models can be deployed in private cloud environments or on-premises data centers, allowing businesses to control data flows and ensure compliance with regulations like GDPR and the AI Act.

3. Data Locality and Model Transparency

By utilizing NTT DATA’s infrastructure, Mistral guarantees secure processing and model operation with strict locality and transparency assurances. This approach contrasts with more closed, proprietary platforms, ensuring better data control and compliance.

Pricing Models

Mistral sets itself apart with a flexible pricing model that benefits businesses seeking both cost efficiency and transparency. This approach is particularly appealing for high-volume or sensitive workloads.

By providing open-weight models for local hosting, Mistral allows enterprises to control costs. This is especially valuable for high-volume workloads where per-token API calls can quickly add up. Businesses can avoid the costs of continuous API usage by hosting models locally. For hosted models, Mistral Large costs $0.4 per million input tokens and $2 per million output tokens. Codestral, the specialized model, is even more affordable at $0.3 per million input tokens and $0.9 per million output tokens.

| Model | Input Price (per 1M tokens) | Output Price (per 1M tokens) | Use Case |

| Mistral Large 24.11 | $2.00 | $6.00 | High-complexity tasks, advanced reasoning |

| Pixtral Large | $2.00 | $6.00 | Multimodal tasks (vision + language), document understanding |

| Mistral Small | $0.10 | $0.30 | Translation, summarization, lightweight tasks |

| Codestral | $0.20 | $0.60 | Code generation, programming assistance |

| Mistral NeMo | $0.15 | $0.15 | Code-specific tasks, optimization |

| Mistral Saba | $0.20 | $0.60 | Custom-trained models for specific markets and customers |

| Mistral 7B | $0.25 | $0.25 | Fast-deployed, customizable 7B transformer |

| Mixtral 8x7B | $0.70 | $0.70 | Sparse Mixture-of-Experts (SMoE), efficient edge model |

| Mixtral 8x22B | $2.00 | $6.00 | High-performance open model |

| Ministral 8B 24.10 | $0.10 | $1.00 | On-device use cases, efficient processing |

| Ministral 3B 24.10 | $0.04 | $0.04 | Edge model, cost-effective deployment |

| Pixtral 12B | $0.15 | $0.15 | Vision-capable small model |

| Mistral Embed | $0.10 | N/A | State-of-the-art text representation extraction |

| Mistral Moderation | N/A | $0.10 | Content moderation classifier |

Enterprise Use Cases

Mistral’s flexibility, transparency, and cost-effectiveness make it an ideal choice for enterprises seeking full control over their LLM deployments. Here are some use cases and ROI examples:

1. Software Development and Code Generation

Mistral’s Codestral model is a powerful tool for enterprises involved in software development. It assists with AI-assisted coding, automated documentation, and code testing, improving developer productivity and accelerating development cycles.

2. Custom AI Assistants

Enterprises can use Mistral’s open models to build task-specific AI assistants. These AI agents can be tailored to handle specific workflows or customer needs, providing businesses with more control over their AI operations.

3. Vision and Text Workflows

Mistral’s Pixtral model supports tasks like document parsing, OCR (Optical Character Recognition), and visual question answering. These capabilities are particularly beneficial for industries like logistics, legal, and media, where processing and interpreting visual data is critical.

4. Financial Services and Risk Management

Mistral is used by leading financial institutions like BNP Paribas and AXA for text generation, fraud detection, and risk management. Its ability to comply with security standards makes it ideal for highly regulated industries.

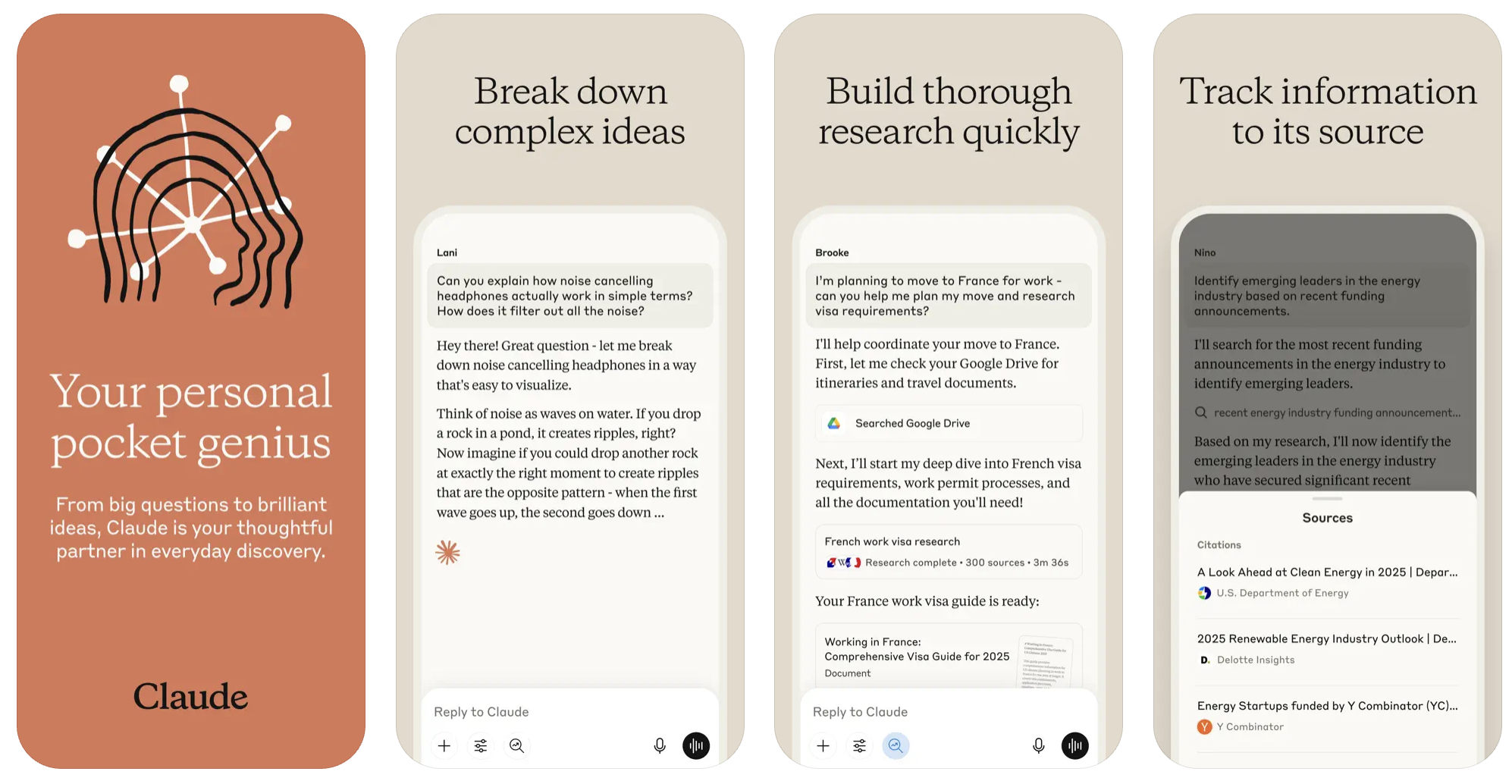

Deep Dive Into Claude: Ethical AI

Claude AI, developed by Anthropic, is rapidly becoming a standout choice for enterprises looking for ethical AI. Its strong focus on AI safety, robust analytical reasoning, and extensive context windows makes it ideal for high-stakes applications where accuracy, trustworthiness, and ethical considerations are paramount.

Key Enterprise Features of Claude

Claude is built with Constitutional AI principles, ensuring ethical guidelines are embedded directly into the AI’s design. This makes it particularly well-suited for enterprises where safe, helpful, and honest AI interactions are critical.

1. Analytical Powerhouse

Claude excels in analytical reasoning and complex document processing, making it perfect for industries with strict compliance requirements, such as finance and healthcare. It can handle extensive data sets and generate insights from large volumes of information.

2. Extensive Context Window

Claude’s expanded context window allows it to process and reference vast amounts of data, enabling it to manage complex tasks. For example, it can process the equivalent of 100 30-minute sales transcripts, 15 full financial reports, or 100,000 lines of code. This extensive memory and contextual understanding give Claude superior abilities for deep analysis.

3. Integration Ecosystem

While Claude may have fewer third-party integrations than OpenAI, it still boasts an extensive integration ecosystem with 1,000+ business applications. This growing capability ensures businesses can connect Claude to existing enterprise tools, enhancing its utility for a variety of workflows.

Security, Privacy, and Compliance Posture

Claude was built with enterprise security and compliance in mind. Its focus on ethical AI aligns with stringent industry regulations, offering enterprises peace of mind when deploying AI solutions.

1. Constitutional AI

Claude’s foundational principle, Constitutional AI, ensures ethical behavior by preventing harmful or biased outputs. This principle is critical for organizations in highly regulated industries where AI accountability is essential.

2. Data Privacy

Claude guarantees data privacy by not using conversations for model training unless explicitly opted in. For Claude for Work, this means that data inputs and outputs remain confidential and are not used to improve the model unless the user permits it.

3. Compliance Readiness

Claude’s architecture is designed with compliance in mind, particularly for industries like financial services and healthcare. It supports HIPAA compliance and other regulatory requirements, ensuring that businesses meet the legal standards in their respective sectors.

4. Enterprise-Grade Controls

Claude offers enterprise-grade security features, such as Single Sign-On (SSO), role-based access control, and SCIM for automated user provisioning. These features ensure secure access and help businesses manage user roles and permissions efficiently.

Pricing Models

Claude offers a tiered pricing structure designed to meet the varying needs of businesses, from small startups to large enterprises. Here’s an overview of Claude’s pricing options:

| Plan | Price (Annual Billing) | Key Features | Ideal Use Case |

| Free | $0 | Limited access to basic features, 5 chat sessions/day | Small businesses or testing purposes |

| Pro | $17/month | Full access to features, 100 chat sessions/day | Daily productivity and small teams |

| Max | $100/month (starting) | 5-20x more usage than Pro, full API access | Large enterprises with high demands |

| Enterprise | Custom Pricing | Tailored solutions for large-scale deployment | Custom enterprise applications |

While Claude’s pricing is tiered, its large context window and advanced reasoning abilities can help reduce costs. By solving complex problems more efficiently, enterprises can minimize the number of API calls and avoid relying on simpler, more expensive models for each task.

Ideal Enterprise Use Cases

Claude’s strengths in analytical rigor, safety-conscious design, and extensive context windows make it ideal for data-intensive and complex enterprise applications.

1. Financial Services

Claude is highly effective for financial applications, including financial modeling, scenario analysis, and document processing. For instance, major banks use Claude to generate financial models from natural language, process S-1 filings, and analyze earnings call transcripts.

2. Healthcare

Claude is designed to meet HIPAA compliance, making it an excellent choice for healthcare organizations focused on patient privacy and medical research. Claude’s ability to analyze complex medical documents and offer accurate insights helps improve decision-making in healthcare.

3. Legal and HR

Claude is also ideal for legal document drafting and HR policy analysis, where avoiding biases and ensuring fairness is essential. It helps businesses comply with industry regulations while efficiently handling complex document workflows.

4. Code Analysis

Claude’s Claude Code tool has seen tremendous growth in the developer community. It allows enterprises to automate code generation and code review, saving time and increasing productivity. Claude Code’s adoption has grown by 300%, with a 5.5x run-rate revenue increase.

Strategic Approach to Choosing the Right LLM for Your Enterprise

Selecting the optimal LLM for a large enterprise is a strategic decision that involves more than just raw performance metrics. To ensure an informed decision, it’s crucial to assess the following critical enterprise-relevant criteria when comparing different LLMs.

1. Model Capabilities & Performance

When choosing the right LLM, assessing capabilities and performance is key. The most suitable model will depend on the specific tasks, complexity, and workload your enterprise requires.

| LLM | Strengths | Best For |

| OpenAI | Offers best-in-class general-purpose language models, particularly GPT-4o, for creative content generation, conversational AI, and developer productivity. | Creative content generation, conversational AI, and developer productivity. |

| Mistral AI | Demonstrates impressive performance, especially in multilingual reasoning, code generation (Codestral), and multimodal document processing (Pixtral). | Multilingual tasks, code-heavy workloads, and multimodal document processing. |

| Claude AI | Stands out for superior analytical reasoning, the ability to process complex documents, and safety-conscious applications, especially in legal, HR, and financial sectors. | Legal, HR, financial analysis, and industries requiring ethical AI behavior. |

2. Security & Data Privacy

Security and data privacy are non-negotiable for enterprises, particularly those operating in regulated industries. It’s essential to choose an LLM that offers the necessary data protection features to safeguard sensitive information.

| LLM | Security Features | Data Privacy |

| OpenAI | Strong encryption (AES-256 at rest, TLS 1.2+ in transit), SOC 2, HIPAA BAA, GDPR, CCPA compliance. | Does not use customer data for training unless opted in. |

| Mistral AI | Emphasizes data sovereignty, offering local/private deployment options and regional data centers. | Full control over data locality and compliance with GDPR and AI Act. |

| Claude AI | Built with Constitutional AI principles ensuring ethical behavior. | Data is not used for training by default, offering strong data privacy. |

3. Scalability & Integration

Enterprises need scalable solutions that integrate well with existing systems. Whether you’re scaling up for increased data volume or integrating with complex workflows, the LLM should meet these evolving needs.

| LLM | Scalability Features | Integration Ecosystem |

| OpenAI | Robust API ecosystem, scalable deployment tools, integrated with Microsoft Azure. | Wide integration support with various third-party enterprise tools. |

| Mistral AI | Flexible deployment options (local, private cloud, API), modular architecture. | Strong developer-centric tools for easy integration into existing systems. |

| Claude AI | Scalable API infrastructure with proven scalability and growing enterprise integration ecosystem. | Supports integrations with over 1,000 business applications. |

4. Cost-Effectiveness

Choosing an LLM with an appropriate pricing model is crucial for enterprise budget management. Enterprises must weigh cost-effectiveness with the model’s capabilities and the potential ROI.

| LLM | Pricing Structure | Cost Optimization |

| OpenAI | Usage-based pricing (per token), more expensive at scale. | Custom enterprise plans available for high-volume use, optimizing costs. |

| Mistral AI | Competitive pricing, often more cost-effective than other models. | Open-source models allow for cost control by hosting locally. |

| Claude AI | Tiered pricing (Free, Pro, Max) and custom enterprise plans. | Large context windows reduce the need for multiple API calls, lowering costs. |

5. Customization & Control

Customization and control over the AI’s training, deployment, and fine-tuning are critical for enterprises with specific requirements.

| LLM | Customization Features | Control Over AI |

| OpenAI | Fine-tuning available, but some limitations in customization. | Offers APIs and flexibility, but less control compared to open models. |

| Mistral AI | Full customization, fine-tuning support (LoRA, RLHF). | Complete control over deployment and customization. |

| Claude AI | Securely extend knowledge with company data for customized use. | Ability to securely modify and extend the model for enterprise needs. |

6. Governance Support

Effective governance ensures that your LLM operates in compliance with regulations and organizational standards.

| LLM | Governance Features | Compliance and Management |

| OpenAI | Admin console, SSO, audit logs, data retention controls. | Comprehensive compliance support for GDPR, CCPA, and HIPAA. |

| Mistral AI | Local deployability, transparent operations for compliance. | Secure private deployments and governance support for sensitive data. |

| Claude AI | Constitutional AI for ethical guidelines, offers audit logs, role-based access, SCIM. | Audit logs, custom data retention policies, and full enterprise controls. |

Guidance on Matching LLM to Enterprise Needs

Selecting the right LLM is driven by an enterprise’s specific industry, regulatory landscape, data sensitivity, and IT infrastructure. Here’s a guide to matching the LLM to your business’s needs:

| LLM | Best For | Ideal Use Case |

| OpenAI | Organizations prioritizing best-in-class general models and a vast ecosystem for customer-facing content generation, conversational AI, and developer-heavy environments. | Customer-facing content generation, conversational AI, and developer-heavy environments requiring powerful, flexible solutions. |

| Mistral AI | Best suited for enterprises that prioritize data sovereignty, maximum control over AI infrastructure, and cost-efficiency through open-source models. | Highly regulated or sensitive environments that require strict compliance with privacy laws and a high degree of model control. |

| Claude AI | The preferred choice for use cases demanding high trust, analytical rigor, and safety-conscious AI behavior, especially when processing large, complex, and sensitive documents. | Financial services, healthcare, legal, and HR, where trust, accuracy, and ethical AI are critical for sensitive and complex documents. |

The right LLM is strategic and should align with your enterprise’s industry, data sensitivity, IT infrastructure, and regulatory landscape. At Intellivon, we ensure a ‘fit-for-purpose’ approach that ensures businesses select the model that best meets their unique needs, balancing performance, security, cost, and compliance.

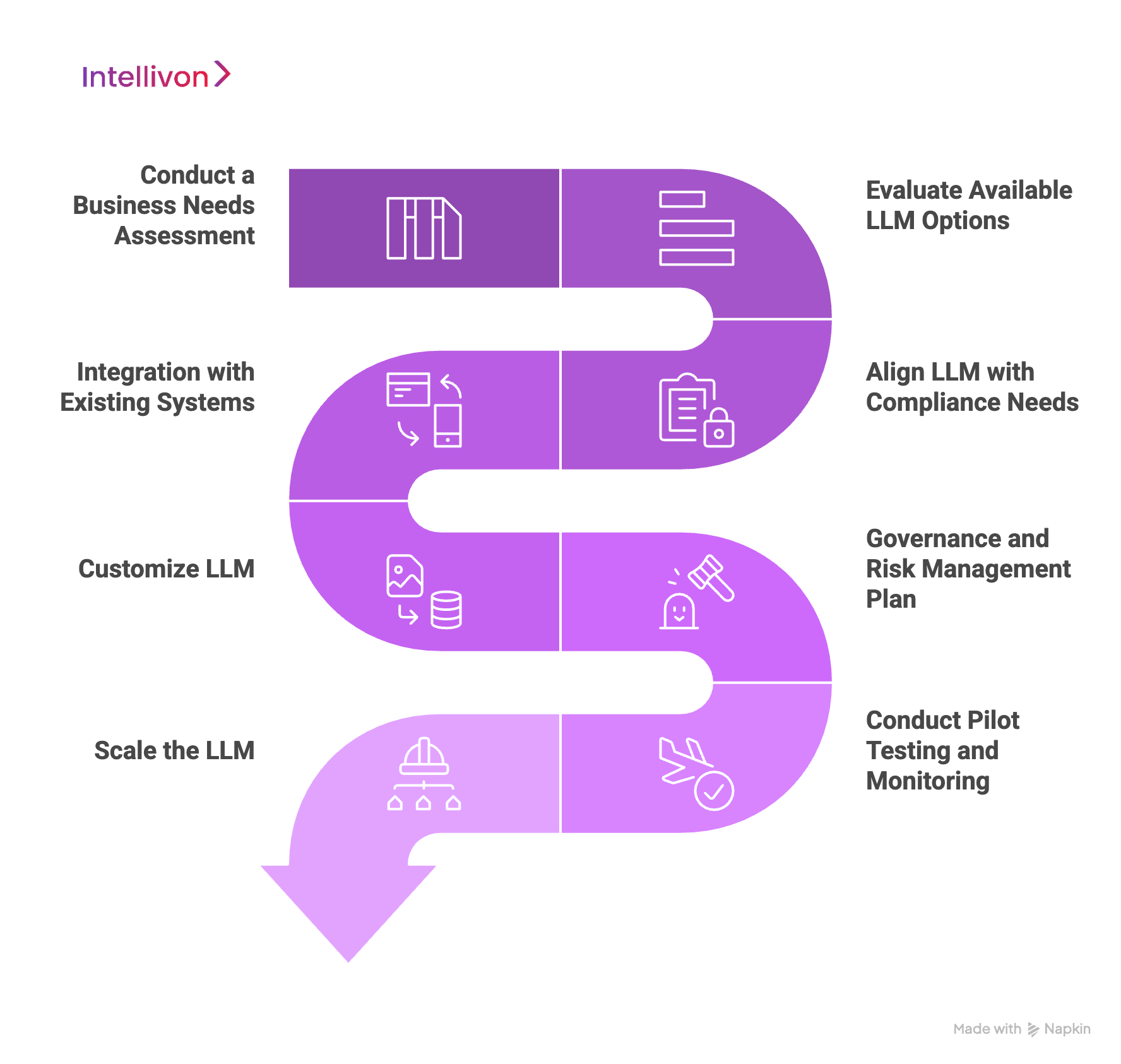

Our Strategic Framework for Enterprise LLM Adoption

Adopting an LLM within an enterprise requires a structured, strategic approach to ensure successful implementation. The following 8-step framework outlines the key stages of LLM adoption, where Intellivon directly implements each stage, ensuring that your enterprise maximizes the value of LLM technology while aligning with your business objectives.

1. Conduct a Business Needs Assessment

The first step in LLM adoption is to clearly identify your organization’s specific business needs. Intellivon implements this step by collaborating closely with your team to define clear use cases and strategic objectives. Whether you are automating customer service, enhancing data analysis, or streamlining internal workflows, we prioritize the areas that will benefit the most from LLM integration.

Through this assessment, we ensure that the selected LLM aligns with your long-term goals and business priorities.

2. Evaluate Available LLM Options

After understanding your business needs, we evaluate the available LLMs to identify the model that best suits your technical requirements and strategic objectives. We directly compare models like OpenAI, Mistral AI, and Claude AI based on performance, cost, data privacy, and compliance. By considering your organization’s unique context, we ensure that you select the model that delivers the greatest value, offering the most suitable performance for your tasks.

3. Align LLM with Compliance Needs

Compliance is a critical consideration, especially for enterprises in regulated sectors like healthcare, finance, and legal services. Intellivon ensures that the selected LLM meets your organization’s compliance and regulatory requirements.

We verify that the model complies with laws such as GDPR, HIPAA, and CCPA and includes audit logs for transparency. By handling these legal aspects, we ensure that your enterprise can confidently adopt the LLM without exposing it to legal or compliance risks.

4. Integration with Existing Systems

Successfully integrating the LLM into your existing systems is essential for maximizing its value. Intellivon implements this step by working closely with your IT team to ensure seamless integration with CRM, ERP, customer service tools, and other critical systems.

We handle the entire process, from identifying the systems to be integrated to ensuring smooth data synchronization and API integration. This minimizes disruption to your operations and ensures that the LLM fits into your existing infrastructure without causing inefficiencies.

5. Customize LLM

To maximize the effectiveness of the LLM, it must be customized to suit your enterprise-specific needs. Intellivon directly implements this step by fine-tuning the LLM with your proprietary data.

Whether it’s improving performance for customer support, marketing, or data analysis, we ensure the LLM provides contextually relevant and accurate solutions for your business. By integrating internal data and fine-tuning the model, we ensure that the LLM performs optimally in your specific environment.

6. Governance and Risk Management Plan

Effective governance is critical to prevent issues such as bias, data breaches, and ethical concerns. We implement a comprehensive governance framework that includes policies for data access, usage, and compliance.

We ensure that the LLM’s usage is ethically sound by setting up role-based access controls, conducting regular audits, and continuously monitoring the model’s behavior. This step guarantees that your LLM complies with both internal and external regulations.

7. Conduct Pilot Testing and Monitoring

Before scaling the LLM across the organization, we run a pilot program to assess its performance in real-world conditions. During the pilot, we monitor the LLM’s accuracy, efficiency, and user satisfaction. We collect feedback from the initial users to identify any issues or opportunities for optimization. We ensure that any necessary adjustments are made before the LLM is fully deployed, ensuring it performs as expected.

8. Scale the LLM

Once the pilot program has proven successful, Intellivon manages the scaling of the LLM across the enterprise. We ensure that the infrastructure is capable of handling increased data flow and user volume as the LLM is rolled out to other departments.

We also provide ongoing training and support to ensure that employees can leverage the LLM effectively, optimizing its value across the organization.

By assessing your business needs, regulatory requirements, and technical infrastructure, we guide you through the entire process, from selecting the right model to scaling it across your enterprise. Our approach ensures that you maximize the value of AI while minimizing risks and ensuring alignment with your organizational goals.

Conclusion:

Successful LLM adoption is key to unlocking the full potential of AI for your enterprise. By following a strategic framework and implementing strong LLM governance, businesses can integrate these models effectively, ensuring compliance, security, and ethical AI use.

The right approach maximizes efficiency, drives innovation, and ensures long-term success.

Ready to Unlock the Power of AI for Your Enterprise?

With over 11 years of enterprise AI expertise and 500+ successful deployments globally, Intellivon is your trusted partner in selecting, implementing, and governing the right LLM solution for your business. From seamless AI model integration to enhanced data-driven decision-making, we help enterprises move from fragmented AI solutions to scalable, compliant, and intelligent systems.

What Sets Intellivon Apart?

- Custom-Tailored LLM Solutions: We select and fine-tune LLMs to suit your enterprise’s unique needs, ensuring optimal performance for your specific use cases, from customer service automation to content generation.

- Comprehensive AI Integration: Our solutions integrate seamlessly with your existing IT infrastructure, empowering your enterprise to leverage AI without disrupting operations.

- Compliance-First Architecture: Every LLM deployment is fully compliant with industry standards and regulations, ensuring data security, privacy, and adherence to legal frameworks.

- Scalability at Every Stage: Whether you’re starting small or scaling rapidly, our models grow with your business, providing ongoing value and adaptability.

- Continuous Monitoring and Optimization: Intellivon ensures that your LLM performs at its best, continuously optimizing to meet evolving needs and delivering long-term ROI.

Book your free strategy call with an Intellivon expert today, and get:

- A detailed review of your current AI strategy and infrastructure

- LLM model selection and deployment strategy tailored to your business goals

- A compliance and risk management checklist specific to your industry

- A customized implementation and optimization roadmap for success

Ready to transform your enterprise operations? Scale with Intellivon.

FAQs

Q1: How do I choose between OpenAI, Mistral, and Claude for my enterprise?

A1: Choose based on your enterprise’s needs. OpenAI excels in scalability and general-purpose tasks, Mistral offers cost-effective solutions and full control over data, and Claude provides strong ethical AI with analytical reasoning, ideal for regulated industries like finance and healthcare.

Q2: What is RAG and how does it improve LLM performance?

A2: RAG (Retrieval-Augmented Generation) improves LLM performance by incorporating real-time, verified data from external sources into the model’s responses. It enhances the accuracy of results, reduces hallucinations, and ensures responses are relevant, fact-based, and aligned with business requirements.

Q3: How do enterprises ensure LLM compliance and governance?

A3: Enterprises ensure LLM compliance and governance by implementing role-based access controls, conducting regular audits, adhering to ethical guidelines, and ensuring strict compliance with legal frameworks like GDPR, HIPAA, CCPA, and specific industry regulations for data privacy and security.

Q4: What are the strengths and weaknesses of OpenAI, Mistral, and Claude for large businesses?

A4: OpenAI offers unparalleled versatility and scalability for diverse applications, Mistral provides cost-effective and customizable solutions with strong data sovereignty, while Claude excels in ethical AI, analytical reasoning, and complex document processing but may lack in broad third-party integrations.

Q5: How can Intellivon help with LLM integration and risk management?

A5: Intellivon specializes in seamless LLM integration, ensuring compatibility with your existing systems. We manage risk through comprehensive governance frameworks, ensuring compliance with industry standards, reducing bias, securing sensitive data, and providing ongoing optimization to maximize long-term value and mitigate risks.