Clinicians are spending more time documenting care than providing it. Hours are lost to clinical charting and corrections, which leads to longer workdays, fewer appointment slots, and frustrated clinical teams. Most leaders realize that documentation has hit a breaking point, but fixing it requires more than basic speech-to-text. Real-time clinical note generation works only when models truly understand medical language, format notes into SOAP structures, recognize specialty-specific terms, and integrate safely with EHR systems. In other words, accuracy and value depend on how these models are trained.

Intellivon’s expertise in training these models comes from years of building clinical-grade, compliant AI systems for health networks, telemedicine platforms, and digital health companies. We build training processes around actual clinician–patient conversations, EHR context, medical vocabularies, and billing needs. In this post, we explore how we train these models from the ground up for real-time clinical note generation.

Key Takeaways of the Clinical Note Generation Market

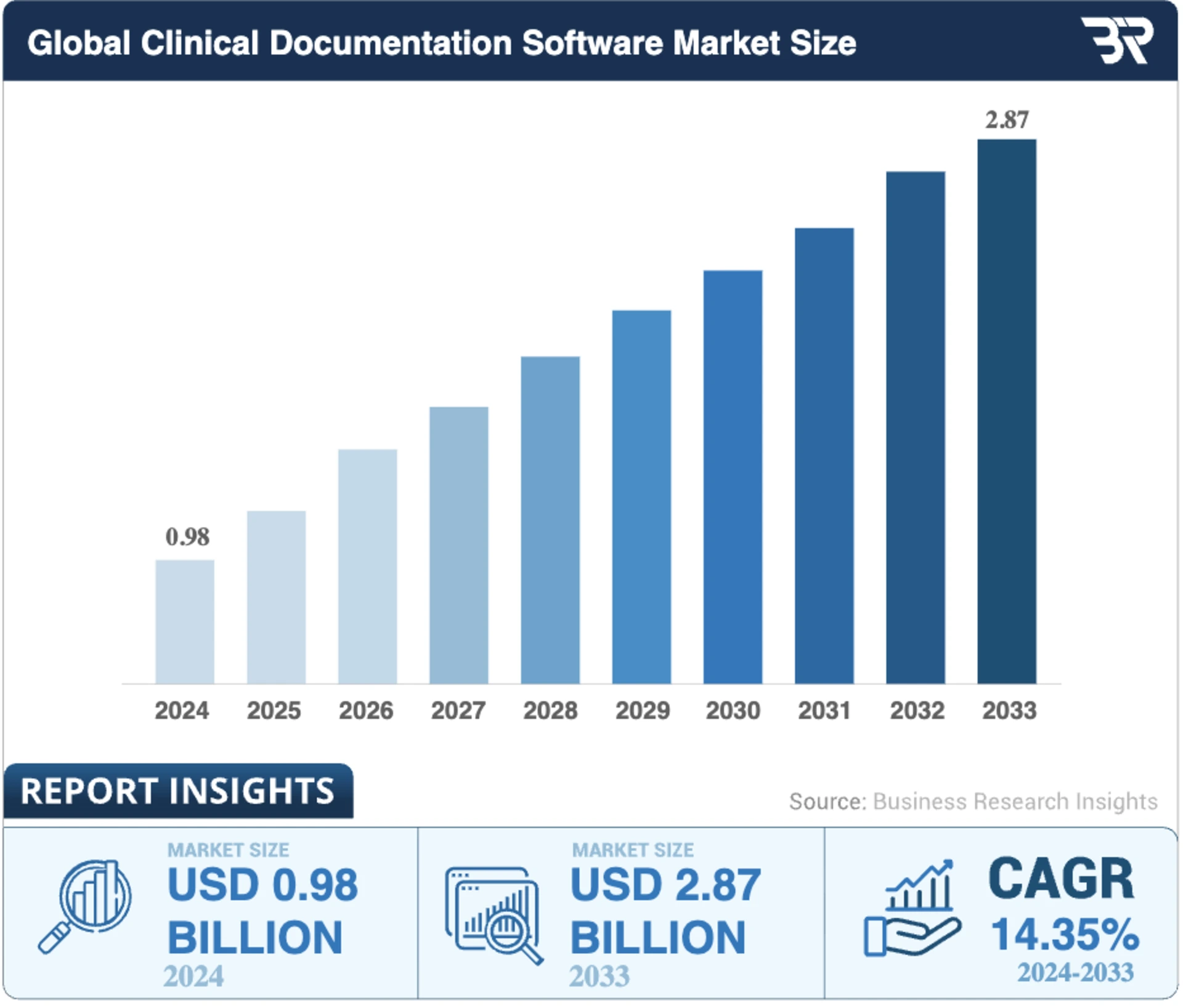

The global market for AI-driven clinical documentation is scaling quickly. The Clinical Documentation Software Market, valued at USD 0.98 billion in 2024, is projected to reach USD 1.12 billion in 2025 and USD 3.28 billion by 2033, a compound annual growth rate (CAGR) of 14.35% from 2025 to 2033. Growth is even stronger in adjacent categories.

The NLP in Healthcare and Life Sciences market, which includes clinical note generation, is expected to rise from $5.18 billion in 2025 to $16.01 billion in 2030, with a 25.3% CAGR.
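Both growth rates follow the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. As a quick sanity check, this short Python sketch recomputes them from the start and end values cited in this section; both land within rounding of the published 14.35% and 25.3%.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Clinical documentation software: USD 1.12B (2025) to USD 3.28B (2033), 8 years
doc_software = cagr(1.12, 3.28, 8)
# NLP in healthcare and life sciences: $5.18B (2025) to $16.01B (2030), 5 years
nlp_healthcare = cagr(5.18, 16.01, 5)

print(f"Clinical documentation software: {doc_software:.2%}")
print(f"NLP in healthcare and life sciences: {nlp_healthcare:.2%}")
```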

Key Insights:

- In a 2025 survey, 100% of health systems reported activity toward adopting ambient AI scribe technology, and 53% reported high success in live deployments.

- Large health systems like Kaiser Permanente, UCSF, and Cleveland Clinic have implemented AI-powered note automation to reduce manual entry and speed up clinical workflows.

- Automating real-time documentation can reduce clinician workload by 60% on average, improving productivity and lowering burnout.

- A primary care clinic reported a 94.13% ROI and $291,200 in annual savings from AI-enabled note automation, with a breakeven period of just over six months.

- Clinics using AI scribes freed 2–3 hours per physician per day, enabling five additional patient visits daily and generating $195,000 in new annual revenue.

- Multiple implementation pilots show measurable time savings per note, reducing administrative load and allowing clinicians to focus on patient care.

Real-time clinical note generation delivers operational savings, faster revenue capture, stronger compliance, and improved patient throughput. It replaces manual documentation with a scalable system that enhances accuracy, reduces risk, and gives clinicians time back, creating both financial and clinical value across the organization.

What Is Real-Time Clinical Note Generation?

Real-time clinical note generation is an AI-driven approach that listens to the clinician–patient conversation and produces a structured medical note during the encounter. It does not wait until the visit ends.

The system interprets speech, understands clinical context, summarizes the interaction, and builds a sign-ready SOAP or Assessment & Plan note in seconds. The goal is to reduce manual typing, eliminate after-hours charting, and help clinicians spend more time with patients.

How It Differs From Traditional AI Note Generation

Traditional AI note generation works after the appointment. Clinicians record a conversation or dictate a summary, and the system produces a draft later. This still leaves gaps in workflow, and clinicians must review, correct, and finalize notes long after the patient has left.

Real-time systems operate during the encounter. They capture speech, convert it into structured text, and produce medically aligned notes that can be reviewed and signed before the next patient. The experience feels more like having a digital clinical scribe than a transcription tool.

| Feature | Traditional AI Note Generation | Real-Time Clinical Note Generation |
|---|---|---|
| When notes are produced | After the visit | During the visit |
| Input | Uploaded audio or dictation | Live clinician–patient speech |
| Output | Unstructured or partially structured notes | Fully structured SOAP / HPI / A&P |
| Speed | Minutes or hours later | Sub-second to seconds |
| Editing workload | High | Low |
| Integration | Often manual copy-paste | Direct EHR writeback |
| Real clinical value | Moderate | High |

How It Works

Real-time note generation runs quietly in the background of a clinical encounter. While the clinician focuses on the patient, the system listens, interprets, and assembles a structured note that is ready for review by the time the visit ends.

1. Capture the Conversation

The process begins when microphones in the exam room or the telehealth platform record the interaction. Voice activity detection (VAD) passes audio downstream only when someone is speaking, which limits how much audio is processed, supports privacy, and reduces noise.
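Production VAD is usually a trained model, but the core idea can be shown with a simple energy threshold. This is a minimal sketch under stated assumptions (16 kHz mono audio as floats in [-1, 1], 20 ms frames, and a hand-picked threshold, all hypothetical), not how any particular product implements VAD: it flags frames whose RMS energy crosses the threshold and forwards only those frames to speech recognition.

```python
import math
from typing import List, Sequence

FRAME_SIZE = 320      # assumption: 20 ms frames at a 16 kHz sample rate
THRESHOLD = 0.02      # hypothetical RMS threshold; would be tuned per room and microphone


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square energy of one frame of samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def detect_speech_frames(samples: Sequence[float], frame_size: int = FRAME_SIZE,
                         threshold: float = THRESHOLD) -> List[bool]:
    """One flag per complete frame: True where the energy suggests someone is speaking."""
    return [
        frame_rms(samples[start:start + frame_size]) >= threshold
        for start in range(0, len(samples) - frame_size + 1, frame_size)
    ]


def speech_only(samples: Sequence[float], frame_size: int = FRAME_SIZE,
                threshold: float = THRESHOLD) -> List[float]:
    """Drop silent frames so only speech is forwarded to the ASR stage."""
    kept: List[float] = []
    for i, is_speech in enumerate(detect_speech_frames(samples, frame_size, threshold)):
        if is_speech:
            kept.extend(samples[i * frame_size:(i + 1) * frame_size])
    return kept


if __name__ == "__main__":
    silence = [0.0] * FRAME_SIZE
    tone = [0.1 * math.sin(2 * math.pi * 440 * n / 16000) for n in range(FRAME_SIZE)]
    print(detect_speech_frames(silence + tone + silence))  # [False, True, False]
```

A fixed energy threshold is easy to reason about but struggles with loud rooms, which is one reason trained VAD models are the norm in clinical settings.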

2. Transcribe Speech

In this step, a medical automatic speech recognition (ASR) engine converts spoken words into text within milliseconds. It handles clinical terminology, accents, interruptions, and natural speech patterns. It also adds punctuation and speaker labels, so the text reads like a proper transcript rather than an unbroken stream of words.
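Speaker labels usually come from a separate diarization step whose output is aligned with the ASR words. As a minimal sketch, assuming the ASR engine returns punctuated words with start times and a diarization label such as "SPK_0" (a hypothetical format), consecutive words from the same speaker can be grouped into role-labeled turns:

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Word:
    text: str        # punctuated token from the ASR engine
    start: float     # seconds from the start of the encounter
    speaker: str     # diarization label, e.g. "SPK_0" (hypothetical format)


def build_turns(words: List[Word], roles: Dict[str, str]) -> List[str]:
    """Group consecutive words from the same speaker into role-labeled transcript turns."""
    turns: List[str] = []
    speaker: Optional[str] = None
    buffer: List[str] = []

    def flush() -> None:
        # Emit the words collected so far as one turn, using the role name if known.
        if buffer and speaker is not None:
            turns.append(f"{roles.get(speaker, speaker)}: {' '.join(buffer)}")

    for word in sorted(words, key=lambda w: w.start):
        if word.speaker != speaker:
            flush()
            buffer = []
            speaker = word.speaker
        buffer.append(word.text)
    flush()
    return turns


words = [Word("Any", 0.0, "SPK_0"), Word("chest", 0.3, "SPK_0"), Word("pain?", 0.6, "SPK_0"),
         Word("No,", 1.2, "SPK_1"), Word("none.", 1.5, "SPK_1")]
print(build_turns(words, {"SPK_0": "Clinician", "SPK_1": "Patient"}))
```

The role mapping (which diarization label is the clinician) is assumed to be known here; in practice it has to be inferred or confirmed.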

3. Understand the Clinical Meaning

Here, natural language processing (NLP) models interpret what was said, not just what was heard. The system identifies symptoms, medications, medical history, and assessment details, then separates clinically relevant information from casual conversation.

4. Pull Context From the EHR

The system checks the patient chart for meds, allergies, vitals, and recent visits. This grounding step prevents hallucination and aligns the note with real clinical data. If the patient already takes a medication, the note reflects that automatically.
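Matching what was said against the chart is a form of medication reconciliation. This sketch assumes medication names have already been extracted from the conversation and that the chart's active medication list has already been fetched. It compares plain lowercase names, whereas a real system would more likely match on normalized identifiers such as RxNorm concepts. The function name and output keys are hypothetical.

```python
from typing import Dict, Iterable, Set


def reconcile_medications(mentioned: Iterable[str], chart_meds: Iterable[str]) -> Dict[str, Set[str]]:
    """Sort medications into continuing, newly mentioned, and charted-but-not-discussed."""
    heard = {name.strip().lower() for name in mentioned}
    charted = {name.strip().lower() for name in chart_meds}
    return {
        "continuing": heard & charted,       # on the chart and discussed: the note can reflect it
        "new_mention": heard - charted,      # not on the chart: needs clinician confirmation
        "not_discussed": charted - heard,    # on the chart but not raised this visit
    }


print(reconcile_medications(["Metformin", "ibuprofen"], ["metformin", "lisinopril"]))
```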

5. Generate the Structured Note

Here, summarization models turn the conversation into a clean SOAP or Assessment & Plan note. They remove filler speech, keep essential medical details, and write in standard clinical phrasing. By the time the visit ends, a draft is waiting inside the EHR.
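Once upstream models have tagged each summarized sentence with a section, assembling the draft is mostly a layout step. This sketch assumes a list of (section, sentence) pairs produced by a hypothetical summarizer. It renders them in standard SOAP order and inserts a visible placeholder for any empty section, so the clinician sees the gap instead of a silently missing heading.

```python
from typing import Dict, List, Tuple

SOAP_ORDER = ["Subjective", "Objective", "Assessment", "Plan"]


def render_soap(labeled: List[Tuple[str, str]]) -> str:
    """Render (section, sentence) pairs into a SOAP note in standard section order."""
    sections: Dict[str, List[str]] = {name: [] for name in SOAP_ORDER}
    for section, sentence in labeled:
        if section not in sections:
            raise ValueError(f"Unknown SOAP section: {section}")
        sections[section].append(sentence)
    lines: List[str] = []
    for name in SOAP_ORDER:
        lines.append(f"{name}:")
        # An empty section is shown explicitly so the reviewer notices it.
        body = sections[name] or ["[Not documented - review]"]
        lines.extend(f"  {sentence}" for sentence in body)
    return "\n".join(lines)


print(render_soap([
    ("Assessment", "Likely viral URI."),
    ("Subjective", "Sore throat for 3 days."),
    ("Plan", "Supportive care; return if worse."),
]))
```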

6. Review and Learn

The clinician reviews the note, makes quick edits, and signs off. Every correction becomes training feedback. Over time, the system learns the organization’s preferred templates, phrasing, and specialty language, which reduces editing even further.

The workflow feels simple to the user, but under the surface, multiple AI systems work together to deliver a sign-ready note in real time. The result is less typing, shorter workdays, and faster turnaround for the billing cycle, all without changing how clinicians practice medicine.

Why Hospitals Need Real-Time Clinical Note Generation

Clinical documentation was meant to preserve a patient’s medical history, not to consume clinicians’ time. Yet documentation has become one of the largest drains on clinical capacity, revenue, and physician well-being.

The numbers are staggering. They show that clinicians spend more time feeding the EHR than speaking with patients, and health organizations pay for it twice: once in labor and again in denied claims.

1. Time Lost to Administrative Work

Multiple studies confirm that physicians now spend nearly half of their working hours inside the EHR. One analysis published in the Annals of Internal Medicine found that doctors spend 49% of their workday on EHR and desk work, and only 27% in direct patient care.

In primary care settings, documentation alone consumes 36 minutes per patient visit.

The work does not end when clinic hours do. A 2022 study reported that U.S. physicians spend 1.77 hours every day completing charts after hours. For many clinicians, this “pajama time” has become routine.

Documentation has become a second shift rather than part of the first one.

2. Documentation Follows Clinicians Home

Surveys show that nearly 70% of clinicians chart from home, often late at night after family hours. While burnout has many contributors, EHR workload remains one of the most cited causes of emotional exhaustion in medicine.

It is not patient care that exhausts clinicians; it is the paperwork. Even when patient volumes are stable, administrative creep continues to steal time and energy.

3. The Hidden Financial Drain

In U.S. hospitals, 5–10% of claims are denied, and documentation or coding errors are a major driver.

A survey from Medical Economics found that 38% of providers experience denial rates of 10% or more, directly tied to missing or insufficient clinical detail.

Hospitals end up hiring additional scribes, auditors, and revenue cycle specialists to fill the gaps, paying twice for documentation that should have been right the first time.

4. Physician Retention Becomes a Revenue Problem

The documentation burden is now one of the strongest predictors of burnout and early exit from medicine. Replacing a single physician can cost a hospital $500,000 to $1 million when factoring in hiring, credentialing, and lost revenue during transition.

This means burnout is now a financial liability, not just an emotional one.

This is why real-time clinical note generation matters. Healthcare needs time returned to clinicians, claims protected at the source, and notes that write themselves instead of consuming entire workdays.

The burden is measurable, and healthcare enterprises cannot scale if physicians spend 50% of their professional lives documenting care instead of delivering it.

How AI Powers Model Training for Clinical Note Generation

Real-time clinical documentation works because the underlying models learn how clinicians speak, how patients describe symptoms, and how medical notes are written. The models improve as they see more conversations, specialties, and edits. Each layer of AI in the system learns a different skill. Together, they create the accuracy and reliability hospitals expect.

1. Learning From Real Clinical Conversations

Training starts with de-identified clinician–patient audio paired with the final signed notes. The model learns how real conversations unfold, including interruptions, filler speech, medical terminology, and accents.

It sees how a clinician asks questions and how a patient explains symptoms in their own words. This real-world exposure makes the system effective in busy clinical settings, not just in controlled environments.

2. Training Medical ASR

Medical Automatic Speech Recognition engines learn from thousands of hours of clinical audio. They are trained to recognize drug names, abbreviations, and specialty-specific language.

The goal is to reduce word error rate while keeping latency low. During training, the model learns how to handle background noise, overlapping speakers, and different microphone setups. Once tuned, it can produce clean text without slowing the encounter.
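Word error rate (WER) is the standard ASR metric: the word-level edit distance (substitutions, deletions, and insertions) between the reference transcript and the ASR output, divided by the number of reference words. A minimal Python implementation using dynamic programming follows. The example sentence is invented to show the kind of drug-name error that clinical ASR training targets.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("Reference transcript must not be empty")
    # prev[j] = edit distance between the reference prefix processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)


# "metformin" heard as "met forming": 1 substitution + 1 insertion over 6 reference words
print(word_error_rate("start metformin 500 mg twice daily",
                      "start met forming 500 mg twice daily"))
```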

3. Training Models to Understand Clinical Language

After transcription, NLP models identify symptoms, history, medications, and assessments. They learn how clinicians phrase questions and how patients describe conditions.

They also learn negations, such as “no shortness of breath,” and temporal cues like “started yesterday.” This is how the system knows what is clinically important and what can be ignored.
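Negation and temporal handling can be illustrated with a rule-based approach in the spirit of NegEx, a well-known clinical negation algorithm. Trained models learn these cues from labeled data rather than fixed lists. This sketch is not a model, and its trigger list and time patterns are deliberately tiny, hypothetical examples: it checks whether a negation word appears within a few tokens before a finding, and it extracts a simple time expression.

```python
import re
from typing import Dict, Union

# Hypothetical, deliberately tiny trigger list; NegEx-style systems use large curated lexicons.
NEGATION_TRIGGERS = ["no", "denies", "without", "negative for"]
TEMPORAL_PATTERN = re.compile(
    r"\b(yesterday|today|last (?:night|week|month)"
    r"|for (?:\d+|one|two|three|a few) (?:days?|weeks?|months?))\b",
    re.IGNORECASE,
)


def annotate_finding(sentence: str, finding: str, window: int = 5) -> Dict[str, Union[str, bool, None]]:
    """Report whether a finding is negated and pull out any temporal cue in the sentence."""
    tokens = re.findall(r"[a-z']+", sentence.lower())
    target = finding.lower().split()
    negated = False
    for i in range(len(tokens) - len(target) + 1):
        if tokens[i:i + len(target)] == target:
            scope = tokens[max(0, i - window):i]
            # Like NegEx termination terms, "but" ends the reach of an earlier negation.
            if "but" in scope:
                scope = scope[max(j for j, tok in enumerate(scope) if tok == "but") + 1:]
            preceding = " ".join(scope)
            negated = any(re.search(rf"\b{re.escape(t)}\b", preceding) for t in NEGATION_TRIGGERS)
            break
    cue = TEMPORAL_PATTERN.search(sentence)
    return {"finding": finding, "negated": negated, "temporal": cue.group(0) if cue else None}


print(annotate_finding("Cough started yesterday, no fever.", "fever"))
print(annotate_finding("No fever but has cough for two weeks.", "cough"))
```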

4. Summarization Models

Summarization models are trained on completed clinical notes. They learn how to turn long conversations into clear SOAP or Assessment & Plan sections.

Instead of copying the transcript, the model rewrites it in proper medical language. It learns what to condense, what to highlight, and how clinicians communicate decisions. This is the layer that produces sign-ready notes instead of raw text.

5. Training RAG for Clinical Grounding

Retrieval-Augmented Generation (RAG) adds another layer of intelligence. During training, the model learns how to fetch patient information, such as medications, allergies, vitals, and recent labs, from the EHR.

It learns when to insert that information into the note and when to flag uncertainty. This grounding step keeps the output factual and prevents hallucination. Clinical grounding is one of the biggest factors in enterprise trust, because it ties documentation to real patient data instead of assumptions.
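One way to flag uncertainty after generation is a support check: every medication the draft note mentions should appear either in the chart or in the conversation. This sketch assumes a medication lexicon and an already-fetched chart medication list (both hypothetical inputs). Anything unsupported is returned for clinician review rather than removed.

```python
import re
from typing import Iterable, List, Set


def mentions(text: str, lexicon: Iterable[str]) -> Set[str]:
    """Lexicon terms (lowercased) that appear in the text as whole words."""
    lowered = text.lower()
    return {t.lower() for t in lexicon if re.search(rf"\b{re.escape(t.lower())}\b", lowered)}


def flag_unsupported(note: str, transcript: str, chart_meds: Iterable[str],
                     med_lexicon: Iterable[str]) -> List[str]:
    """Medications in the draft note that neither the chart nor the conversation supports."""
    lexicon = list(med_lexicon)
    supported = mentions(transcript, lexicon) | {m.lower() for m in chart_meds}
    return sorted(mentions(note, lexicon) - supported)


print(flag_unsupported(
    note="Continue metformin and lisinopril. Start warfarin.",
    transcript="Are you still taking your metformin?",
    chart_meds=["Lisinopril"],
    med_lexicon=["metformin", "lisinopril", "warfarin"],
))
```

A check like this only covers terms in the lexicon, so it complements grounding during generation rather than replacing it.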

6. Continuous Learning From Clinician Edits

The model improves after deployment. Every correction teaches preferred phrasing, specialty-specific vocabulary, and documentation patterns. Over time, the system starts writing notes that match the style of each organization.

Editing time drops, and acceptance rates rise. Continuous learning turns a good model into a dependable clinical tool.
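To tell whether continuous learning is working, teams need a per-note measure of how much clinicians change each draft. A minimal sketch, assuming both the draft and the signed note text are available, uses Python's standard `difflib.SequenceMatcher` to compute a word-level edit ratio. The metric names are hypothetical, and counting only untouched notes as "accepted" is a simplifying assumption.

```python
import difflib
from typing import Dict, List, Tuple


def edit_ratio(draft: str, signed: str) -> float:
    """Word-level share of the note that changed: 0.0 means signed exactly as drafted."""
    matcher = difflib.SequenceMatcher(a=draft.split(), b=signed.split())
    return 1.0 - matcher.ratio()


def feedback_summary(pairs: List[Tuple[str, str]]) -> Dict[str, float]:
    """Summarize (draft, signed) pairs into acceptance rate and mean edit ratio."""
    if not pairs:
        raise ValueError("need at least one draft/signed pair")
    ratios = [edit_ratio(draft, signed) for draft, signed in pairs]
    return {
        "acceptance_rate": sum(1 for r in ratios if r == 0.0) / len(ratios),
        "mean_edit_ratio": sum(ratios) / len(ratios),
    }


print(feedback_summary([
    ("Cough for 3 days.", "Cough for 3 days."),
    ("Cough for 3 days.", "Productive cough for 3 days."),
]))
```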

AI training gives the system the skills clinicians expect, such as listening accurately, understanding context, writing clearly, and staying grounded in patient data. When each layer learns the right behavior, the output becomes reliable enough for daily use in live clinical environments.

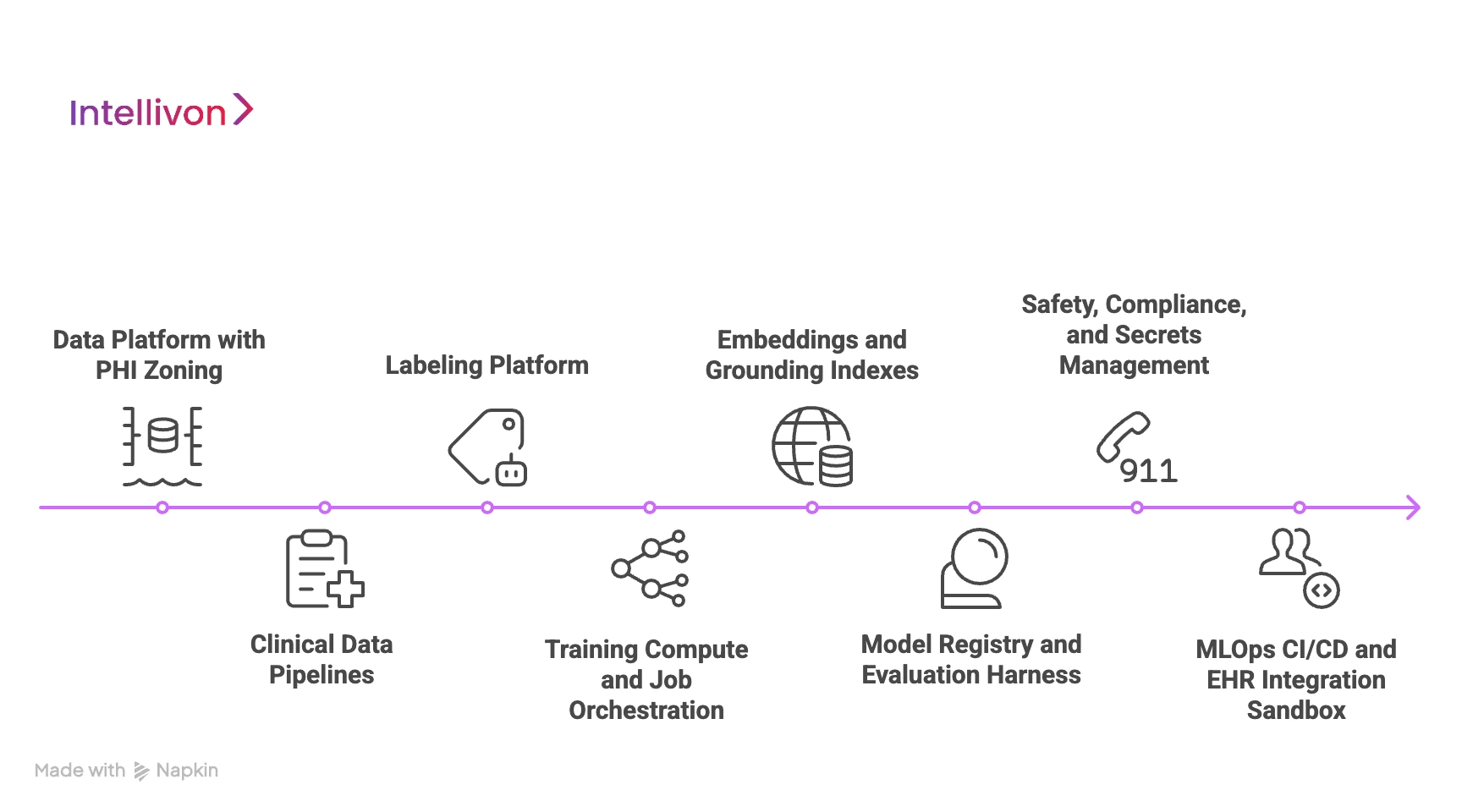

Architecture For Training Clinical Note Generation Models

Training a real-time clinical note generation system requires an architecture that supports secure data handling, strict compliance, and continuous learning. Every layer must work together so the trained models perform reliably in live clinical settings.

1. Data Platform With PHI Zoning

The foundation is a secure data lake that separates raw PHI from de-identified training data. Sensitive audio and transcripts remain in a protected zone with strict access controls.

Once de-identified, data moves to a research zone where engineers can train models safely. Audit logs, encryption, and data lineage records show exactly how clinical data flows through the system. This gives hospitals confidence that privacy is protected at every step.

2. Clinical Data Pipelines

Data arrives in both streaming and batch form. Pipelines clean audio, attach metadata, and verify that patient identifiers have been removed. At the same time, machine learning and rule-based tools detect and redact remaining PHI before training starts.

This ensures the model learns from real conversations without exposing personal information. It also helps the dataset reflect real acoustic conditions: busy exam rooms, telehealth calls, and different microphones.
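Rule-based redaction catches identifiers with predictable shapes, which is why it pairs well with machine learning for everything else. The patterns below are illustrative assumptions only (a US-style phone number, numeric dates, an "MRN" prefix, and email addresses) and would miss many PHI types. Notably, the patient's name in the example survives, which is exactly the kind of gap ML-based detection is meant to cover.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real de-identification covers far more identifier types.
PHI_PATTERNS: List[Tuple[str, re.Pattern]] = [
    ("PHONE", re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")),
    ("DATE", re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")),
    ("MRN", re.compile(r"\bMRN[:#\s]*\d{6,10}\b", re.IGNORECASE)),
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b")),
]


def redact(text: str) -> Tuple[str, int]:
    """Replace matched identifiers with [TYPE] tags; return the text and the redaction count."""
    total = 0
    for label, pattern in PHI_PATTERNS:
        text, count = pattern.subn(f"[{label}]", text)
        total += count
    return text, total


print(redact("Pt Jane, MRN: 00123456, seen 03/14/2024, call 555-123-4567."))
```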

3. Labeling Platform

A labeling platform lets trained annotators tag symptoms, medications, allergies, and assessments inside the transcript. They mark where the conversation fits into SOAP sections and link terms to ICD, SNOMED, and RxNorm codes.

These labels become the “ground truth” that the model learns to match. Because medical language varies across specialties, annotation guidelines ensure consistency and accuracy over time.
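Annotation guidelines are easier to enforce when the labeling platform validates each label before it enters the ground truth. The schema and checks below are a hypothetical sketch: each label is a character-offset span with a SOAP section and an optional code, and the code must name one of the code systems mentioned above (written here as ICD-10-CM, SNOMED CT, and RxNorm, an assumption about which ICD variant is used).

```python
from dataclasses import dataclass
from typing import List, Optional

SOAP_SECTIONS = {"Subjective", "Objective", "Assessment", "Plan"}
CODE_SYSTEMS = {"ICD-10-CM", "SNOMED CT", "RxNorm"}


@dataclass
class Annotation:
    start: int                          # character offset into the transcript (inclusive)
    end: int                            # character offset (exclusive)
    label: str                          # e.g. "SYMPTOM", "MEDICATION" (hypothetical label set)
    section: str                        # SOAP section this span belongs to
    code_system: Optional[str] = None
    code: Optional[str] = None


def validate(transcript: str, annotations: List[Annotation]) -> List[str]:
    """Return readable problems so annotators can fix labels before they become ground truth."""
    problems: List[str] = []
    for i, a in enumerate(annotations):
        if not 0 <= a.start < a.end <= len(transcript):
            problems.append(f"#{i}: span {a.start}-{a.end} is outside the transcript")
        if a.section not in SOAP_SECTIONS:
            problems.append(f"#{i}: unknown section {a.section!r}")
        if (a.code is None) != (a.code_system is None):
            problems.append(f"#{i}: code and code_system must be set together")
        elif a.code_system is not None and a.code_system not in CODE_SYSTEMS:
            problems.append(f"#{i}: unsupported code system {a.code_system!r}")
    return problems


transcript = "Patient reports chest pain."
label = Annotation(16, 26, "SYMPTOM", "Subjective", "ICD-10-CM", "R07.9")
print(transcript[label.start:label.end], validate(transcript, [label]))
```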

4. Training Compute and Job Orchestration

Models are trained on GPU clusters that scale with workload. Containerized jobs run in stages: ASR first, then NLP, then summarization. Checkpointing prevents progress from being lost, and distributed training shortens training cycles.

Run logs capture every parameter and dataset version, so results can be reproduced later. This keeps experimentation structured, even with large datasets and frequent updates.
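Reproducibility depends on recording both the parameters and the exact data a run used. This sketch shows one hypothetical way to do that with the standard library: hash every record, fingerprint the dataset independently of record order, and serialize the manifest as canonical JSON so identical runs produce identical manifests. Real pipelines typically delegate this to an experiment tracker; the field names here are assumptions.

```python
import hashlib
import json
from typing import Dict, Iterable


def dataset_fingerprint(records: Iterable[str]) -> str:
    """Order-independent SHA-256 fingerprint: the same records always yield the same ID."""
    record_hashes = sorted(hashlib.sha256(r.encode("utf-8")).hexdigest() for r in records)
    digest = hashlib.sha256()
    for h in record_hashes:
        digest.update(h.encode("ascii"))
    return digest.hexdigest()


def run_manifest(stage: str, params: Dict[str, object], records: Iterable[str]) -> str:
    """Canonical JSON describing a run, suitable for storing alongside its checkpoints."""
    manifest = {
        "stage": stage,                                  # e.g. "asr", "nlp", "summarization"
        "params": params,                                # hyperparameters as passed to the job
        "dataset_sha256": dataset_fingerprint(records),
    }
    return json.dumps(manifest, sort_keys=True)


print(run_manifest("asr", {"learning_rate": 0.0001, "epochs": 3}, ["record-1", "record-2"]))
```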

5. Feature Store, Embeddings, and Grounding Indexes

Clinical models need context, not just audio. A feature store holds reusable signals such as speaker roles, acoustic profiles, and common clinical phrases, and an embedding service converts medical language and chart data into machine-readable vectors.

These vectors power the retrieval layer, which keeps the model aligned with real patient data. This is one of the core reasons the model can avoid hallucinations.
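Retrieval ranks chart snippets by vector similarity to the current context. In this sketch, simple term-count vectors and cosine similarity stand in for a learned embedding model (a deliberate simplification), and a minimum score discards weak matches instead of passing them to the note generator. The snippets and threshold are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chart_snippets: List[str], k: int = 2,
             min_score: float = 0.1) -> List[Tuple[float, str]]:
    """Return up to k chart snippets most similar to the query, dropping weak matches."""
    q = vectorize(query)
    scored = [(cosine(q, vectorize(s)), s) for s in chart_snippets]
    scored = [pair for pair in scored if pair[0] >= min_score]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


chart = ["Allergy: penicillin (rash)",
         "Medication: metformin 500 mg twice daily",
         "Last A1c 7.2% on prior visit"]
print(retrieve("is the patient allergic to penicillin", chart))
```

Note that "allergic" and "allergy" do not match under exact term counts; bridging variants like that is what learned medical embeddings are for.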

6. Model Registry and Evaluation Harness

Every trained model is stored in a central registry with versioning and metadata. An evaluation harness tests accuracy, latency, hallucination rate, clinical entity extraction, and SOAP completeness.

If a model fails on any metric, it does not move forward. Dashboards help teams compare runs and track improvement over time, which makes the training pipeline predictable and measurable.
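A promotion gate can be expressed as a table of metric limits that every candidate must satisfy. The metric names and thresholds below are hypothetical placeholders chosen to mirror the metrics this section lists; real limits would be set through clinical and product review.

```python
from typing import Dict, List, Tuple

# Hypothetical gates: (metric, direction, limit). Real values come from clinical review.
GATES: List[Tuple[str, str, float]] = [
    ("word_error_rate", "max", 0.10),
    ("p95_latency_ms", "max", 800.0),
    ("hallucination_rate", "max", 0.01),
    ("entity_f1", "min", 0.85),
    ("soap_completeness", "min", 0.95),
]


def promotion_check(metrics: Dict[str, float]) -> Tuple[bool, List[str]]:
    """A candidate moves forward only if every gated metric is present and within its limit."""
    failures: List[str] = []
    for name, direction, limit in GATES:
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif direction == "max" and value > limit:
            failures.append(f"{name}: {value} > {limit}")
        elif direction == "min" and value < limit:
            failures.append(f"{name}: {value} < {limit}")
    return (not failures, failures)
```

Treating a missing metric as a failure keeps an incomplete evaluation run from being promoted by accident.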

7. Safety, Compliance, and Secrets Management

Healthcare AI must prove it is safe. To that end, a policy engine checks medication doses, interaction logic, and uncertainty levels during validation.

Secret management protects keys, credentials, and EHR connections. Additionally, compliance scans confirm encryption policies, retention rules, and least-privilege access.

Every training run produces an audit bundle so hospitals can verify alignment with HIPAA and internal governance.
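A dose check is one of the simplest policy rules to illustrate. This sketch assumes structured medication orders have already been extracted from the note. The daily limits are illustrative values for the example only, not clinical guidance; a production policy engine would source them from a maintained drug knowledge base that accounts for formulation, renal function, and indication. Drugs without a limit on file are routed to the clinician rather than passed silently.

```python
from dataclasses import dataclass
from typing import Dict, List

# Illustrative daily maximums for this sketch only; not clinical guidance.
MAX_DAILY_MG: Dict[str, float] = {"metformin": 2550.0, "lisinopril": 80.0}


@dataclass
class MedOrder:
    drug: str
    dose_mg: float
    times_per_day: int


def dose_flags(orders: List[MedOrder]) -> List[str]:
    """Return review flags; the policy engine never silently corrects a dose."""
    flags: List[str] = []
    for order in orders:
        limit = MAX_DAILY_MG.get(order.drug.lower())
        daily = order.dose_mg * order.times_per_day
        if limit is None:
            flags.append(f"{order.drug}: no reference limit on file, route to clinician")
        elif daily > limit:
            flags.append(f"{order.drug}: {daily:g} mg/day exceeds {limit:g} mg/day")
    return flags


print(dose_flags([MedOrder("Metformin", 1000, 2), MedOrder("lisinopril", 50, 2)]))
```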

8. MLOps CI/CD and EHR Integration Sandbox

Once a model passes evaluation, it moves through an MLOps CI/CD pipeline. The EHR sandbox tests whether notes land in the right fields, with the right codes and structure.

Quantization and optimization prepare the model for real-time performance. Shadow mode then lets the system run in the background before full rollout, which protects clinical workflows. When everything checks out, the model is ready for production.
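Shadow mode can be sketched as a wrapper that always serves the production model's output while running the candidate on the same input and recording how often the two agree. This sketch assumes both models are plain callables from transcript text to note text (a hypothetical interface) and uses exact string equality as a crude agreement measure; a real comparison would more likely rely on clinical review or a similarity metric.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]   # hypothetical interface: transcript text in, note text out


def shadow_serve(production: Model, candidate: Model,
                 transcripts: List[str]) -> Tuple[List[str], Dict[str, float]]:
    """Serve production output only; run the candidate silently and record agreement."""
    served: List[str] = []
    agreements = 0
    candidate_errors = 0
    for transcript in transcripts:
        note = production(transcript)
        served.append(note)                      # clinicians only ever see this output
        try:
            shadow_note = candidate(transcript)
        except Exception:                        # a failing candidate must not break live care
            candidate_errors += 1
            continue
        agreements += int(shadow_note == note)
    n = len(transcripts)
    return served, {
        "agreement_rate": agreements / n if n else 0.0,
        "candidate_error_rate": candidate_errors / n if n else 0.0,
    }
```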

This architecture turns raw clinical data into safe, reliable, and scalable AI models. When the foundation is built correctly, the output is a documentation system clinicians can depend on day after day.

What Model Types Power Clinical Note Generation

Real-time clinical note generation is a coordinated stack of AI components, each solving a different part of the problem. Together, these models create documentation that is sign-ready, compliant, and consistent.

1. Medical ASR

Medical Automatic Speech Recognition (ASR) converts speech into text with near-instant latency. Unlike general dictation tools, medical ASR learns clinical vocabulary, abbreviations, drug names, and overlapping speech.

It can separate speakers, filter background noise, and produce readable text instead of raw transcripts. Real-time systems rely on this layer for speed. If ASR is slow or inaccurate, the entire stack suffers.

2. Clinical Language Models

Once the speech becomes text, the system needs to understand what it means. Clinical language models detect symptoms, history, medications, allergies, and exam findings inside natural conversation.

They recognize negations (“no chest pain”), duration (“for three days”), and severity. This is how the system knows what matters clinically and what can be ignored. Without this layer, the output would be transcription, not documentation.

3. Summarization Models

Summarization models transform long conversations into structured medical notes. They learn how clinicians document assessments, plans, and medical decisions.

Here, filler speech is removed, and relevant details are rewritten in clean clinical language. These models are trained on thousands of signed notes, so the final output matches industry and specialty norms. The result is a concise, readable note that fits standard templates.

4. Clinical NER

Named Entity Recognition (NER) models extract medications, dosages, allergies, vitals, procedures, and diagnosis terms.

They also map text to ICD, SNOMED, and RxNorm codes when needed. This supports billing, quality reporting, and clinical accuracy, and it helps ensure the note is coded correctly.
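Code mapping is usually done by trained entity-linking models, but a lexicon lookup shows the shape of the output. The dictionary below is a small illustrative assumption mapping a few surface phrases to ICD-10-CM codes. Real coding requires full vocabularies, context, and coder or clinician review to choose the most specific code.

```python
import re
from typing import Dict, List, Tuple

# Small illustrative lexicon: surface phrase -> (ICD-10-CM code, description).
ICD10_LEXICON: Dict[str, Tuple[str, str]] = {
    "type 2 diabetes": ("E11.9", "Type 2 diabetes mellitus without complications"),
    "high blood pressure": ("I10", "Essential (primary) hypertension"),
    "hypertension": ("I10", "Essential (primary) hypertension"),
    "chest pain": ("R07.9", "Chest pain, unspecified"),
}


def map_to_icd10(text: str) -> List[Tuple[str, str, str]]:
    """Return (matched phrase, code, description) for every lexicon phrase found in the text."""
    lowered = text.lower()
    hits: List[Tuple[str, str, str]] = []
    for phrase, (code, description) in ICD10_LEXICON.items():
        if re.search(rf"\b{re.escape(phrase)}\b", lowered):
            hits.append((phrase, code, description))
    return hits


print(map_to_icd10("History of high blood pressure and type 2 diabetes."))
```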

5. RAG and EHR Grounding

Retrieval-Augmented Generation (RAG) and grounding models check the patient’s existing chart for meds, allergies, vitals, and medical history.

This keeps the model factual and prevents hallucination. If the patient is already on a drug, the note reflects it. If something sounds uncertain, the system flags it for review. Grounding is one of the most important layers for enterprise trust.

6. Safety Models and Policy Enforcement

Healthcare requires strict guardrails, and safety models verify medication doses, interaction risks, and compliance requirements.

They route uncertain statements to the clinician instead of guessing. Combined with audit trails and access controls, these models make the system safe enough for real clinical work.

7. Real-Time Optimization

The final layer ensures everything runs fast. This is where models are optimized and distilled to run with low compute and minimal delay.

This lets clinicians speak naturally without waiting for the system to catch up. If the system lags, clinicians will not adopt it. Real-time optimization makes the entire solution feel invisible.
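Quantization is one common optimization: storing weights as 8-bit integers instead of 32-bit floats cuts weight memory by a factor of four and can speed up inference on hardware that supports it. This sketch shows only the arithmetic of symmetric per-tensor int8 quantization on a plain list of weights. Frameworks apply the same idea across whole models, and nothing here reflects a specific production toolchain.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 values back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
print(q, scale, dequantize(q, scale))
```

Each restored weight is within half a quantization step (scale / 2) of the original, which is the accuracy cost traded for speed and memory.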

Each of these models plays a different role, and none can replace the others. When the stack is trained correctly, the result is a note that is accurate, compliant, and ready for signature before the next patient arrives.

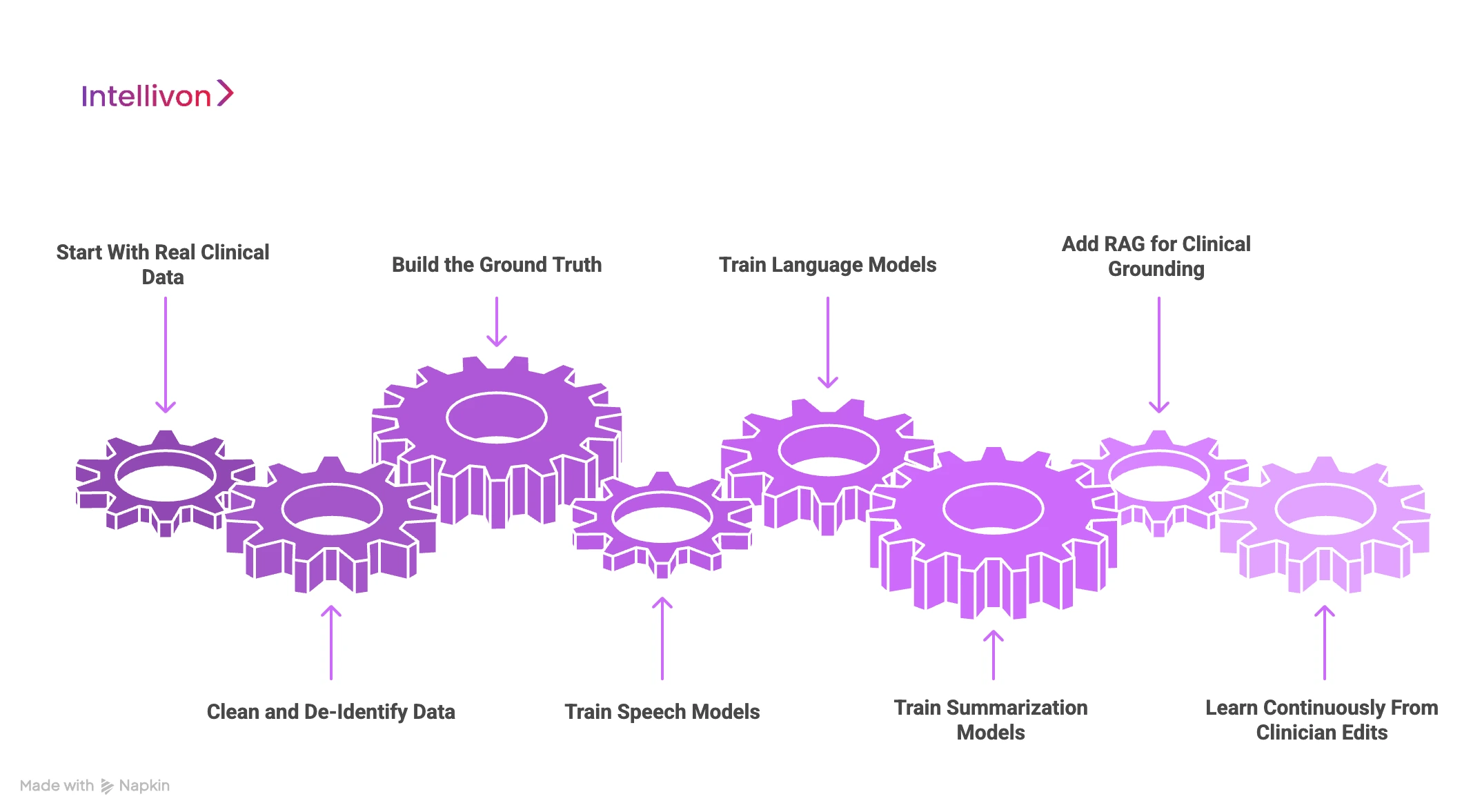

How We Train Real-Time Note Generation Models

Training a clinical note generation system follows a structured pipeline designed to make the output reliable in busy hospitals, urgent care centers, and telehealth settings.

At Intellivon, our goal is to train models that produce notes that are accurate, grounded in patient data, and ready for signature with minimal edits. Here is how that process works step by step.

1. Start With Real Clinical Data

Training begins with de-identified clinician–patient conversations paired with the final signed notes from the EHR. This teaches the models how real medicine sounds, not scripted examples.

They learn how clinicians ask questions, how patients describe symptoms, and how decision-making language appears in conversation. The more diverse the dataset, the better the system performs in real-world conditions.

2. Clean and De-Identify Data Safely

Every audio file moves through a secure de-identification pipeline. Here, PHI is removed, background noise is filtered, and metadata such as specialty, encounter type, or microphone source is attached.

Only de-identified data enters the training environment. This keeps the entire process HIPAA-aligned and gives hospitals confidence that privacy is protected.
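Attaching metadata such as specialty also makes it possible to build evaluation sets that represent every specialty. The sketch below is a hypothetical stratified split over de-identified records (dictionaries with a `specialty` field), with a fixed seed so the split is reproducible.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Dict[str, str]


def stratified_split(records: List[Record], key: str = "specialty",
                     test_fraction: float = 0.2, seed: int = 13) -> Tuple[List[Record], List[Record]]:
    """Hold out roughly test_fraction of each group (e.g. each specialty) for evaluation."""
    groups: Dict[str, List[Record]] = defaultdict(list)
    for record in records:
        groups[record[key]].append(record)
    rng = random.Random(seed)                 # fixed seed keeps the split reproducible
    train: List[Record] = []
    test: List[Record] = []
    for value in sorted(groups):              # sorted so group order does not depend on input order
        members = list(groups[value])
        rng.shuffle(members)
        # Every group with at least two records contributes at least one held-out example.
        n_test = max(1, round(len(members) * test_fraction)) if len(members) > 1 else 0
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return train, test
```

If one patient contributes several encounters, grouping by a de-identified patient key before splitting would also keep the same voice out of both sets.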

3. Build the Ground Truth

Next, clinical annotators label the data. They tag symptoms, medications, allergies, exam findings, and assessment details inside the transcript.

They also mark the structure of the note, which includes what belongs to HPI, ROS, Exam, or A&P. This becomes the “answer key” that models learn to match. Without structured ground truth, the system would transcribe well but fail to write clinically useful notes.

4. Train Speech Models for Medical Accuracy

Automatic Speech Recognition (ASR) models are trained on clinical language, not consumer speech. They learn drug names, abbreviations, complex phrasing, and overlapping speakers.

We also optimize them for real-time use, because latency matters at the bedside. The goal is to produce clean text in milliseconds so clinicians never feel the system slowing them down.

5. Train Language Models

Speech alone is not enough. This is why the next layer learns what the text means clinically. NLP models identify the history, symptoms, plans, and decisions inside the conversation. They learn negations (“no chest pain”), duration (“two weeks”), and severity (“mild shortness of breath”).

This gives the system the ability to separate small talk from medically relevant details.

6. Train Summarization Models

Summarization models learn from thousands of real clinical notes. They study how clinicians document conditions, plan follow-ups, or phrase assessments.

When trained well, the model converts long conversations into short, structured documentation that fits standard templates. The output reads like a real clinician wrote it, not like a transcript.

7. Add RAG for Clinical Grounding

Retrieval-Augmented Generation (RAG) gives the model factual memory. It pulls medications, allergies, vitals, and labs directly from the chart. This prevents hallucination and ensures the note reflects real patient data, not assumptions.

If something is missing or uncertain, the system flags it for review instead of guessing. Grounding is one of the biggest factors behind physician trust.

8. Learn Continuously From Clinician Edits

Once deployed, the model continues to learn. Every approval, correction, or template change becomes training feedback.

Within weeks, editing time drops and acceptance rates rise. Over months, the system adapts to each organization’s language, coding preferences, and documentation culture. What starts strong becomes even stronger with real usage.

This is how real-time note generation becomes reliable, compliant, and scalable. Intellivon’s training pipeline makes that performance repeatable across hospitals, telehealth platforms, and multi-specialty groups, without disrupting clinical workflows.

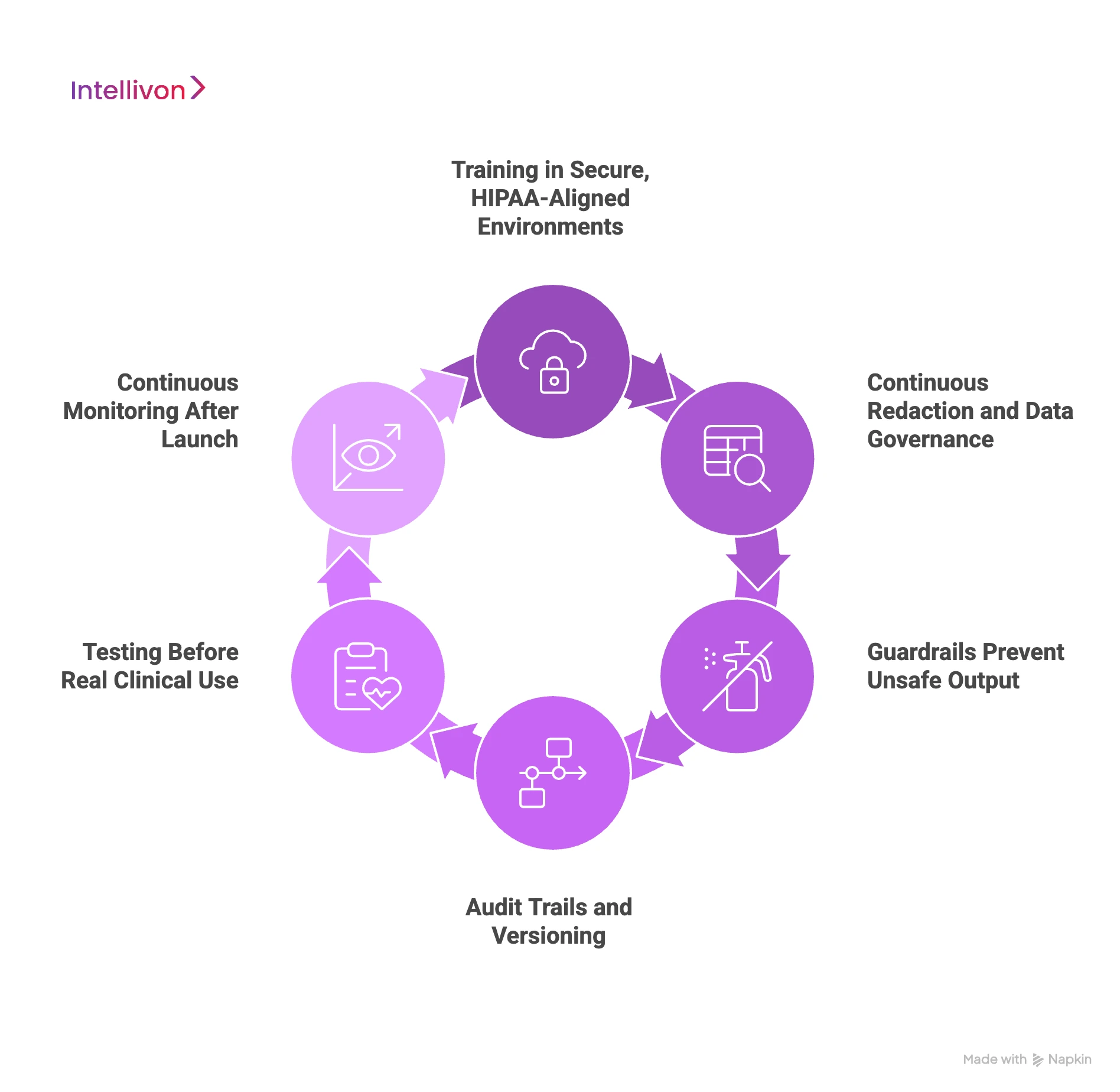

How We Ensure Safety and Compliance in Models

Clinical documentation is a regulated workflow. Any AI system that touches patient records must protect privacy, preserve data integrity, and avoid clinical risk. Compliance is the foundation of trust, and at Intellivon, safety is built into the system long before the first model is trained.

1. Training in Secure, HIPAA-Aligned Environments

All training happens inside secure cloud or on-prem environments designed for PHI. Raw data stays in protected storage with encryption at rest and in transit.

Only de-identified data enters the model training zone. Access is role-based, logged, and audited, so every step is traceable and defensible.

2. Continuous Redaction and Data Governance

Before data reaches a model, automated redaction tools remove patient identifiers. Human review checks edge cases to avoid accidental exposure.

Each file is tagged with metadata, lineage, and retention rules. This keeps the process aligned with HIPAA, GDPR, and internal compliance policies.

3. Guardrails Prevent Unsafe Output

Safety models continuously check medication doses, contraindications, and interaction risks. If something sounds uncertain, the system flags it for clinical review. RAG grounding adds another layer by pulling facts directly from the chart, like medications, allergies, vitals, and recent visits. This prevents hallucination and keeps the note clinically accurate.

4. Audit Trails and Versioning

Every training run produces a full audit bundle: datasets used, checkpoints, evaluation metrics, and access logs. Hospitals can trace how a model learned, what data shaped it, and how each version changed over time.

This supports internal compliance teams and prepares for FDA or EU AI Act oversight.

5. Testing Before Real Clinical Use

Models go through safety simulations, hallucination tests, latency checks, and red-flag detection. A staging environment mirrors real EHR workflows to ensure templates, timestamps, and routing behave correctly.

Shadow mode lets the system run quietly alongside clinicians before going live.

6. Continuous Monitoring After Launch

Safety continues after deployment. Dashboards track accuracy, editing time, latency, and unusual patterns. If a model starts drifting, retraining pipelines update it with new data. This keeps accuracy high even as medical language, drug lists, and workflows evolve.
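Drift detection can start with a simple comparison of a rolling average against the launch baseline. This sketch assumes each signed note yields an edit ratio (the share of the draft the clinician changed) and that a baseline was measured at go-live. The window size and tolerance are hypothetical tuning parameters.

```python
from collections import deque
from statistics import mean
from typing import Deque, Optional


class DriftMonitor:
    """Raise an alert when the rolling mean edit ratio climbs above the go-live baseline."""

    def __init__(self, baseline: float, window: int = 50, tolerance: float = 0.05) -> None:
        self.baseline = baseline                    # mean edit ratio measured at go-live
        self.tolerance = tolerance                  # hypothetical allowed increase
        self.window = window
        self.recent: Deque[float] = deque(maxlen=window)

    def observe(self, edit_ratio: float) -> Optional[str]:
        """Record one signed note; return an alert message if the full window has drifted."""
        self.recent.append(edit_ratio)
        if len(self.recent) < self.window:
            return None                             # not enough data yet
        current = mean(self.recent)
        if current > self.baseline + self.tolerance:
            return (f"drift: mean edit ratio {current:.3f} vs baseline "
                    f"{self.baseline:.3f}; queue retraining")
        return None
```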

Safety and compliance, not just high accuracy scores, are what make real-time clinical documentation viable. With strong governance, chart-level grounding, and constant monitoring, health systems get a solution that is trustworthy, predictable, and ready for daily clinical use.

Conclusion

Real-time clinical note generation is now a reality. With the right training process, solid grounding, and ongoing learning, these models can eliminate after-hours charting, reduce denied claims, and give clinicians more time for patients. The technology is most effective when it is built on real clinical data, strict compliance, and measurable results.

Hospitals don’t have to tackle this on their own. Success comes from working with a solution provider that knows healthcare workflows, regulatory needs, and robust AI engineering. The right partner offers the necessary frameworks, safety measures, and MLOps practices to produce reliable, sign-ready clinical documentation at scale.

Train Real-Time Clinical Note Generation Models With Intellivon

At Intellivon, we train real-time clinical note generation models that deliver medical-grade accuracy, regulatory compliance, and enterprise-scale performance.

Our systems eliminate manual charting, reduce billing errors, and cut after-hours documentation, while integrating cleanly with existing EHR workflows. Every build is engineered for high trust, low latency, and measurable ROI from the start.

Why Partner With Intellivon?

- Compliance-First Design: Training happens inside HIPAA-aligned, GDPR-ready environments with PHI zoning, encrypted storage, audit trails, and FDA/EU AI Act readiness.

- Healthcare-Tuned AI Models: ASR, NLP, summarization, and RAG models are trained on clinical audio, specialty language, and real-world workflows to produce sign-ready SOAP and A&P notes.

- EHR Grounding and Interoperability: Models are grounded in live patient context and mapped for FHIR, HL7, and SMART on FHIR, so documentation lands in the right chart automatically.

- Scalable Deployment Architecture: Cloud-native inference, observability dashboards, autoscaling, and high-availability design ensure reliability across hospitals and telehealth networks.

- Continuous Optimization: MLOps pipelines detect drift, retrain models, and improve output through clinician feedback, so accuracy grows with usage, not just at launch.

- Zero-Trust Security: Encryption at every layer, strict IAM, tokenization, and continuous threat detection safeguard PHI without slowing clinical care.

- Human-Centered Workflows: Clinician review screens, coder views, and audit controls make verification simple. Adoption rises because the experience feels natural.

- Proven Healthcare Expertise: With years of large-scale healthcare AI deployments, we bring reference architectures, security maturity, and outcome-based delivery models.

Book a strategy call with Intellivon to see how a custom-trained real-time note generation system can reduce documentation time, increase billing accuracy, and scale seamlessly across your clinical network.

FAQs

Q1. How accurate are real-time clinical note generation models in real hospital settings?

A1. Accuracy depends on clinical data quality, speech model tuning, and grounding with real patient records. Well-trained systems consistently achieve low word-error rates, structured SOAP output, and high clinician acceptance. Most hospitals report that clinicians review and sign notes in seconds, not minutes.

Q2. How long does it take to train a clinical note generation model for production use?

A2. Most enterprise deployments require initial training and validation over 8–16 weeks, depending on dataset size, specialties, and compliance scope. After go-live, continuous learning pipelines improve accuracy with every new edit, template update, and usage pattern.

Q3. What data is needed to train these models safely?

A3. Training requires de-identified clinician–patient audio, transcripts, final signed notes, and structured labels for clinical entities. To prevent hallucination, models must also be grounded in real patient context: medications, allergies, vitals, and recent encounters.

Q4. Can these systems work with Epic, Cerner, or Meditech?

A4. Yes. Real-time note generation models are deployed with FHIR, HL7, and SMART on FHIR connectivity. Notes can auto-post to the right chart, encounter, and timestamp. No manual copy-paste, no workflow disruption.
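For reference, a FHIR R4 `DocumentReference` is one common resource for attaching a note to a patient's chart. This sketch builds one as a plain dictionary, with the note text base64-encoded as the attachment and a LOINC progress-note type. The patient and encounter IDs are hypothetical. How the resource is actually written (endpoint, SMART on FHIR authorization, and vendor-specific requirements) varies by EHR and is not shown.

```python
import base64
import json
from datetime import datetime, timezone


def document_reference(note_text: str, patient_id: str, encounter_id: str) -> dict:
    """Build a FHIR R4 DocumentReference carrying a signed note as a base64 attachment."""
    return {
        "resourceType": "DocumentReference",
        "status": "current",
        "docStatus": "final",                       # the note has been signed
        "type": {
            "coding": [{"system": "http://loinc.org", "code": "11506-3", "display": "Progress note"}]
        },
        "subject": {"reference": f"Patient/{patient_id}"},
        "date": datetime.now(timezone.utc).isoformat(),
        "content": [{
            "attachment": {
                "contentType": "text/plain",
                "data": base64.b64encode(note_text.encode("utf-8")).decode("ascii"),
            }
        }],
        "context": {"encounter": [{"reference": f"Encounter/{encounter_id}"}]},
    }


if __name__ == "__main__":
    resource = document_reference("Subjective: sore throat for 3 days.", "example-patient", "example-encounter")
    print(json.dumps(resource, indent=2))
```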

Q5. What does it cost to train and deploy a real-time note generation system?

A5. Most enterprise builds fall between $60,000 and $150,000, depending on scope, integrations, compliance requirements, and scaling needs. Ongoing optimization typically ranges from 15–25% of the initial build. Costs drop when models are fine-tuned instead of trained from scratch.