Emergency departments now handle far more patient visits than they did in previous years, but physician capacity and staffing have not kept up. The result is growing operational strain on clinical teams. Health systems, insurers, and telehealth platforms are responding by deploying AI health assistants as part of their operations. These systems are meant to expand clinical capacity without hiring more staff or increasing burnout.

Creating consumer symptom checkers is fairly simple. However, building enterprise-grade AI health assistants is complex. Integrating with clinical workflows, complying with HIPAA regulations across jurisdictions, and meeting the standards of medical directors present challenges that many guides miss. These challenges will determine whether an AI project delivers lasting value or leads to clinical and regulatory issues.

At Intellivon, we develop AI health assistants for health systems where clinical accuracy validation and real-time EHR synchronization are essential. Our platforms tackle the risk of conversational AI errors and ensure data isolation from the ground up. This approach supports reliable clinical assistance while keeping enterprise deployments ready for audits amid changing regulations. In this blog, we will draw from this experience and discuss how to build such apps from scratch.

Why Enterprise Healthcare Organizations Are Investing in AI Health Assistants Now

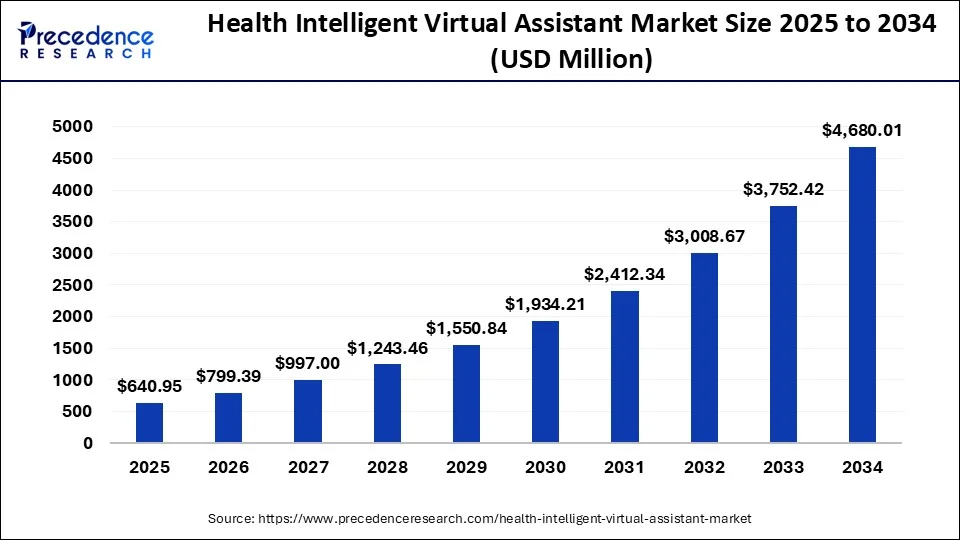

AI health assistant apps use NLP, machine learning, and clinical decision support algorithms to provide 24/7 patient triage, symptom assessment, medication management, and care navigation. The global market for intelligent virtual assistants in healthcare is expected to grow from approximately USD 513.9 million in 2024 to nearly USD 4.68 billion by 2034, expanding at a CAGR of about 24.7% between 2025 and 2034.

Market Insights:

- Healthcare systems are shifting toward preventive care powered by AI assistants.

- Early detection and chronic condition management reduce avoidable hospital stays.

- In the UK, acute bed overuse remains critical, with 13% occupancy pressure.

- AI analyzes wearables and longitudinal health data to trigger timely interventions.

- Proactive monitoring helps avert clinical deterioration before crises occur.

Why AI Delivers an Advantage at Enterprise Scale

- Personalization operates beyond human capacity limits.

- Predictive models forecast health risks with up to 90% accuracy.

- Outcomes improve by 30–35% through early intervention.

- Each interaction strengthens the system through continuous learning.

- Compounding data improves monitoring precision and care recommendations.

AI health assistants shift healthcare from reactive intervention to proactive prevention at enterprise scale. For organizations, they unlock measurable cost control, stronger outcomes, and sustainable care delivery models.

What Are AI Health Assistant Apps?

AI health assistant apps are clinical support platforms that use natural language processing, machine learning, and decision support logic to interact with patients and guide care. They collect symptoms, answer routine health questions, support medication adherence, and assist with care navigation.

In enterprise settings, these assistants integrate with EHRs, scheduling systems, and care management tools. They operate under strict security, compliance, and accuracy controls.

Unlike consumer chatbots, AI health assistants support real clinical workflows. They extend access, reduce administrative burden, and generate structured data for quality, compliance, and population health programs.

How Do They Work?

AI health assistant apps operate through a structured workflow aligned with real clinical processes. Each step captures patient input, applies clinical logic, and ensures safe escalation without bypassing medical oversight.

Step 1: Patient Input Collection

Patients describe symptoms, concerns, or questions using text or voice interfaces. The system captures both structured responses and free-text input for downstream analysis.

Step 2: Language Understanding

Natural language processing interprets intent, symptoms, timelines, and context. Medical entities such as conditions, medications, and risk indicators are extracted for reasoning.

Step 3: Clinical Reasoning

Machine learning models and decision-support rules evaluate the collected data. The system assesses urgency, identifies potential risks, and determines appropriate care pathways.

Step 4: Response Generation

The assistant generates guidance grounded in verified medical knowledge. Confidence thresholds control tone, depth, and whether recommendations are displayed or restricted.

Step 5: Escalation and Handoff

High-risk or low-confidence cases escalate to clinicians or support teams. Context transfers automatically to prevent repetition and preserve continuity.

Step 6: System Integration

Conversations sync with EHRs, scheduling systems, and care platforms. This ensures clinical visibility, documentation, and workflow alignment.

This workflow enables safe automation while preserving clinical judgment. Each step balances speed, accuracy, and enterprise-grade compliance.
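The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a clinical implementation: the phrase matching stands in for real clinical NLP, and `RED_FLAGS`, `CONFIDENCE_FLOOR`, `Assessment`, and `Handoff` are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass, field

# Hypothetical red-flag phrases; a real system would use validated triage rules.
RED_FLAGS = {"chest pain", "shortness of breath", "suicidal"}
CONFIDENCE_FLOOR = 0.7  # assumed threshold below which a human takes over


@dataclass
class Assessment:
    symptoms: list
    urgency: str       # "emergency" or "routine"
    confidence: float  # 0.0 to 1.0


@dataclass
class Handoff:
    reason: str
    transcript: list = field(default_factory=list)
    symptoms: list = field(default_factory=list)


def extract_symptoms(message, vocabulary):
    """Step 2 stand-in: naive phrase matching instead of clinical NLP."""
    text = message.lower()
    return sorted(term for term in vocabulary if term in text)


def assess(symptoms):
    """Step 3 stand-in: rule-based urgency with a toy confidence score."""
    if any(s in RED_FLAGS for s in symptoms):
        return Assessment(symptoms, "emergency", 0.95)
    # No recognised symptoms means the input was not understood.
    confidence = 0.8 if symptoms else 0.3
    return Assessment(symptoms, "routine", confidence)


def respond_or_escalate(message, vocabulary):
    """Steps 4 and 5: answer only when safe, otherwise hand off with context."""
    result = assess(extract_symptoms(message, vocabulary))
    if result.urgency == "emergency":
        return Handoff("high-risk symptoms", [message], result.symptoms)
    if result.confidence < CONFIDENCE_FLOOR:
        return Handoff("low confidence", [message], result.symptoms)
    return f"Guidance for: {', '.join(result.symptoms)}"
```

The handoff object carries the transcript and extracted symptoms, which is what lets a clinician pick up the case without asking the patient to repeat themselves.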

What Separates Symptom Checkers from Enterprise AI Health Assistants

Consumer symptom checkers and enterprise AI health assistants solve very different problems. While both may use conversational AI, their design goals, risk profiles, and accountability levels diverge sharply.

Enterprise platforms operate inside regulated care delivery environments, where clinical accuracy, integration, and compliance determine adoption and long-term viability.

At this level, the difference comes down to whether the system can safely participate in healthcare operations.

Key Differences at a Glance

| Dimension | Consumer Symptom Checkers | Enterprise AI Health Assistants |
| --- | --- | --- |
| Clinical Accuracy & Liability | Informational guidance with disclaimers | Clinically validated logic aligned with diagnostic and triage standards |
| Regulatory Architecture | Basic encryption and privacy policies | HIPAA- and GDPR-by-design systems with audit logging and BAAs |
| System Integration | Standalone experience | Deep integration with EHRs, scheduling, billing, and care platforms |
| Escalation & Handoff | Generic “consult a doctor” messaging | Intelligent routing with full clinical context transfer |
| User Access Model | Patient-only interface | Role-based access for patients, clinicians, care teams, and proxies |
| Data Governance | User data is often monetized or loosely governed | Clear data ownership, retention rules, and de-identification controls |
| Hallucination Management | Minimal safeguards against incorrect advice | Guardrails, confidence scoring, curated fallback content, and human-in-the-loop |
| Performance Expectations | Best-effort availability | High-availability architecture with disaster recovery planning |

Why These Differences Matter

1. Clinical Accuracy and Liability

Consumer tools avoid responsibility through disclaimers. Enterprise systems cannot. When AI influences care pathways, organizations assume liability for errors, making validation and oversight mandatory.

2. Regulatory Compliance Architecture

Basic security is insufficient in regulated environments. Enterprise platforms must enforce access controls, immutable audit trails, and documented compliance processes that withstand regulatory scrutiny.

3. Integration Requirements

Standalone tools create data silos. Enterprise assistants must synchronize with EHRs and operational systems to avoid duplicate documentation and clinician resistance.

4. Escalation and Handoff Protocols

Generic advice breaks trust. Clinicians adopt systems that escalate appropriately and transfer full context without forcing patients or staff to repeat information.

5. Multi-Stakeholder Access

Healthcare delivery is collaborative. Enterprise platforms support multiple roles with permissioned access rather than isolating the experience to the patient alone.

6. Hallucination Risk Management

Plausible but incorrect medical responses can cause patient harm. Enterprise systems mitigate this risk through confidence thresholds, guardrails, and human review mechanisms.

7. Performance and Reliability

Downtime is inconvenient for consumer apps. In healthcare settings, it is unacceptable. Enterprise platforms are engineered for reliability under load.

Symptom checkers optimize for convenience. Enterprise AI health assistants optimize for safety, accountability, and operational fit. These requirements shape every architectural, clinical, and compliance decision from day one.

AI-Powered Virtual Assistants Reduced Call Volume by 30%

Healthcare contact centers are under constant pressure from routine patient inquiries. Appointment questions, medication clarifications, eligibility checks, and follow-ups consume clinical and non-clinical staff time daily.

AI-powered virtual assistants are now absorbing a large share of this demand, reducing call volume while improving response speed and consistency.

1. Routine Inquiry Deflection at Scale

AI virtual assistants handle high-frequency, low-risk patient questions automatically. These include appointment logistics, basic symptom guidance, medication reminders, and coverage questions.

2. Faster Resolution Without Human Bottlenecks

AI assistants respond instantly and operate 24/7 without queue delays. Patients receive answers in seconds instead of waiting on hold or for callbacks.

This speed shortens resolution time for routine issues and improves patient satisfaction without increasing staffing levels.

3. 60–80% of Routine Inquiries Handled Automatically

Healthcare organizations report that AI assistants resolve 60–80% of routine inquiries end-to-end. Human agents are reserved for complex, high-context, or high-risk cases.

This separation improves staff utilization and reduces burnout across support and clinical teams.

4. Operational Impact Beyond Call Centers

Lower call volume reduces staffing costs and overtime reliance. Structured AI conversations create defensible documentation trails for audits and quality reporting. Consistent responses also reduce variability and compliance risk across patient communications.

AI-powered virtual assistants remove routine demand from healthcare call centers at scale. This shift frees human teams to focus on clinical and complex interactions that require judgment and empathy.

Essential AI-Powered Features in Enterprise Health Assistant Apps

Enterprise AI health assistants are not defined by conversation alone. Their value comes from how accurately they assess risk, integrate into care delivery, and support clinical decision-making at scale.

Each feature below contributes to operational efficiency, patient safety, and measurable outcomes when implemented correctly.

1. Intelligent Symptom Assessment & Triage

This capability analyzes patient-reported symptoms using natural language input to support structured triage. It relies on clinical ontologies, probabilistic reasoning, and confidence scoring to assess urgency.

At the enterprise level, outputs must align closely with physician decision-making for common presentations. High-acuity signals require immediate escalation to avoid false reassurance and clinical risk.

2. Conversational Care Navigation

Care navigation guides patients to the appropriate setting, such as primary care, urgent care, telehealth, or emergency services. The system interprets intent, context, and prior history across multi-turn conversations.

Enterprise deployments must account for network status, provider availability, and scheduling constraints. Without real-time integration, navigation loses clinical and financial relevance.

3. Medication Management & Adherence Support

Medication support includes reminders, refill alerts, side-effect monitoring, and interaction awareness. These workflows depend on accurate medication lists and trusted drug knowledge sources.

For enterprises, formulary awareness and prior authorization visibility are critical. Clinical oversight remains necessary, as incorrect interaction guidance introduces liability and patient safety concerns.

4. Chronic Disease Monitoring & Coaching

This feature tracks longitudinal biometric data from remote monitoring devices and identifies concerning trends. Time-series analysis and anomaly detection enable early intervention.

Enterprise-grade systems integrate with medical-grade devices and notify care teams when thresholds are crossed. Coaching logic must support escalation pathways rather than operate in isolation.
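As a rough illustration of the anomaly detection mentioned above, the sketch below flags a reading when it deviates sharply from a trailing window of recent values. The function name, window size, and z-score threshold are assumptions for the example; production thresholds would be set with clinical input and per-patient baselines.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, z_threshold=3.0):
    """Return indices of readings that deviate sharply from the trailing window.

    Each reading is compared with the mean and standard deviation of the
    `window` readings immediately before it (a rolling z-score).
    """
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any change as notable.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


# Resting heart rate samples with one sudden spike at index 6.
heart_rate = [72, 74, 73, 75, 74, 73, 118, 74]
alerts = flag_anomalies(heart_rate)
```

A flagged index would feed the care-team notification path rather than a patient-facing message, in line with the escalation requirement above.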

5. Mental Health & Behavioral Support

Behavioral support includes mood tracking, guided exercises, risk detection, and care navigation. Sentiment analysis and safety scoring help identify when human intervention is required.

In enterprise settings, integration with behavioral health platforms and crisis services is mandatory. AI supports engagement and access, but cannot replace licensed clinicians.

6. Preventive Care & Health Maintenance

Preventive workflows track screenings, immunizations, and routine visits based on patient profiles. Recommendation engines analyze gaps in care using structured clinical data.

Enterprises require support for multiple guidelines and internal protocols. Generic reminders are insufficient in regulated, value-based care environments.

7. Multilingual and Accessibility Support

Accessibility features include real-time translation, voice interaction, and visual accommodations. At the same time, medical language understanding must account for dialects, literacy levels, and cultural context.

At scale, these capabilities expand access and support equity objectives. In many public and regulated programs, accessibility is not optional.

These features define what enterprise AI health assistants can deliver in real healthcare environments. When designed with clinical rigor and integration depth, they create operational value without compromising safety.

Technical Architecture for Scalable AI Health Assistant Platforms

Enterprise AI health assistants run under sustained load, strict compliance, and constant integration pressure. Architecture decides whether features remain stable as usage grows. Weak foundations show up later as latency, downtime, and clinical risk.

This section covers the patterns required for safe, scalable deployment.

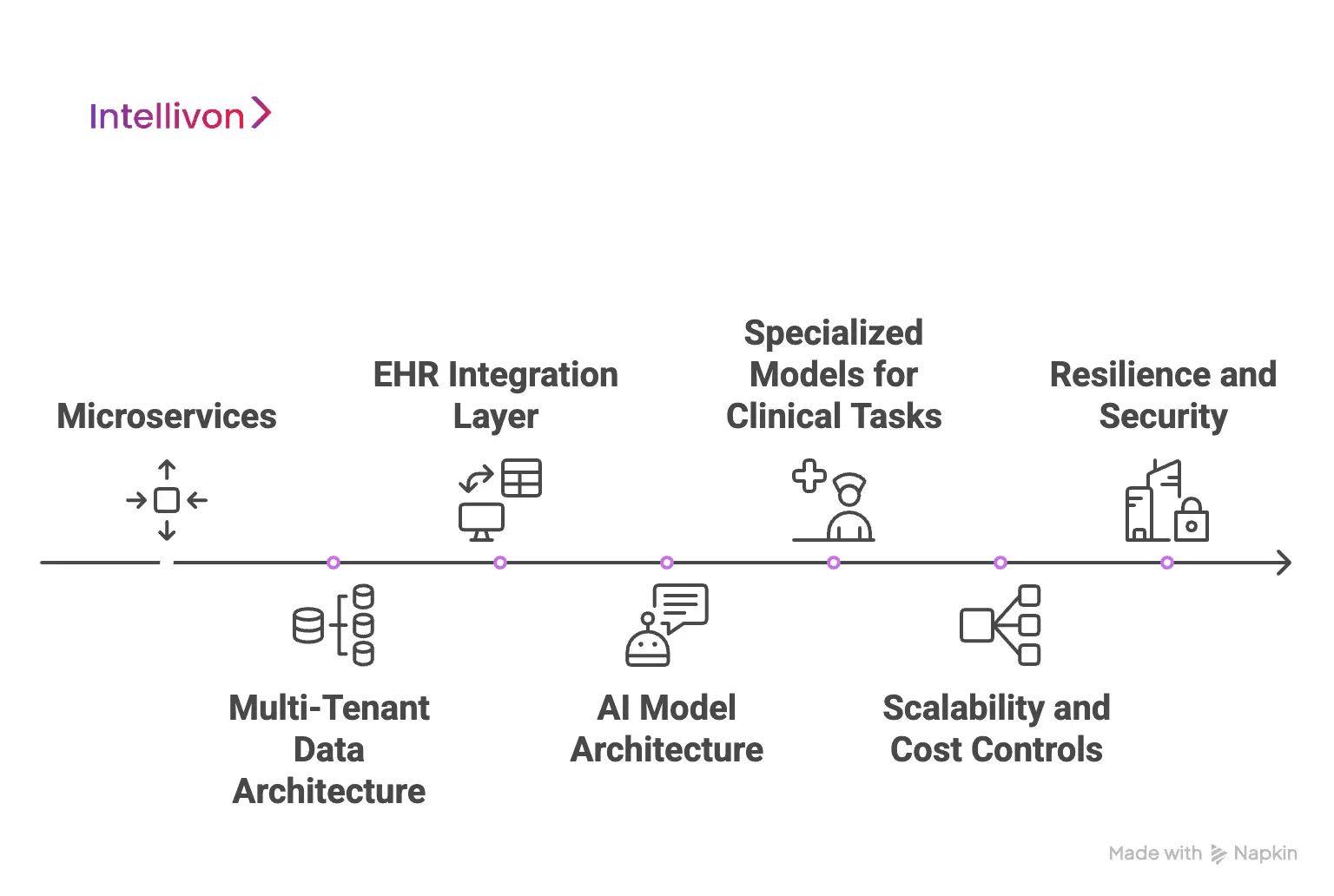

1. Microservices

Microservices let each capability scale independently. Symptom assessment and medication workflows rarely receive the same traffic. Isolated services prevent one feature from slowing the entire platform. This model adds complexity, since teams must manage tracing, service communication, and operational overhead.

A monolith still fits early-stage delivery when usage is limited and iteration speed matters. Many enterprise platforms start monolithic and split services only when clear bottlenecks appear.

2. Multi-Tenant Data Architecture

Multi-tenancy must protect isolation without breaking operational efficiency. A shared schema with a tenant identifier is simple and cost-efficient but offers weaker isolation. Separate schemas per tenant improve separation while keeping operations manageable.

Separate databases maximize isolation but increase cost and complexity. Many enterprises adopt separate schemas, while large health systems often require full database isolation for compliance confidence.
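To make the simplest option concrete, here is a minimal sketch of the shared-schema model using Python's built-in `sqlite3` module. The table layout and the `TenantScopedStore` class are hypothetical; the point is that application code never issues a query without the tenant filter, because the store object adds it on every call.

```python
import sqlite3


def make_connection():
    """Create an in-memory database with a shared, tenant-tagged table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE notes ("
        "id INTEGER PRIMARY KEY, "
        "tenant_id TEXT NOT NULL, "
        "patient_ref TEXT NOT NULL, "
        "body TEXT NOT NULL)"
    )
    return conn


class TenantScopedStore:
    """Data access bound to one tenant; every query includes tenant_id."""

    def __init__(self, conn, tenant_id):
        self._conn = conn
        self._tenant_id = tenant_id

    def add_note(self, patient_ref, body):
        self._conn.execute(
            "INSERT INTO notes (tenant_id, patient_ref, body) VALUES (?, ?, ?)",
            (self._tenant_id, patient_ref, body),
        )

    def notes_for(self, patient_ref):
        rows = self._conn.execute(
            "SELECT body FROM notes "
            "WHERE tenant_id = ? AND patient_ref = ? ORDER BY id",
            (self._tenant_id, patient_ref),
        )
        return [row[0] for row in rows]
```

Isolation here depends entirely on application discipline, which is the weakness noted above. Separate schemas or databases move that boundary into the database itself.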

3. EHR Integration Layer

EHR integration determines whether clinicians trust the system. Modern environments expose FHIR R4 APIs, while many workflows still depend on HL7 v2 messages. An abstraction layer normalizes data across vendors and protects the core application from vendor-specific behavior.

Each EHR implements standards differently, so adapters and mapping logic are unavoidable. Integration also requires ongoing maintenance because vendor APIs, rate limits, and workflows shift over time.
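The abstraction layer described above can be pictured as a set of adapters that map each source format onto one internal record. The sketch below is illustrative only: `PatientRecord` and both adapter functions are hypothetical, the FHIR input is assumed to carry a full `birthDate` and at least one name, and the HL7 v2 parsing ignores escape sequences, repetitions, and custom delimiters that real messages can contain.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class PatientRecord:
    """Internal, vendor-neutral view of a patient (hypothetical shape)."""
    patient_id: str
    family_name: str
    given_name: str
    birth_date: date


def from_fhir_patient(resource):
    """Map a FHIR R4 Patient resource (as a dict) to the internal record."""
    name = resource["name"][0]
    return PatientRecord(
        patient_id=resource["id"],
        family_name=name["family"],
        given_name=name["given"][0],
        birth_date=date.fromisoformat(resource["birthDate"]),
    )


def from_hl7v2_pid(segment):
    """Map an HL7 v2 PID segment to the internal record.

    Uses PID-3 (identifier), PID-5 (name), and PID-7 (date of birth),
    assuming the default '|' field and '^' component separators.
    """
    fields = segment.split("|")
    if fields[0] != "PID":
        raise ValueError("expected a PID segment")
    patient_id = fields[3].split("^")[0]
    family, given = fields[5].split("^")[:2]
    birth = datetime.strptime(fields[7][:8], "%Y%m%d").date()
    return PatientRecord(patient_id, family, given, birth)
```

Because both adapters return the same type, the rest of the platform can stay unaware of which interface a given health system exposes.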

4. AI Model Architecture

Enterprise assistants combine conversational AI with retrieval and safety controls. Base models may be general-purpose or healthcare-tuned, but they still require grounding for clinical use.

Retrieval-augmented generation (RAG) pulls verified medical content before responses are generated. This reduces hallucination risk and allows fast knowledge updates without retraining.

Guardrails then enforce confidence thresholds, restrict unsafe topics, and route uncertain cases to human teams when needed.
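A simplified way to see how retrieval and guardrails fit together is shown below. Everything here is a stand-in: word overlap replaces real vector search, the matched passage is returned verbatim instead of being passed to a language model, and `BLOCKED_TOPICS` and `MIN_OVERLAP` are hypothetical values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str
    text: str


BLOCKED_TOPICS = {"dosage", "overdose"}  # hypothetical restricted terms
MIN_OVERLAP = 2                          # assumed minimum retrieval score


def _tokens(text):
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(question, corpus):
    """Toy retriever: pick the passage sharing the most words with the question."""
    q = _tokens(question)
    scored = [(len(q & _tokens(p.text)), p) for p in corpus]
    best_score, best = max(scored, key=lambda pair: pair[0], default=(0, None))
    return best, best_score


def answer(question, corpus):
    """Respond only when the topic is allowed and a grounded source exists."""
    if _tokens(question) & BLOCKED_TOPICS:
        return {"action": "escalate", "reason": "restricted topic"}
    passage, score = retrieve(question, corpus)
    if passage is None or score < MIN_OVERLAP:
        return {"action": "escalate", "reason": "no grounded source"}
    # A real system would prompt the model with this passage as context.
    return {"action": "respond", "text": passage.text, "source": passage.source}
```

The ordering matters: topic restrictions run before retrieval, and the assistant refuses to answer when nothing in the verified corpus supports a response.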

5. Specialized Models for Clinical Tasks

Not every task belongs in an LLM. Symptom assessment often performs better with fine-tuned clinical language models. Risk stratification uses structured data models trained on EMR features.

Additionally, anomaly detection supports vitals monitoring from RPM devices. Specialized models improve stability and accuracy, especially for high-risk decisions and repeatable workflows.

6. Scalability and Cost Controls

Scalable platforms use load balancers, API gateways, and independently scalable services. Read replicas reduce database contention during peak traffic.

Auto-scaling reacts to latency and resource pressure to maintain performance under load. Additionally, cost control comes from matching compute to demand, using burst capacity for spikes and predictable capacity for baseline usage.

7. Resilience and Security

Resilience patterns prevent failures from spreading across the platform. Circuit breakers isolate outages from EHRs and third-party services, and graceful degradation keeps the assistant functional when live sync is unavailable.

Security architecture enforces encrypted communication, restricted network access, and role-based authentication for every user type. Monitoring detects anomalies and supports audit readiness across regulated workflows.
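The circuit breaker and graceful degradation patterns mentioned in this section can be sketched as follows. This is a minimal single-threaded version under assumed defaults (`max_failures`, `reset_after`); the `fallback` callable represents whatever degraded behavior is acceptable, such as serving cached EHR data.

```python
import time


class CircuitBreaker:
    """Stops calling a failing dependency and serves a fallback instead."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # seconds to wait before retrying
        self._clock = clock
        self._failures = 0
        self._opened_at = None          # None means the circuit is closed

    def call(self, func, fallback):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                return fallback()       # open: skip the dependency entirely
            # Half-open: allow one trial call; a single failure reopens.
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = func()
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            return fallback()
        self._failures = 0
        return result
```

Injecting the clock makes the breaker easy to test without real delays. A production version would also need thread safety and metrics so operators can see when a dependency is being bypassed.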

Hidden Complexity in Real Deployments

Real deployments surface complexities that rarely appear in design documents:

- Vendor APIs change without notice, and rate limits restrict throughput.

- AI performance shifts as patient behavior and clinical protocols evolve.

- Each health system also has workflow differences that require configuration and customization.

Architecture must absorb these realities without exposing instability to clinicians or patients.

Strong architecture determines whether an AI health assistant survives real clinical load or fails under operational pressure. At enterprise scale, reliability, safety, and integration matter more than feature velocity.

Regulations and Compliance in AI Health Assistant Apps

AI health assistants operate inside the most regulated data environment in software. Compliance requirements vary by geography, use case, and data type, which makes one-size-fits-all approaches ineffective.

Many teams underestimate the scope and timeline of compliance, only to discover late-stage blockers that force redesigns or delay launches.

1. Compliance Is Context-Dependent

Health data carries stricter obligations than general personal data. Rules change based on whether the platform supports care delivery, administration, wellness, or analytics.

Geographic deployment also matters, since regulatory expectations differ sharply between the US, EU, and individual states. Successful platforms treat compliance as a design constraint, not a checklist added after development.

2. HIPAA Requirements in the United States

HIPAA applies automatically to covered entities such as providers, payers, and clearinghouses. It also applies to business associates that handle protected health information on their behalf, which requires signed Business Associate Agreements.

Even when an organization falls outside formal HIPAA definitions, users and enterprise customers still expect HIPAA-equivalent safeguards.

From a technical standpoint, HIPAA compliance typically means encrypted data storage and transmission, role-based access controls, and detailed audit logging of all PHI access. Platforms must retain audit logs and compliance records for multiple years (HIPAA sets a six-year retention period for required documentation) and support breach notification timelines defined by regulators. Security risk assessments must be repeated regularly, commonly on an annual cycle, and must be documented and defensible during audits.
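One common way to make audit logs tamper-evident is to chain each entry to the previous one with a hash. The sketch below shows the idea in memory only; the `AuditLog` class and its field names are hypothetical, and HIPAA does not prescribe this specific technique. A real deployment would write to append-only storage and protect the log itself with access controls.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(body):
    """Stable SHA-256 over the entry's fields (excluding its own hash)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the one before it."""

    def __init__(self):
        self._entries = []

    def record(self, actor, action, resource, timestamp):
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS
        body = {
            "actor": actor,
            "action": action,
            "resource": resource,
            "timestamp": timestamp,
            "prev_hash": prev_hash,
        }
        self._entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev = GENESIS
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True
```

Editing any earlier entry changes its hash, which breaks every link after it, so `verify()` fails for the whole chain.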

3. Business Associate Agreements

BAAs are required with cloud providers, AI vendors, and monitoring tools that may access PHI. These agreements define data ownership, breach responsibilities, audit rights, and subcontractor obligations.

A common failure point is discovering that a critical SaaS vendor does not support BAAs, forcing late-stage architectural changes.

4. GDPR Obligations in Europe

GDPR introduces stricter consent and data rights than HIPAA. Consent must be explicit, revocable, and purpose-specific. Individuals have the right to access, port, and delete their data, which complicates clinical data retention strategies.

Data processing agreements are required with all vendors, and high-risk processing demands formal impact assessments.

Cross-border data transfer adds further complexity. EU health data generally cannot leave approved regions without safeguards. Legal uncertainty following Schrems II has pushed many enterprises toward separate regional deployments for EU and US operations.
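The consent properties described in this section (explicit, revocable, purpose-specific) translate naturally into a small data model. The sketch below is an assumption-laden illustration: `ConsentRegistry` and its methods are hypothetical, storage is in memory, and legal bases other than consent are out of scope.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ConsentRecord:
    purpose: str
    granted_at: datetime
    revoked_at: Optional[datetime] = None


class ConsentRegistry:
    """Tracks consent per (subject, purpose); absence means no consent."""

    def __init__(self):
        self._records = {}  # (subject_id, purpose) -> ConsentRecord

    def grant(self, subject_id, purpose):
        # Consent is recorded per purpose, never as a blanket flag.
        self._records[(subject_id, purpose)] = ConsentRecord(
            purpose, datetime.now(timezone.utc)
        )

    def revoke(self, subject_id, purpose):
        record = self._records.get((subject_id, purpose))
        if record is not None and record.revoked_at is None:
            # Keep the record so the grant/revoke history stays auditable.
            record.revoked_at = datetime.now(timezone.utc)

    def is_permitted(self, subject_id, purpose):
        record = self._records.get((subject_id, purpose))
        return record is not None and record.revoked_at is None
```

Processing code would call `is_permitted` before each use of the data, so a revocation takes effect immediately and consent for one purpose never leaks into another.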

5. State-Level Privacy and Telehealth Laws

In the US, states such as California impose additional privacy obligations through laws like CCPA and CPRA. These grant rights around data access, deletion, and sale, with enforcement mechanisms that include private lawsuits for breaches.

Telehealth regulations also vary by state, affecting licensing, prescribing, and reimbursement models. This patchwork increases operational complexity for national platforms.

6. FDA Considerations

FDA oversight becomes relevant when AI systems diagnose or recommend treatment. Informational tools often remain exempt, but symptom checkers that suggest specific actions fall into a gray area.

Conservative interpretation and early legal guidance help avoid unexpected regulatory exposure.

In AI health assistants, compliance shapes architecture, workflows, and vendor choices. Platforms that treat regulation as a foundational requirement avoid rework and earn long-term enterprise trust.

How Intellivon Develops AI Health Assistant Apps

Enterprise AI health assistants fail when teams treat them like chatbot projects. Intellivon approaches them as clinical infrastructure that must be safe, auditable, and usable inside real workflows.

The process below is designed to reduce clinical risk, prevent integration surprises, and deliver measurable outcomes at scale.

Step 1: Discovery and Clinical Requirements

The work starts with stakeholder alignment across clinical, IT, legal, compliance, and operations. Current workflows are mapped in detail, including escalation paths and documentation requirements.

Priority use cases are selected based on safety, feasibility, and operational impact. Success metrics are defined early, so value can be proven during pilots.

Step 2: Architecture and Compliance Design

Intellivon designs the platform architecture alongside the compliance framework. Data flows, access controls, audit logging, and tenant isolation are defined before feature development begins.

Integration scope is validated against EHR realities, not assumptions. Clinical governance is also formalized, including who approves content, thresholds, and handoff protocols.

Step 3: Clinical UX and Conversation Design

Conversation flows are built around how patients describe symptoms and how clinicians triage risk. Prompting is structured to collect the minimum required information without creating user fatigue.

Response formats are defined for clinical safety, including uncertainty handling and escalation cues. Language and tone are standardized to reduce variability across interactions.

Step 4: Model Strategy and Safety Controls

Model selection is based on the clinical task, not popularity. Intellivon combines conversational AI with retrieval systems to ground responses in verified sources.

Confidence scoring controls what the assistant can say, when it must hedge, and when it must escalate. Guardrails restrict high-risk content and enforce safe defaults across edge cases.

Step 5: Integration, Build, and Data Normalization

Integration work runs as a core stream, not a final-phase activity. FHIR and HL7 connectivity is implemented with vendor-specific adapters where needed. Data normalization ensures that problem lists, medications, allergies, and encounters remain consistent across systems.

Degraded modes are designed so workflows continue even when external systems slow down.

Step 6: Clinical Validation and Oversight

Validation uses structured test cases that reflect real presentations and operational workflows. Outputs are reviewed against clinician expectations, triage safety, and documentation quality.

Edge cases are cataloged and used to refine prompts, guardrails, and escalation logic. A medical review loop is established so safety governance continues post-launch.

Step 7: Security Testing and Compliance Readiness

Security controls are tested through penetration testing, access reviews, and audit trail verification. Vendor contracts and BAAs are finalized alongside deployment architecture.

Compliance evidence is documented in a format enterprises can defend during audits. Logging and alerting are configured for ongoing risk monitoring.

Step 8: Pilot Deployment and Scale Rollout

Deployment begins with a controlled pilot across a defined population and workflow. KPIs are tracked weekly, including deflection, escalation rates, clinical safety flags, and satisfaction signals.

Feedback is incorporated through short release cycles. Scale rollout only occurs after clinical and operational acceptance is achieved.
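The pilot KPIs named above can be computed from simple per-conversation flags. The sketch below assumes a hypothetical `Conversation` record; how each flag is derived (for example, what counts as "resolved by AI") is a definition the pilot team would need to agree on up front.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conversation:
    resolved_by_ai: bool  # closed without human involvement
    escalated: bool       # handed off to a clinician or agent
    safety_flag: bool     # flagged for medical review


def pilot_kpis(conversations):
    """Return deflection, escalation, and safety-flag rates for one period."""
    total = len(conversations)
    if total == 0:
        return {"deflection_rate": 0.0, "escalation_rate": 0.0, "safety_flag_rate": 0.0}
    return {
        "deflection_rate": sum(c.resolved_by_ai for c in conversations) / total,
        "escalation_rate": sum(c.escalated for c in conversations) / total,
        "safety_flag_rate": sum(c.safety_flag for c in conversations) / total,
    }
```

Tracking these as rates rather than raw counts keeps weekly figures comparable as pilot volume grows.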

Intellivon reduces risk by treating clinical validation, integration, and compliance as first-class engineering requirements. This process produces AI health assistants that clinicians adopt, patients trust, and enterprises can operate at scale.

What Does It Cost to Build an Enterprise AI Health Assistant App?

For healthcare enterprises starting with one or two clearly defined, high-impact use cases, an AI health assistant can be built within a controlled, enterprise-ready budget of USD 50,000 to 150,000.

At Intellivon, we structure AI health assistant cost models around leadership budget cycles, regulatory exposure, and near-term operational ROI.

Rather than launching a broad, all-journey assistant, we focus on building a use-case-specific clinical intelligence core. This core integrates cleanly with existing systems and is designed to scale safely as adoption grows. The result is predictable spending and faster validation of business value.

Estimated Phase-Wise Cost Breakdown

| Phase | Description | Estimated Cost (USD) |
| --- | --- | --- |
| Discovery & Use-Case Definition | Clinical workflow mapping, risk assessment, integration review, and success metrics definition | 6,000 – 12,000 |
| Architecture & Platform Blueprint | Core platform design, data flows, security, and compliance foundations | 8,000 – 18,000 |
| Core AI Health Assistant Development | Conversational flows, triage logic, basic dashboards | 15,000 – 35,000 |
| Data Ingestion & Integrations | Patient inputs, limited EHR data access, third-party health APIs | 8,000 – 20,000 |
| AI Intelligence & Safety Layer | NLP logic, confidence scoring, escalation rules, guardrails | 10,000 – 25,000 |
| Security & Compliance Controls | Encryption, role-based access, consent management, and audit logs | 6,000 – 15,000 |
| Testing & Pilot Deployment | Functional testing, security checks, controlled rollout | 5,000 – 12,000 |

Total Initial Investment Range:

USD 50,000 – 150,000

This range supports a secure, enterprise-grade AI health assistant deployed for a focused use case, operating in a live environment, and aligned with healthcare compliance expectations.

Annual Maintenance and Optimization

Ongoing costs include infrastructure management, integration upkeep, security monitoring, and incremental model tuning. These remain predictable when governance and safety logic are designed correctly.

- 12–18% of the initial build cost annually

- Approx. USD 6,000 – 25,000 per year
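For budgeting, it can help to check the arithmetic behind these ranges directly. The short script below uses only figures quoted in this section; the function names are illustrative, and integer math is used to avoid rounding noise.

```python
# Phase estimates in USD, copied from the phase-wise breakdown above.
PHASES = {
    "Discovery & Use-Case Definition": (6_000, 12_000),
    "Architecture & Platform Blueprint": (8_000, 18_000),
    "Core AI Health Assistant Development": (15_000, 35_000),
    "Data Ingestion & Integrations": (8_000, 20_000),
    "AI Intelligence & Safety Layer": (10_000, 25_000),
    "Security & Compliance Controls": (6_000, 15_000),
    "Testing & Pilot Deployment": (5_000, 12_000),
}


def phase_total(phases):
    """Sum the low and high ends of every phase estimate."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high


def maintenance_range(build_low, build_high, pct_low=12, pct_high=18):
    """Apply the annual maintenance percentage band to the build range."""
    return build_low * pct_low // 100, build_high * pct_high // 100


print(phase_total(PHASES))                 # (58000, 137000)
print(maintenance_range(50_000, 150_000))  # (6000, 27000)
```

The phase rows sum to USD 58,000 to 137,000, which sits inside the quoted USD 50,000 to 150,000 range. Applying 12–18% to that quoted range gives roughly USD 6,000 to 27,000 per year, so the approximate upper figure above is slightly conservative for a build at the top of the range.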

Hidden Costs Enterprises Should Plan For

- Expanding into additional clinical workflows or conditions

- Adding new EHR endpoints, devices, or third-party data sources

- Regulatory updates across regions or care models

- Increased cloud usage from higher interaction volume

- Periodic AI validation, retraining, and safety reviews

- Change management and internal enablement as adoption scales

Planning for these early prevents budget spikes and protects long-term stability.

Best Practices to Stay Within the USD 50K–150K Range

- Start with one clearly defined, high-impact clinical use case

- Avoid multi-region and multi-regulation complexity in phase one

- Use modular architecture to support controlled expansion

- Embed privacy, consent, and auditability from day one

- Review operational impact and safety metrics within 90 days

This approach ensures value is proven before broader capital deployment.

Talk to Intellivon’s healthcare platform architects to receive a phased cost estimate aligned with your AI health assistant roadmap, compliance environment, and near-term operational goals. Our team helps enterprises validate scope, control risk, and plan expansion without committing capital before clinical and business value is proven.

Overcoming Challenges in Building AI Health Assistant Apps

Every enterprise AI health assistant initiative encounters predictable challenges. The difference between success and failure is not avoiding them. It is addressing them early, before they affect timelines, budgets, or clinical safety.

Below are the challenges we consistently see and how Intellivon solves each in practice.

Challenge 1: AI Hallucination in Medical Contexts

LLMs can generate confident but incorrect medical responses. In healthcare, this can cause real patient harm and direct organizational liability.

To address this risk at the foundation, Intellivon grounds responses using retrieval-augmented generation tied to verified medical sources. Confidence scoring controls output visibility, with low-confidence cases routed to human clinicians.

Clinical guardrails restrict high-risk topics, while physician-reviewed fallback content handles common questions. Flagged conversations undergo regular medical review.

Challenge 2: EHR Integration Complexity

Once safety is addressed, integration becomes the next constraint. EHRs implement standards inconsistently, APIs change, and rate limits apply. Solutions that work in testing often fail in production.

To prevent integration friction from stalling adoption, we build abstraction layers that isolate EHR-specific logic from the core platform. Support spans FHIR, HL7 v2, and custom adapters.

Cached data enables graceful degradation during outages. Integration timelines are planned realistically, with integration treated as a primary workstream.

Challenge 3: Clinical Adoption Resistance

Even with safe, integrated systems, adoption is not guaranteed. Physicians remain skeptical of AI tools that add friction or duplicate documentation.

This is where workflow alignment becomes decisive. Clinical workflows are co-designed with physicians from the start. Assistants integrate directly into existing systems rather than operating as standalone tools.

Early use cases focus on real pain points, with clear limits on what the AI can and cannot do. Feedback loops remain active throughout development.

Challenge 4: Regulatory Uncertainty

As adoption grows, regulatory questions intensify. Teams struggle with HIPAA applicability, GDPR obligations, and potential FDA oversight. Guidance often evolves mid-project.

To reduce regulatory exposure, Intellivon applies conservative interpretations from day one and partners with healthcare regulatory counsel early. Decisions are documented as part of an audit trail.

Regulatory pathways are defined before architecture is finalized, with designs supporting multiple jurisdictions.

Challenge 5: Data Privacy Across Jurisdictions

Regulatory complexity increases further when platforms span regions. Data residency rules differ, and consent requirements vary by state and country.

To manage this variability, platforms support regional data residency, configurable consent management, and encryption at every layer. Immutable audit trails provide visibility into all data access for compliance verification.
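A common way to make an audit trail tamper-evident is to chain entries by hash, so that each record commits to the one before it. The sketch below illustrates that idea under stated assumptions: the `AuditTrail` class and its field names are hypothetical, timestamps are omitted for brevity, and true immutability would still depend on write-once storage underneath.

```python
import hashlib
import json


class AuditTrail:
    """Append-only log where each entry includes the hash of the previous one."""

    GENESIS = "0" * 64

    def __init__(self):
        self._entries: list[dict] = []

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        payload = json.dumps(event, sort_keys=True) + prev_hash
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, actor: str, action: str, resource: str) -> dict:
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        event = {"actor": actor, "action": action, "resource": resource}
        entry = {"event": event, "prev_hash": prev_hash,
                 "hash": self._digest(prev_hash, event)}
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; an altered entry no longer matches its stored hash."""
        prev_hash = self.GENESIS
        for entry in self._entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True
```

A compliance check can call `verify()` on a schedule. If someone edits an earlier record, the recomputed hash stops matching and the check fails.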

Challenge 6: Algorithmic Bias

As usage scales, performance disparities emerge. AI trained on narrow datasets performs inconsistently across populations, creating equity and legal risk.

To address this systematically, training data is diversified intentionally. Performance is monitored across demographic segments. Disparities trigger clinical review and retraining priorities rather than post-hoc explanations.
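Segment-level monitoring can start as a simple comparison of accuracy across groups. The sketch below assumes clinician-labelled outcomes are available as `(segment, was_correct)` pairs; the function names and the 0.05 gap tolerance are illustrative choices, not a clinical standard.

```python
from collections import defaultdict

# Hypothetical tolerance: the largest accuracy gap accepted between a segment
# and the best-performing segment before a review is triggered.
MAX_GAP = 0.05


def accuracy_by_segment(records):
    """records: iterable of (segment, was_correct) pairs from labelled reviews."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for segment, was_correct in records:
        totals[segment] += 1
        correct[segment] += int(was_correct)
    return {seg: correct[seg] / totals[seg] for seg in totals}


def segments_needing_review(records, max_gap=MAX_GAP):
    """Return segments whose accuracy trails the best segment by more than max_gap."""
    scores = accuracy_by_segment(records)
    if not scores:
        return []
    best = max(scores.values())
    return sorted(seg for seg, acc in scores.items() if best - acc > max_gap)
```

In practice, small segments produce noisy accuracy estimates, so a real pipeline would likely add minimum sample sizes or confidence intervals before flagging a disparity.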

Challenge 7: Maintaining Clinical Accuracy Over Time

Over time, even well-validated systems degrade. Medical knowledge evolves, workflows change, and data patterns shift.

Intellivon conducts continuous monitoring to track safety, accuracy, and latency. Retraining pipelines incorporate new guidelines, with A/B testing validating changes before rollout. Clinical governance remains active post-deployment.
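As a rough illustration of what continuous monitoring can look like, the sketch below keeps a rolling window of recent interactions and raises alerts when accuracy or latency crosses a threshold. The `RollingMonitor` class, window size, and thresholds are assumptions for the example; real values would come from clinical governance and service-level targets.

```python
from collections import deque


class RollingMonitor:
    """Tracks accuracy and latency over the most recent `window` interactions."""

    def __init__(self, window=500, min_accuracy=0.95, max_latency_ms=2000.0):
        self._outcomes = deque(maxlen=window)    # True when clinically correct
        self._latencies = deque(maxlen=window)   # milliseconds
        self.min_accuracy = min_accuracy
        self.max_latency_ms = max_latency_ms

    def record(self, correct: bool, latency_ms: float) -> None:
        self._outcomes.append(correct)
        self._latencies.append(latency_ms)

    def alerts(self) -> list[str]:
        """Return the names of any thresholds breached in the current window."""
        if not self._outcomes:
            return []
        found = []
        accuracy = sum(self._outcomes) / len(self._outcomes)
        if accuracy < self.min_accuracy:
            found.append("accuracy_below_threshold")
        mean_latency = sum(self._latencies) / len(self._latencies)
        if mean_latency > self.max_latency_ms:
            found.append("latency_above_threshold")
        return found
```

Because the window drops old interactions automatically, a sustained decline shows up quickly instead of being averaged away by months of earlier good results.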

Solving these challenges requires experience across clinical, technical, and regulatory domains. When addressed in sequence rather than in isolation, they become manageable instead of compounding.

Top Examples of AI Health Assistant Apps

The AI health assistant category includes both consumer-facing tools and enterprise-aligned platforms. Studying how leading products work, where they succeed, and how they monetize helps enterprises understand which models scale safely in regulated environments.

1. Ada Health

Ada Health is a conversational symptom assessment platform that guides users through structured medical questioning. It applies clinical logic to narrow possible conditions and suggest next steps. The system relies on physician-reviewed medical knowledge and probabilistic reasoning rather than free-form chat.

Monetization: Ada monetizes primarily through B2B licensing with employers, insurers, and health systems, rather than consumer subscriptions.

2. Babylon Health

Babylon Health combined AI-driven triage with access to licensed clinicians through virtual consultations. The assistant gathered symptoms, assessed urgency, and routed users to digital or in-person care, with human clinicians embedded in the workflow. The company entered insolvency in 2023, and its UK business was acquired by eMed Healthcare.

Monetization: Revenue came from payer contracts, government partnerships, and subscription-based access to virtual primary care services.

3. Buoy Health

Buoy Health focuses on symptom checking and care navigation rather than diagnosis. It helps users determine the most appropriate care setting based on symptoms and context. The platform emphasizes utilization management and access optimization.

Monetization: Buoy operates on an enterprise SaaS model, licensing its assistant to health systems and payers to reduce unnecessary utilization.

4. K Health

K Health uses large-scale clinical datasets to support AI-assisted primary care and pediatric guidance. Users interact with an assistant that analyzes symptom patterns and provides next-step recommendations, with clinician access available when needed.

Monetization: The platform combines subscription fees with telehealth visit revenue and employer-sponsored care programs.

5. Woebot

Woebot delivers mental health support using structured cognitive behavioral therapy techniques. The assistant guides users through evidence-based exercises while monitoring engagement and risk signals. Human care escalation is built into the model.

Monetization: Woebot monetizes through enterprise licensing to employers, health plans, and providers rather than direct consumer billing.

Successful AI health assistants align clinical scope, workflow integration, and monetization strategy from the start. Enterprise-ready platforms prioritize safety, validation, and operational fit over broad consumer reach.

Conclusion

AI health assistants are becoming core infrastructure for healthcare organizations facing rising demand, limited clinical capacity, and tighter regulatory scrutiny. Enterprises that succeed prioritize clinical accuracy, workflow integration, and governance before feature expansion.

They invest early in validation, architecture, and change management rather than retrofitting safety later. When built deliberately, AI health assistants extend care capacity, improve outcomes, and create durable operational advantages. Execution, not ambition, ultimately determines impact.

Build An AI Health Assistant App With Intellivon

At Intellivon, we build AI health assistant platforms as clinical and operational infrastructure, not chatbots or isolated automation tools. Our platforms are designed to support triage, follow-ups, preventive workflows, and condition-specific journeys within a single governed system. Each solution integrates cleanly with existing EHRs, enterprise systems, and care delivery models.

Every platform is engineered for enterprise healthcare environments. Architecture comes first. Compliance is embedded by design. Model performance improves over time as engagement grows. As adoption scales across populations, conditions, or regions, performance, governance, and safety remain predictable. This enables healthcare organizations to deliver measurable clinical, operational, and financial outcomes without increasing risk.

Why Partner With Intellivon?

- Enterprise-first platform design aligned with real clinical workflows, care coordination models, and operational accountability

- Deep interoperability expertise across EHRs, care management systems, health APIs, and secure enterprise integration layers

- Compliance-by-design architecture supporting HIPAA, GDPR, consent management, auditability, and AI governance requirements

- AI-enabled orchestration and personalization for triage, proactive nudging, escalation control, and outcome tracking

- Scalable, cloud-native delivery with phased rollout, controlled expansion, and continuous optimization as usage grows

Book a strategy call to explore how an AI health assistant platform can extend clinical capacity, reduce operational strain, and scale safely across your enterprise, with Intellivon as your long-term delivery partner.

FAQs

Q1. What is an AI health assistant app?

A1. An AI health assistant app is a clinical support platform that uses NLP, machine learning, and decision logic to triage patients, guide care, manage follow-ups, and support preventive workflows. In enterprise healthcare, these apps integrate with EHRs, comply with regulations, and operate under clinical governance rather than acting as standalone chatbots.

Q2. How is an enterprise AI health assistant different from a symptom checker?

A2. Symptom checkers provide informational guidance with disclaimers. Enterprise AI health assistants are clinically validated, integrated into care workflows, governed by compliance rules, and designed to escalate safely to human clinicians. The difference lies in accountability, integration depth, and operational use inside healthcare systems.

Q3. How much does it cost to build an AI health assistant app?

A3. A focused, enterprise-ready AI health assistant can be built within a budget of USD 50,000 to 150,000 when scoped deliberately. Costs depend on use-case complexity, integration depth, compliance requirements, and how gradually the platform is rolled out, rather than on feature count alone.

Q4. Are AI health assistant apps compliant with HIPAA and GDPR?

A4. They can be, but only when compliance is designed into the architecture. Enterprise AI health assistants require encryption, access controls, audit logs, consent management, data residency options, and vendor agreements. Compliance cannot be added later without significant rework.

Q5. What are the biggest challenges in building AI health assistant apps?

A5. Common challenges include AI hallucination risk, EHR integration complexity, clinical adoption resistance, regulatory uncertainty, data privacy across regions, and model bias. Successful platforms address these upfront through architecture, clinical validation, governance, and phased deployment strategies.