A recent analysis of more than 20,000 children’s apps revealed that over 81% contain embedded trackers, quietly collecting or transmitting data that most parents never agreed to. When a learning platform handles a child’s name, progress patterns, voice recordings, or in-app behaviour, even a small oversight can expose highly sensitive information.

The Children’s Online Privacy Protection Act (COPPA) was designed to stop exactly these situations by enforcing parental consent, transparency, and strict limits on what information apps and platforms can collect and share.

At Intellivon, we have spent years delivering enterprise-grade children’s learning platforms that combine COPPA governance, secure architecture, and global readiness. In this blog, we’ll explain why COPPA is critical for modern kids’ learning apps and walk you through how we design apps that keep safety, compliance, and long-term scalability at the centre of every decision.

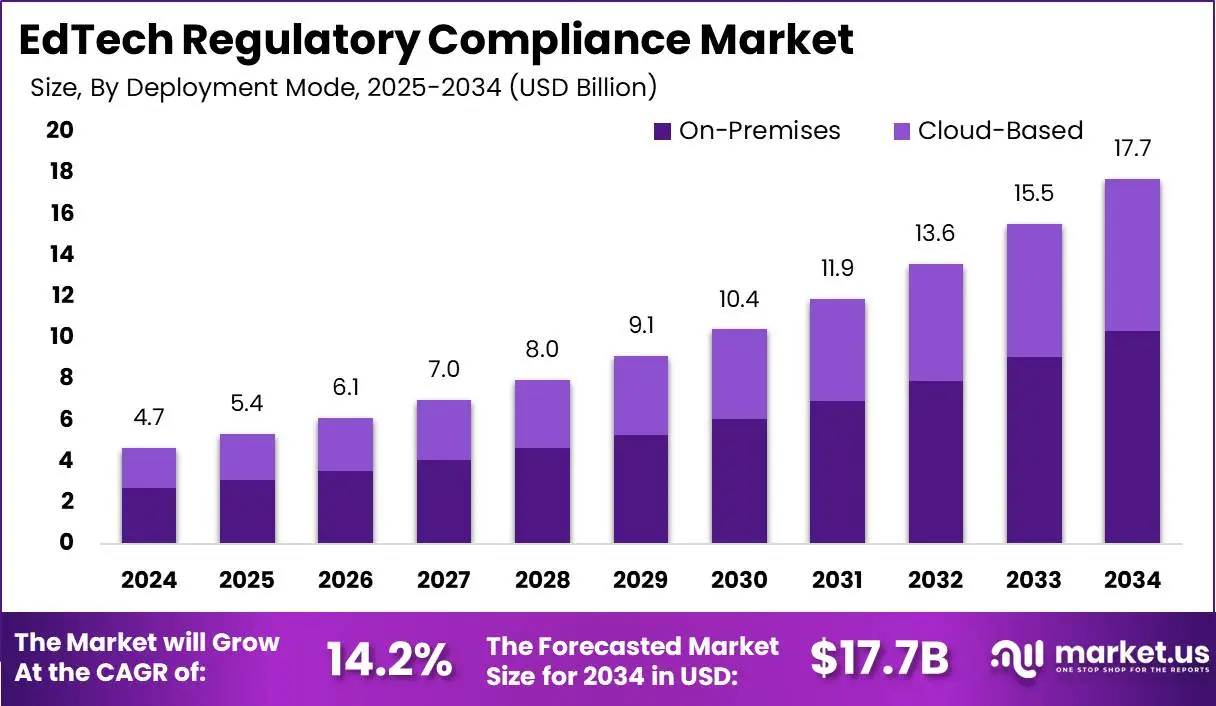

Key Takeaways of the Global EdTech Regulatory Compliance Market

The global EdTech regulatory compliance market is expanding rapidly. It is projected to reach USD 17.7 billion by 2034, up from USD 4.7 billion in 2024, growing at a 14.2% CAGR. North America leads with 42% market share, generating USD 1.9 billion in 2024.

Key Trends

- Broader digital learning adoption is also accelerating, with the U.S. market expected to hit USD 300 billion by 2033. Mobile learning, which is one of the primary channels for kids’ learning apps, will reach USD 110.42 billion by 2025.

- Regulators have increased enforcement. The FTC updated COPPA rules in April 2025 to restrict data retention, require explicit opt-in for targeted ads, and demand higher transparency from vendors.

- Schools and districts are prioritizing platforms that emphasize data minimization, transparency, and parental or institutional consent mechanisms.

- There is a market shift toward privacy-first alternatives and privacy-focused audit tools, with many schools preferring vendors who provide clear data handling and deletion practices.

- Deployment preference remains with on-premises solutions for higher control and security (over 58% of compliance tool deployments in 2024), though cloud adoption is growing due to scalability and accessibility.

Business Impact

- Noncompliance risks are severe: the FTC can seek substantial fines for each violation of a child’s privacy, and recent enforcement actions have resulted in multi-million-dollar penalties and suspended contracts.

- Successful platforms build market share by integrating privacy compliance into their core value proposition, fostering trust with both educational buyers and parents.

What Is a COPPA-Compliant Kids’ Learning App?

A COPPA-safe kids’ learning app creates a protected space where children can interact without unnecessary data exposure. It limits what information enters the system, gives parents full visibility, and follows strict rules for storage, access, and consent. Enterprises rely on this framework to meet compliance expectations and build long-term trust.

A COPPA-safe app focuses on privacy, transparency, and strong governance. It treats children’s data with the same discipline used in regulated industries while keeping the learning experience engaging and easy to use. This balance is why schools, districts, and families increasingly prefer platforms built with COPPA safety at the core.

Types of Data Legally Allowed Under COPPA

COPPA permits only the data needed to support core learning functions. Every data point must have a clear purpose, be covered by verifiable parental consent, and avoid profiling or behavioural tracking. Enterprises benefit from this narrow scope because it simplifies data flows, reduces exposure, and supports long-term compliance.

COPPA limits what children’s apps can collect. This restriction ensures that learning experiences remain safe, predictable, and free from unnecessary data extraction.

1. Basic Account Information

COPPA allows limited account details, such as a parent’s email, a username, and an age range. These fields enable login and basic personalisation without relying on identifiers that reveal a child’s identity.

2. Learning Progress and Activity Data

Apps may collect progress data to support lessons, rewards, and adaptive learning. This includes completed tasks, quiz scores, and time spent on activities. These insights must remain inside the platform and cannot be used for advertising or external analysis.

3. Device and Operational Data

Technical information such as device type, crash logs, and app version is allowed. These fields support troubleshooting and performance improvement, but cannot be used to track a child across services.

4. Parent-Initiated Communication

If parents contact support, the platform may collect the information needed to resolve the issue. This data must stay within the support workflow and cannot be repurposed.

5. Sensitive Identifiers (Excluded by Default)

Sensitive data, such as photos, GPS location, and audio recordings, requires explicit parental approval and a clear functional purpose. Most COPPA-safe platforms avoid these fields entirely to reduce risk.

Following COPPA’s narrow data rules helps enterprises build trust with families and educators. A lean data model reduces operational burden, strengthens compliance, and supports safer learning environments at scale.

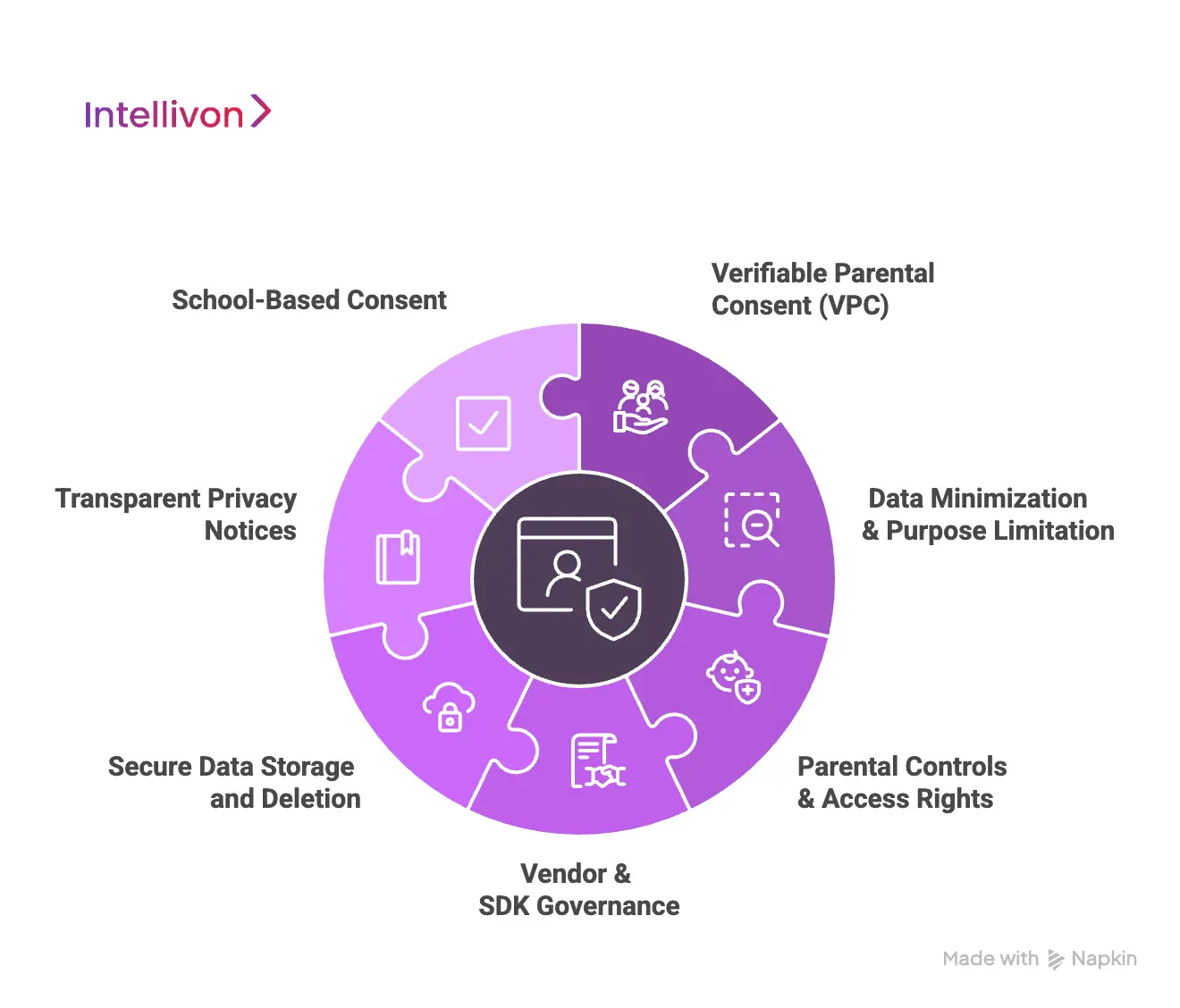

Key Requirements In Apps for COPPA Compliance in 2025

COPPA compliance in 2025 requires clear parental consent workflows, strict data minimization, secure storage, transparent notices, and full governance of SDKs and vendors. Learning apps must verify consent, limit identifiers, protect storage systems, and document every data pathway to avoid violations and maintain trust with families and schools.

Enterprises that approach COPPA as an architectural framework, and not a checklist, end up with platforms that scale cleanly, win school-district approvals faster, and avoid expensive remediation efforts later.

1. Verifiable Parental Consent (VPC)

VPC is the foundation of COPPA and carries the most scrutiny in audits. A compliant platform must verify that the individual granting consent is the actual parent or legal guardian. Common methods include government-ID checks, credit-card verification, knowledge-based authentication, and school-admin-approved consent flows.

Learning platforms often struggle here because fragmented workflows, outdated UI patterns, or unsupported geographies weaken verification strength. Enterprises should adopt a centralised consent engine that logs every approval, timestamp, revocation, and data-use change. This creates an auditable history that protects both the organisation and the family.
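
As a rough illustration of the consent engine described above, the sketch below keeps an append-only log of approvals and revocations and derives the current consent state from it. The class names, the `method` labels, and the `scope` values are hypothetical, and a production system would persist the log rather than hold it in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentEvent:
    child_id: str      # internal pseudonymous ID, not a name
    action: str        # "granted" or "revoked"
    method: str        # hypothetical label, e.g. "card_verification"
    scope: frozenset   # data uses the parent approved
    at: datetime       # UTC timestamp of the event


@dataclass
class ConsentLedger:
    """Append-only log; current state is derived, never overwritten."""
    events: list = field(default_factory=list)

    def record(self, child_id, action, method, scope=()):
        if action not in ("granted", "revoked"):
            raise ValueError(f"unknown action: {action}")
        event = ConsentEvent(child_id, action, method, frozenset(scope),
                             datetime.now(timezone.utc))
        self.events.append(event)
        return event

    def allows(self, child_id, data_use):
        """True only if the latest event for this child grants data_use."""
        for event in reversed(self.events):
            if event.child_id == child_id:
                return event.action == "granted" and data_use in event.scope
        return False  # no record means no consent

    def history(self, child_id):
        """Full audit trail for one child, oldest first."""
        return [e for e in self.events if e.child_id == child_id]
```

Because nothing is ever overwritten, `history()` can be handed to an auditor as-is, while `allows()` gives feature code a single yes-or-no answer before it touches any data.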

2. Data Minimization & Purpose Limitation

COPPA expects learning apps to collect only what they need, not what is convenient. That means no unnecessary identifiers, behavioural patterns, or device data. AI features that rely on voice, progress analytics, or personalization must state their purpose clearly and use anonymized or aggregated outputs wherever possible.

Executives should ensure their architecture separates required learning data from optional analytics. By reducing the default scope, you shrink the risk surface and simplify ongoing compliance.
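
One way to picture this separation is an allowlist applied before anything is stored. The sketch below is a minimal example under assumed field names; the two field sets are hypothetical and would normally come from the product's own data map.

```python
# Hypothetical field lists; a real product would derive these from its data map.
REQUIRED_LEARNING_FIELDS = {"child_id", "lesson_id", "score", "completed_at"}
OPTIONAL_ANALYTICS_FIELDS = {"time_on_task_seconds", "hints_used"}


def minimize(event: dict, analytics_consented: bool) -> dict:
    """Keep only allowlisted fields; everything else is dropped by default."""
    allowed = set(REQUIRED_LEARNING_FIELDS)
    if analytics_consented:
        allowed |= OPTIONAL_ANALYTICS_FIELDS
    return {key: value for key, value in event.items() if key in allowed}
```

The design choice here is that new fields are excluded until someone deliberately adds them to a list, which keeps the default scope small as features grow.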

3. Parental Controls & Access Rights

Parents have the right to see what an app collects, request changes, and delete their child’s information. A compliant platform needs a dashboard that makes these actions simple and traceable.

Deletion workflows often create operational issues in enterprises because they touch multiple storage layers. A strong system coordinates deletion requests across databases, caches, logs, and integrated services, ensuring there are no residual copies that could violate a future audit.
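
A coordinated deletion request can be sketched as a fan-out over every registered storage layer, with a report that shows which layers succeeded. The store names and the `Deleter` signature below are assumptions for illustration, not a description of any specific system.

```python
from typing import Callable, Dict

# Each store registers a function that deletes one child's data and returns
# how many records it removed. Store names are placeholders.
Deleter = Callable[[str], int]


def delete_everywhere(child_id: str, stores: Dict[str, Deleter]) -> dict:
    """Run every registered deleter and report per-store results."""
    report = {"child_id": child_id, "removed": {}, "failed": {}}
    for name, deleter in stores.items():
        try:
            report["removed"][name] = deleter(child_id)
        except Exception as exc:
            # Keep going so one outage cannot hide the state of the others.
            report["failed"][name] = repr(exc)
    report["complete"] = not report["failed"]
    return report
```

A request would only be closed when `complete` is true; any entry under `failed` is a candidate for retry, and the report itself doubles as evidence for a later audit.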

4. Vendor & SDK Governance

This is where most COPPA breaches occur. Many learning apps rely on tracking SDKs, analytics libraries, or advertising tools that quietly transmit data to third parties. Even one unvetted SDK can break compliance and expose the organisation to penalties.

A COPPA-ready organisation maintains an inventory of all third-party components, runs static analysis on every build, and enforces strict policies around tag managers, SDKs, and server-side tracking. Vendor contracts should explicitly restrict data reuse and require timely reporting of breaches.
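
The inventory idea can be approximated with a simple build-time check that compares an app's declared dependencies against a reviewed list. The SDK names, versions, and the `sends_identifiers` flag below are invented for the example; this is a sketch of the gate, not a replacement for static analysis.

```python
# Hypothetical inventory: SDK name -> outcome recorded by the privacy review.
APPROVED_SDKS = {
    "crash-reporter": {"version": "4.2.0", "sends_identifiers": False},
    "payments": {"version": "1.9.3", "sends_identifiers": False},
}


def check_build(dependencies: dict) -> list:
    """Return human-readable problems; an empty list means the build may ship."""
    problems = []
    for name, version in sorted(dependencies.items()):
        entry = APPROVED_SDKS.get(name)
        if entry is None:
            problems.append(f"{name}: not in the reviewed inventory")
        elif entry["version"] != version:
            problems.append(f"{name}: {version} differs from reviewed {entry['version']}")
        elif entry["sends_identifiers"]:
            problems.append(f"{name}: transmits identifiers")
    return problems
```

Pinning the reviewed version matters because an SDK update can change what it collects; a version mismatch forces a fresh review instead of passing silently.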

5. Secure Data Storage and Deletion

Data protection must extend beyond operational databases. COPPA expects encryption at rest, encryption during transfer, role-based access control, key rotation, and environment separation. Storage systems should handle logs, backups, and AI training data with the same level of protection.

Deletion is equally important. Once a parent revokes consent, data must be purged across live systems and long-term archives. Inconsistent deletion strategies are one of the fastest ways to fail an audit.

6. Transparent Privacy Notices

COPPA requires privacy policies to be easy to understand, accurate, and updated whenever features or data flows change. Notices must spell out what data the platform collects, how it is used, who receives it, and how parents can exercise their rights.

School-mode notices differ from consumer-mode policies. Enterprise teams often forget this distinction, which leads to compliance gaps that legal teams must manually repair.

7. School-Based Consent

Schools can authorize data collection for learning purposes under specific conditions. This is useful for large deployments but must be handled carefully. The app must limit data to what is needed for the educational experience and ensure that the school, not the child, is the primary user.

A compliant implementation logs the school’s authorization, connects it to the correct accounts, and provides mechanisms for parents to later review or revoke access.

When these requirements come together, they form a stable privacy backbone that keeps young users safe and your organisation audit-ready.

Why Over 80% of Parents Prefer Privacy-Aligned Kids’ Platforms

Parents have become far more attentive to the way digital services store, track, and share their children’s information. Their expectations now influence which learning tools gain traction, and which ones never make it past the trial stage. For enterprises entering the children’s learning market, this shift turns privacy alignment into a competitive lever rather than a compliance task.

1. Parental Concern at Record Levels

Recent findings from the UK’s Information Commissioner’s Office show that nine in ten parents are uneasy about how companies handle children’s data.

That level of concern creates pressure on every organisation building products for young users. Parents expect clear explanations, consistent safeguards, and visible accountability from the first onboarding screen. Without this, platforms face hesitation before adoption even begins.

2. Trust Directly Influences Adoption

Families shape the decisions teachers, districts, and procurement teams make about digital learning tools. When a platform demonstrates a strong child-privacy posture, it moves through approval cycles with fewer delays.

Survey data shows that more than 80% of parents believe child-specific privacy rules make online spaces safer. This has created a baseline expectation that if an app is designed for children, it must meet higher privacy standards to earn trust.

3. Data Misuse As a Competitive Weakness

Large-scale analysis of children’s mobile apps continues to show widespread non-compliance. One major study examining 20,195 child-focused Android apps found that 81.25% contained trackers, including those meant to be prohibited for younger audiences.

This gap between public claims and actual behaviour creates reputational and operational risk for new entrants. Enterprises must now demonstrate how they restrict unnecessary data flows, not simply assert compliance in their marketing materials.

4. Rewired Market Expectations

Oversight bodies have increased enforcement. The UK ICO recently issued information notices to companies that failed to meet required safeguards for young users.

This marks a shift from advisory guidance to formal intervention. For enterprises, this means privacy design influences partnerships, RFP outcomes, and long-term distribution strategies. Compliance is now a deciding factor in whether a platform is allowed into classrooms.

5. Parental Expectations Influence Monetisation Models

Families look closely at how apps generate revenue. Behavioural ads, cross-profile targeting, and third-party data networks often fail their risk test.

Parents prefer learning tools that minimise data use and rely on transparent, non-surveillance-based models. These expectations influence how enterprises choose analytics tools, monetisation frameworks, and external SDKs.

COPPA-aligned systems pave the way for faster adoption, stronger trust, and long-term positioning in a rapidly expanding market. With this foundation in place, it becomes easier to recognise where most learning apps fall short.

Common COPPA Violations in Kids’ Learning Apps

Most COPPA violations stem from excessive data capture, weak consent workflows, and unvetted SDKs that transmit identifiers to external vendors. Many learning apps also fail to provide accurate notices or complete data deletion. These issues create legal exposure and make districts hesitant to approve new platforms.

Below are the violations that surface most frequently during COPPA assessments and school-district procurement checks.

1. Hidden Data Trackers

Many apps rely on analytics or performance-tracking SDKs that quietly collect device identifiers, session details, and behavioural patterns. These trackers often transmit information even when the app does not need it for learning.

Without routine code reviews and static analysis, these behaviours go unnoticed and create serious compliance gaps.

2. Collecting Unnecessary Data

Some platforms gather precise location, device fingerprints, continuous microphone data, or full behavioural logs. Most of this information is unnecessary for delivering lessons or tracking progress.

When teams expand features without revisiting data scopes, collection patterns drift far beyond what COPPA treats as reasonably necessary for the activity.

3. Non-Verifiable Consent Workflows

COPPA requires strong verification that the consenting individual is the actual parent or guardian. Many apps still rely on email confirmations, simple pop-ups, or frictionless flows that do not meet regulatory standards.

As users move between mobile, web, and school devices, fragmented consent paths create mismatches that weaken the entire approval trail.

4. Vague Privacy Notices

Privacy pages often describe intended data behaviour, not actual behaviour. When a platform integrates new tools or adjusts its analytics pipeline, the notice rarely gets updated immediately.

During procurement, districts look for aligned documentation. Any mismatch reduces trust and triggers additional scrutiny from compliance teams.

5. Incomplete Data Deletion

Deletion must cover every layer of the system, including logs, caches, backups, and downstream vendor services. Many learning apps delete only the primary profile record and leave behind fragments across integrated tools.

These inconsistencies become violations when parents request removal or when auditors sample stored data.

6. Misuse of School-Based Consent

Schools can authorize data collection for classroom instruction. However, this allowance does not extend to analytics models, behavioural profiling, or marketing workflows. Some platforms stretch the definition of “educational purpose,” placing both the vendor and the school district at compliance risk during a review.

These recurring issues highlight how easily learning apps can slip out of alignment as systems evolve. The stakes become even higher when AI-driven features enter the ecosystem, which leads to an important discussion: Does COPPA apply to AI-powered learning apps?

Does COPPA Apply to AI-Powered Learning Apps?

COPPA applies fully to AI-powered learning apps, including models that process voice, images, behaviour patterns, or personalized learning data. Any information about a child that an AI feature collects, stores, analyzes, or infers is treated as personal data. This requires verified consent, strict minimization, and transparent data handling.

Below are the areas where most AI features intersect with COPPA requirements.

1. Voice and Speech Input

Speech recognition models often store recordings or transcripts to improve accuracy. Even temporary storage falls under regulated data.

Teams must disclose this behaviour, limit retention, and obtain clear parental consent before activating any microphone-based feature.

2. Image and Visual Processing

Tools that use cameras for reading assessments, emotion detection, or engagement tracking handle highly sensitive information.

Image data must be minimized, secured, and deleted on request. Most parents are unaware of how these systems work, so transparency is essential.

3. Behavioural and Progress Analytics

Adaptive learning engines analyze performance patterns to adjust difficulty levels. These patterns can reveal identity-linked traits.

Enterprises must explain how analytics models use this information, where it is stored, and how long it remains accessible.

4. Model Training and Data Reuse

Many AI systems improve through exposure to real user data. This can violate COPPA if a child’s information is used to train general-purpose models.

Only purpose-limited, consent-backed data sets are allowed. Enterprises should maintain strict boundaries between operational and training data.

5. Vendor AI Models

Some learning platforms rely on third-party AI services to process speech, content, or insights. These services may store or reuse inputs by default. Without clear contracts and data restrictions, this becomes an immediate COPPA violation.

With AI features collecting richer and more sensitive data, the impact of non-compliance becomes much more severe. To understand the risk clearly, let’s break down what happens when a kids’ learning app fails to meet COPPA standards.

What Happens if Your Learning App Is Not COPPA-Compliant?

When a kids’ learning app violates COPPA, consequences include million-dollar fines, app-store sanctions, lost school contracts, and reputational damage. For example, one company agreed to a $500,000 settlement over a third-party SDK that collected children’s geolocation data without parental notice or consent. These outcomes make compliance a strategic business necessity, not just a legal checkbox.

Below are some concrete cases worth knowing.

1. Financial Penalties

In one case, Apitor Technology Co., Ltd. agreed to a $500,000 fine for integrating a third-party SDK that collected geolocation data from children’s apps without parental notice or consent.

Another prominent case is TikTok (via its predecessor Musical.ly) paying $5.7 million in 2019 for collecting personal info from children under 13 without verifying parental consent. These figures show how costly non-compliance becomes.

2. Distribution Restrictions

When compliance issues surface, platform owners take action. For example, apps that don’t label content appropriately or that embed uncontrolled tracking may be removed from Google Play or the Apple App Store. Removal slows your rollout, reduces reach, and forces architecture changes mid-deployment. Teams often underestimate this impact on scaling and monetisation.

3. Loss of School and District Contracts

When a learning app vendor fails to document consent flows or demonstrate audited deletion practices, school districts can terminate contracts or prohibit rollout across classrooms. Educational procurement policies demand compliance evidence. This makes remediation very expensive and disruptive for enterprise plans.

4. Regulatory Oversight Intensifies

Regulators are evolving fast. The Federal Trade Commission (FTC) updated COPPA rules in 2025 to include stricter data-retention limits and clearer obligations for third-party sharing.

When auditors detect weaknesses in consent verification, data deletion, or vendor controls, the whole feature pipeline may slow down due to legal intervention.

5. Market Reputation Damage

Any public enforcement, such as the $10 million penalty paid by The Walt Disney Company for the misclassification of children’s content, creates large-scale media coverage and parent/educator concern.

For a learning platform, trust is a core value. Once lost, user adoption, retention, and district endorsements all suffer.

6. Vendor and Supply-Chain Liability

In the Apitor case, data collection by a third-party SDK triggered the fine. Your vendor contracts need to reflect that cost and oversight risk. Poor controls here add hidden costs and operational delays to product growth.

These examples show how quickly a learning app ecosystem can become exposed. With multiple control gaps and evolving regulatory definitions, the next step is to define what a compliant app looks like. Let’s move to the features you must build into a COPPA-ready kids’ learning platform.

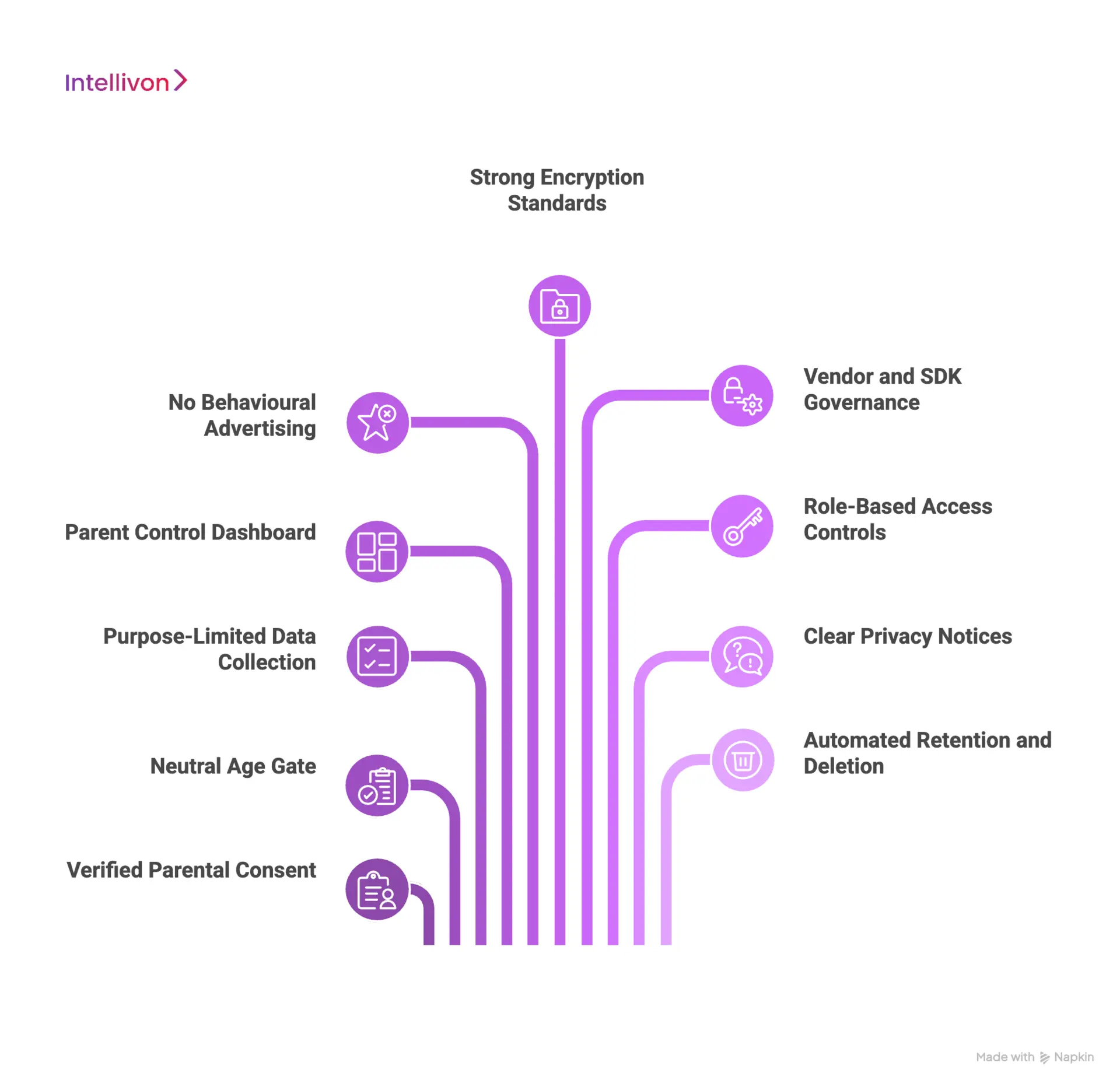

Key Features of A COPPA-Compliant Kids’ Learning App

A COPPA-compliant children’s app uses verified parental consent, minimal data collection, strong encryption, and controlled access to protect young users. It also enforces vendor governance, transparent notices, and automated deletion across all systems. These features form the foundation of a secure, trustworthy learning environment.

1. Verified Parental Consent

A compliant platform cannot collect personal data until a parent completes a verified approval flow. This includes clear disclosures, step-by-step confirmations, and a verifiable method such as ID checks, card verification, or school-admin approval.

Strong consent logs help teams resolve disputes, respond to audits, and maintain transparency throughout the relationship.

2. Neutral Age Gate

Age gating must remain neutral and avoid leading children to choose a higher age. The system uses a child’s response only to route them to the correct onboarding flow.

Users under 13 are directed to a parent-led setup, while older learners follow a simplified path. This structure prevents accidental misclassification and protects the platform from early compliance errors.
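
The routing step itself is small. The sketch below assumes the app asks for a date of birth with no hint about the threshold and then picks a flow; the function names and flow labels are hypothetical.

```python
from datetime import date

COPPA_AGE = 13  # COPPA covers children under 13


def age_on(birth_date: date, today: date) -> int:
    """Whole years between birth_date and today."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def onboarding_flow(birth_date: date, today: date) -> str:
    """Route by age only; nothing else is collected at this step."""
    if age_on(birth_date, today) < COPPA_AGE:
        return "parent_led_setup"
    return "standard_setup"
```

Passing `today` in explicitly keeps the function easy to test around birthdays, which is where misclassification bugs tend to hide.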

3. Purpose-Limited Data Collection

Every data field inside the product must support a clear instructional or operational purpose. The system should avoid capturing unnecessary identifiers, large text inputs, or behavioural signals unrelated to learning.

These limits keep risk low, reduce storage obligations, and simplify vendor audits. At the same time, purpose-limitation also helps teams scale safely as new features are added.

4. Parent Control Dashboard

Parents expect meaningful oversight of their child’s digital footprint. A strong dashboard shows what data the app stores, how long it remains available, and how it contributes to the learning experience.

Parents can adjust permissions, control visibility, and request deletion or export. This creates a transparent partnership between families and the platform.

5. No Behavioural Advertising

Behavioural ads introduce hidden risks. They track activity, profile behaviour, and often depend on cross-service identifiers. COPPA discourages these models, and parents increasingly reject them.

A compliant platform uses monetisation strategies that avoid targeted advertising and do not rely on personal tracking. This protects children and simplifies legal oversight.

6. Strong Encryption Standards

Security supports every privacy requirement. Encryption must protect data in transit and at rest. Internal systems should enforce strict access controls, secure APIs, and continuous monitoring.

Alerts help identify unusual activity early, reducing the chance that a small issue becomes a major incident. This level of defence builds resilience across the entire environment.

7. Vendor and SDK Governance

Most compliance failures trace back to third-party tools. SDKs may track behaviour, collect identifiers, or store data without clear disclosure.

A COPPA-ready platform reviews every vendor, validates their data practices, and removes tools that cannot meet child-safety requirements. Ongoing audits ensure no silent behaviours appear after updates or integrations.

8. Role-Based Access Controls

Only authorised staff should see child-level data. Role-based permissions enforce boundaries inside the organisation. Audit logs record every access event, helping teams trace actions during investigations or reviews.

These internal safeguards are essential for enterprise-level compliance and reduce the impact of human error.
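
A minimal sketch of this pattern pairs a role-to-permission map with a log entry for every access attempt, whether it succeeds or not. The roles and permission names below are made up for illustration, and a real system would write the log to tamper-resistant storage instead of a list.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions for illustration.
ROLE_PERMISSIONS = {
    "support_agent": {"read_parent_contact"},
    "privacy_officer": {"read_parent_contact", "read_child_record", "delete_child_record"},
    "engineer": set(),
}

audit_log = []


def access(user: str, role: str, permission: str, child_id: str) -> bool:
    """Check a permission and record the attempt, allowed or not."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "child_id": child_id,
        "allowed": allowed,
    })
    return allowed
```

Logging denied attempts as well as granted ones is deliberate: repeated denials are often the first visible sign of a misconfigured role or a misuse attempt.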

9. Clear, Human-Readable Privacy Notices

Privacy information must make sense to families, not just to lawyers. Notices should explain data use with plain language and practical examples.

The app should reinforce these explanations when features change or new permissions are needed. When parents understand the system clearly, trust grows and complaints decrease.

10. Automated Retention and Deletion

Child data cannot remain in the system indefinitely. Automated workflows remove information when it is no longer required for learning, security, or operations.

Deletion must cover every storage layer: primary databases, logs, caches, and backups. This reduces long-term exposure and ensures clean, auditable data hygiene.
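
An automated retention rule can be as simple as a per-category time window checked on a schedule. In the sketch below, the categories and the 30-day and 365-day windows are placeholder assumptions; actual retention periods are a policy decision for each organisation.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows per data category.
RETENTION = {
    "crash_logs": timedelta(days=30),
    "learning_progress": timedelta(days=365),
}


def expired(records: list, now: datetime) -> list:
    """Return records whose category window has passed.

    Records in an unknown category are treated as expired, so nothing is
    kept without an explicit retention rule.
    """
    out = []
    for record in records:
        window = RETENTION.get(record["category"])
        if window is None or now - record["created_at"] > window:
            out.append(record)
    return out
```

The list returned by `expired()` would then be handed to whatever deletion job covers each storage layer.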

Together, these features create a stable privacy foundation for any children’s learning platform. They reduce risk, improve trust, and support large-scale adoption. Next, we’ll break down the biggest challenges teams face when building COPPA-ready platforms and how to solve them effectively.

Challenges and Solutions in Building COPPA-Ready Kids’ Learning Platforms

Building a COPPA-ready learning platform involves challenges in consent verification, data minimization, vendor control, and secure storage. These issues grow with scale and feature expansion. Our team addresses these gaps through compliant architecture, unified consent systems, disciplined SDK governance, and automated data protections built for long-term trust.

Below are the most common challenges, paired with the solutions our platform design and engineering teams deliver.

1. Fragmented Consent Workflows

Consent becomes messy when apps run across multiple devices, classroom systems, or shared accounts. Parents complete approval on one device, but the app collects data on another. This creates inconsistent records and gaps that auditors quickly find.

Our Solution:

We build a unified consent engine that stores every approval, revocation, and update in one place. It syncs across mobile, web, and school environments, giving teams a single audit-ready source of truth. Parents also receive a cleaner onboarding experience.

2. Uncontrolled Data Collection

Learning apps often grow faster than their data maps. Behaviour analytics, open text fields, and AI features start pulling information the team never intended to store. This increases exposure and complicates compliance reviews.

Our Solution:

We design purpose-limited data schemas and enforce collection boundaries at the feature level. Automated checks flag unnecessary fields before deployment. Clear data-flow diagrams help teams understand what enters the system, where it travels, and how long it stays.

3. Hidden Tracking Inside Third-Party SDKs

Most COPPA violations originate from vendor tools. Some SDKs collect device identifiers or usage patterns without clear documentation. These behaviours remain hidden until regulators or security teams detect them.

Our Solution:

All vendor libraries go through strict review, static analysis, and compliance screening. Tools that fail child-data requirements are removed or replaced. Continuous monitoring ensures no unauthorised tracking appears after updates.

4. Weak Internal Access Controls

When internal teams grow, access levels become unclear. Engineers, analysts, and support staff often retain permissions they no longer need. Over time, this increases the risk of accidental data exposure.

Our Solution:

We implement role-based access controls with detailed audit logs. Permissions match job functions, and sensitive records stay accessible only to authorised personnel. These logs support internal audits and help teams track incidents quickly.

5. Inconsistent Data Deletion Across Systems

Deletion is one of the hardest COPPA requirements. Removing a child’s profile from the main database is simple, but cached copies, logs, and vendor backups can remain untouched.

Our Solution:

Our architectures include automated retention rules that clear data across every storage layer, including logs and third-party integrations. This ensures deletion requests are complete, consistent, and verifiable.

6. Outdated or Inaccurate Privacy Notices

Policies often fall behind actual data behaviour. When new features launch, notices don’t always update immediately. During procurement or audits, this becomes a visible mismatch.

Our Solution:

We help teams create human-readable notices tied directly to real data flows. When features change, the system triggers content updates and prompts for re-consent if required. Families and schools receive accurate information at every stage.

7. Lack of Region-Aware Compliance

Apps that serve both US and UK audiences must align with COPPA, GDPR-K, and local age-appropriate design codes. Many teams treat these mandates as identical, which leads to major compliance gaps.

Our Solution:

We build region-aware paths that adjust consent, data rights, and privacy prompts based on local regulations. This creates consistent protection across markets and reduces the burden on product teams.
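
To make the idea of region-aware paths concrete, the sketch below looks up a policy by region code and falls back to the strictest settings when the region is unknown. The `RegionPolicy` fields and the age values are illustrative assumptions and should be confirmed against current law before anyone relies on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionPolicy:
    regime: str
    parental_consent_below: int       # age under which a parent must consent
    child_design_defaults_below: int  # age under which child-safe defaults apply


# Illustrative values only; verify against current regulations.
POLICIES = {
    "US": RegionPolicy("COPPA", 13, 13),
    "GB": RegionPolicy("UK GDPR + Age Appropriate Design Code", 13, 18),
}
STRICTEST = RegionPolicy("unknown region, strictest default", 18, 18)


def onboarding_requirements(region: str, age: int) -> dict:
    """Decide which protections apply for a learner of this age and region."""
    policy = POLICIES.get(region, STRICTEST)
    return {
        "regime": policy.regime,
        "parental_consent": age < policy.parental_consent_below,
        "child_design_defaults": age < policy.child_design_defaults_below,
    }
```

Keeping the thresholds in data instead of scattering them through the code means a regulatory change becomes a one-line policy update.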

These challenges show why compliance must be an engineering priority, not a final legal check. The right architecture, data governance, and vendor controls help learning apps stay safe as they grow.

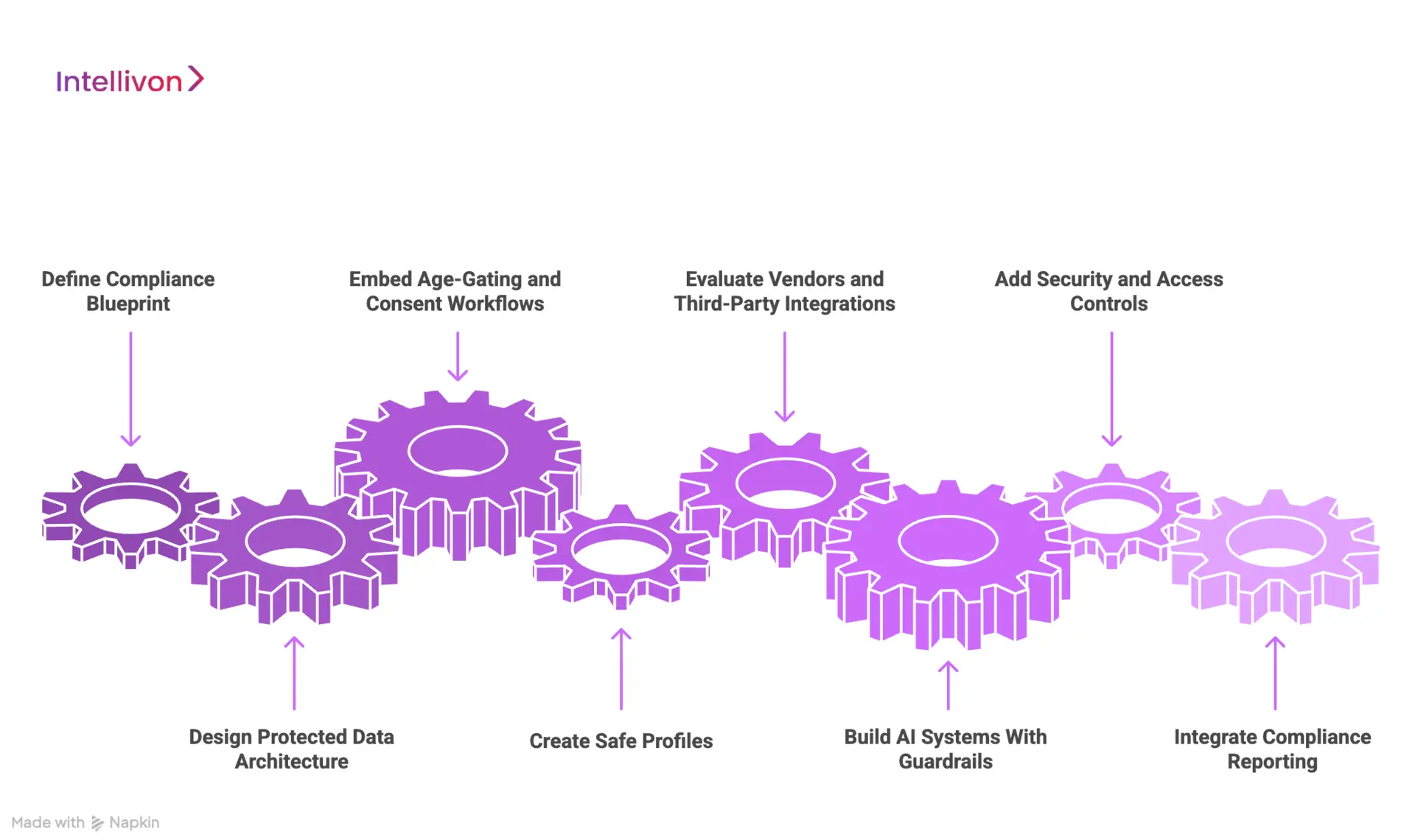

How Intellivon Builds COPPA-Ready Kids’ Learning Platforms

Creating a COPPA-ready learning platform requires the same discipline used in secure enterprise systems. Every interaction, data touchpoint, and internal dependency must align with clear privacy objectives. Our team designs these platforms from the ground up so parents feel safe, schools can verify compliance, and regulators receive the documentation they expect.

1. Define the Compliance Blueprint

Every project begins with a structured discovery phase. Product, legal, security, and data teams come together to identify essential features, the specific information those features require, and the data that must never enter the system.

This stage maps consent events, parental controls, and vendor interactions. The blueprint becomes the reference point for design, engineering, and future audits.

2. Design Protected Data Architecture

Children’s data receives its own protected pathway. Only information needed for learning outcomes or platform safety enters the system. It stays isolated from marketing tools, broad analytics trackers, and unnecessary storage layers.

This reduces exposure, simplifies governance, and helps the enterprise meet its compliance duties with less operational strain.

3. Embed Age-Gating and Consent Workflows

Age screening appears at the first interaction. If a user identifies as under 13, the system pauses personal data collection and shifts into a parent-controlled onboarding process.

Verified parental consent is captured through approved methods, and each step is recorded. These records support school-district reviews, regulatory inquiries, or internal audits.

4. Create Safe Profiles

Once approval is confirmed, the child’s profile is created with only essential fields. No precise locations, full names, or unnecessary identifiers circulate through the environment.

Parents and schools receive dashboards that show stored data, current permissions, and available controls. They can request updates, downloads, or deletion whenever they need.
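A simple way to keep profiles minimal is to allowlist fields at creation time. The field names below are hypothetical; which fields count as essential depends on the product and should be set during the compliance blueprint.

```python
# Hypothetical set of essential fields agreed during discovery.
ESSENTIAL_FIELDS = frozenset({"display_name", "grade_level", "language"})


def build_child_profile(submitted: dict) -> dict:
    """Keep only allowlisted fields; everything else is dropped, not stored."""
    return {key: value for key, value in submitted.items() if key in ESSENTIAL_FIELDS}
```

For example, a submission containing `full_name` or `gps` alongside `display_name` would produce a profile with `display_name` only.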

5. Evaluate Vendors and Third-Party Integrations

Every external component, including SDKs, analytics tools, infrastructure services, and payment systems, undergoes a detailed review. The evaluation checks what information each tool touches, how it stores data, and whether that data is reused in any way.

Vendors that cannot meet COPPA or GDPR-K expectations are excluded. This ensures the platform does not leak information through hidden integrations.
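The review criteria above can be captured as a structured record with an explicit pass or fail rule. The sketch below is an assumption-heavy illustration: the `VendorReview` fields, the restricted data categories, and the 90-day retention limit are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical categories a third-party tool must never touch.
RESTRICTED_DATA = frozenset({"advertising_id", "precise_location", "voice_recording"})


@dataclass(frozen=True)
class VendorReview:
    name: str
    data_touched: frozenset   # what information the tool touches
    reuses_data: bool         # whether the vendor reuses data for its own purposes
    retention_days: int       # how long the vendor stores data


def rejection_reasons(review: VendorReview, max_retention_days: int = 90) -> list:
    """Return the reasons a vendor fails review; an empty list means approved."""
    reasons = []
    overlap = review.data_touched & RESTRICTED_DATA
    if overlap:
        reasons.append("touches restricted data: " + ", ".join(sorted(overlap)))
    if review.reuses_data:
        reasons.append("reuses data for its own purposes")
    if review.retention_days > max_retention_days:
        reasons.append(f"retains data longer than {max_retention_days} days")
    return reasons
```

Returning reasons rather than a bare yes or no gives procurement and legal teams a record of why a vendor was excluded.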

6. Build AI Systems With Guardrails

AI can improve learning and on-platform safety, but it must operate inside tight boundaries. We design AI features that use minimal, purpose-specific data and avoid behavioural profiling. Models run on de-identified inputs, and outputs stay inside the platform.

Guardrails filter unsafe content, reduce bias, and maintain age-appropriate interactions. This lets enterprises benefit from AI without crossing regulatory lines.
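As a minimal sketch of these two boundaries, the example below strips identifiers before a model sees a record and screens the reply before a child sees it. Everything here is hypothetical: `model` stands for any callable, and the feature list and blocked terms are placeholders. A simple keyword check is far weaker than a real content-safety system and is shown only to make the structure concrete.

```python
from typing import Callable

# Hypothetical learning signals the model is allowed to see.
MODEL_FEATURES = ("skill", "correct", "attempts")
# Placeholder terms suggesting a reply asks for personal details.
BLOCKED_TERMS = ("address", "phone", "password")
FALLBACK_REPLY = "Let's try another practice question!"


def deidentify(record: dict) -> dict:
    """Pass through only allowlisted learning signals; identifiers are dropped."""
    return {key: record[key] for key in MODEL_FEATURES if key in record}


def guarded_reply(model: Callable[[dict], str], record: dict) -> str:
    """Call the model on de-identified input and screen its output."""
    reply = model(deidentify(record))
    if any(term in reply.lower() for term in BLOCKED_TERMS):
        return FALLBACK_REPLY
    return reply
```

The wrapper keeps both checks in one place, so no feature can call the model directly with a raw child record.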

7. Add Security and Access Controls

Security strengthens every compliance effort. Data is encrypted in transit and at rest, and only authorised team members can access sensitive records.

Role-based permissions and detailed logs show who interacted with what data. These logs provide clarity during incidents and help organisations demonstrate compliance during external reviews.
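Role-based permissions and access logging can be sketched together in a few lines. The roles, actions, and in-memory log below are assumptions for illustration; a real deployment would use an identity provider and tamper-resistant log storage.

```python
from datetime import datetime, timezone

# Hypothetical roles and the actions each may perform.
ROLE_PERMISSIONS = {
    "teacher": frozenset({"read_progress"}),
    "privacy_officer": frozenset({"read_progress", "export_data", "delete_data"}),
}

audit_log = []  # in-memory stand-in for durable audit storage


def authorize(user: str, role: str, action: str, record_id: str) -> bool:
    """Check the role's permissions and log the attempt, allowed or not."""
    allowed = action in ROLE_PERMISSIONS.get(role, frozenset())
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "record_id": record_id,
        "allowed": allowed,
    })
    return allowed
```

Denied attempts are logged as well, since they are often the most useful entries during an incident review.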

8. Integrate Compliance Reporting

After launch, our monitoring systems watch for unusual activity, consent errors, and changes in third-party behaviour. Alerts reach the right teams immediately.

Automated reports support school partners, district procurement teams, and regulators. This reduces manual work and ensures consistent oversight as the platform grows.
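Two of the checks mentioned above, changes in third-party behaviour and consent errors, reduce to simple comparisons against a known baseline. The sketch below assumes hypothetical inputs: a list of observed outbound domains, an approved baseline, and event records with `child_id` and `collects_personal_data` keys.

```python
def unapproved_destinations(observed, approved) -> list:
    """Domains the app contacted that are missing from the approved baseline."""
    return sorted(set(observed) - set(approved))


def consent_errors(events, consented_ids) -> list:
    """Events that collected personal data for a child with no consent on file."""
    return [
        event for event in events
        if event["collects_personal_data"] and event["child_id"] not in consented_ids
    ]
```

Either function returning a non-empty list is the kind of signal that would raise an alert and feed into a scheduled report.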

This structured process ensures every platform we build is COPPA-ready from the first release, not patched afterward. Enterprises gain a learning system grounded in trust, supported by disciplined governance, and designed for long-term success in regulated markets.

Cost to Build COPPA-Ready Children’s Apps

At Intellivon, we help enterprises build COPPA-ready kids’ learning platforms that are trusted by families, schools, and regulators. Costs vary based on scope, regulatory depth, market region (US, UK, or EU), and the level of privacy controls required. Our priority is to help clients launch platforms that protect children, minimise risk, and meet long-term compliance expectations.

When budgets are tight, we work with product, legal, and IT leaders to refine scope without compromising COPPA, GDPR-K, or Age-Appropriate Design Code alignment. Every build is planned for long-term stability, transparent data flows, and regulatory readiness. The goal is to create a platform that parents trust and institutions can adopt without hesitation.

Estimated Phase-Wise Cost Breakdown

| Phase | Description | Estimated Cost Range (USD) |
| --- | --- | --- |
| Discovery & Compliance Alignment | Requirements mapping, risk analysis, COPPA/GDPR-K scoping, data minimisation rules, consent workflow planning | $5,000 – $10,000 |
| Privacy-First Architecture Design | Data isolation design, encryption planning, role-based access layers, and regional compliance flows | $7,000 – $14,000 |
| Age Gate & Consent Engineering | Neutral age-gating, parent onboarding, verified consent systems, audit logging | $6,000 – $12,000 |
| Learning Module & Content Engine | Progress tracking, safe personalisation, offline learning, content filters, child-friendly UI | $10,000 – $20,000 |
| Parent Dashboard & Controls | Permission management, data view/download/delete tools, alerts, and reporting access | $6,000 – $12,000 |
| Vendor & SDK Governance Setup | Third-party audits, safe analytics, secure SDK selection, and integration hardening | $5,000 – $10,000 |
| Security & Compliance Engineering | Encryption, tokenisation, access controls, audit logs, continuous monitoring | $8,000 – $15,000 |
| Platform UX & Interfaces | Child experience, parent dashboard UI, school admin tools, accessibility design | $8,000 – $16,000 |
| Testing & Validation | COPPA testing, GDPR-K checks, penetration tests, and content safety validation | $5,000 – $10,000 |
| Pilot & Rollout | Limited launch, feedback cycles, refinement of controls, support setup | $5,000 – $10,000 |
| Deployment & Scaling | Cloud deployment, regional routing, failover design, and observability | $6,000 – $12,000 |

Total Initial Investment Range:

$50,000 – $140,000

Ongoing Maintenance & Optimization (Annual):

15–20% of the initial build
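For planning purposes, the phase ranges and the maintenance percentage can be combined in a quick calculation. The sketch below sums every phase at full scope, which gives a range of about $71,000 to $141,000. The stated total starts lower, at $50,000, which presumably reflects builds where some phases are reduced or combined; that reading is an assumption.

```python
# Phase estimates in USD (low, high), copied from the breakdown table.
PHASE_RANGES_USD = {
    "Discovery & Compliance Alignment": (5_000, 10_000),
    "Privacy-First Architecture Design": (7_000, 14_000),
    "Age Gate & Consent Engineering": (6_000, 12_000),
    "Learning Module & Content Engine": (10_000, 20_000),
    "Parent Dashboard & Controls": (6_000, 12_000),
    "Vendor & SDK Governance Setup": (5_000, 10_000),
    "Security & Compliance Engineering": (8_000, 15_000),
    "Platform UX & Interfaces": (8_000, 16_000),
    "Testing & Validation": (5_000, 10_000),
    "Pilot & Rollout": (5_000, 10_000),
    "Deployment & Scaling": (6_000, 12_000),
}

# Full-scope totals across all eleven phases.
low_total = sum(low for low, _ in PHASE_RANGES_USD.values())
high_total = sum(high for _, high in PHASE_RANGES_USD.values())

# Annual maintenance at 15% of the low total and 20% of the high total.
maintenance_low = low_total * 15 // 100
maintenance_high = high_total * 20 // 100

print(f"Full-scope build: ${low_total:,} to ${high_total:,}")
print(f"Annual maintenance: ${maintenance_low:,} to ${maintenance_high:,}")
```

Removing or shrinking entries in `PHASE_RANGES_USD` shows how a leaner scope moves both the build estimate and the recurring maintenance figure.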

Hidden Costs Enterprises Should Plan For

- Integration complexity: Schools and districts may use mixed systems requiring custom connectors.

- Compliance overhead: Documentation, audits, and periodic assessments create recurring effort.

- Data governance: Normalising formats and maintaining separation across regions needs steady work.

- Cloud usage: Learning content, analytics, and secure storage require active cost management.

- Change management: Training teachers, parents, and admins introduces measurable transition costs.

- AI safety tuning: If AI is used, guardrails and bias checks require periodic recalibration.

Best Practices to Avoid Budget Overruns

- Start lean: Launch with core features, validate demand, then scale modules.

- Embed compliance early: Avoid rebuilding flows later, which drives cost.

- Use modular design: Reuse content engines, dashboards, and consent systems across regions.

- Optimise cloud spending: Mix real-time and batch processing where possible.

- Enforce observability: Track uptime, data flows, and vendor behaviour continuously.

- Iterate for longevity: Update privacy notices, refine controls, and test new regulations.

Request a tailored proposal from Intellivon’s edtech compliance experts. We will align your COPPA-ready roadmap with your budget, regulatory obligations, and long-term growth strategy.

Conclusion

COPPA compliance has evolved into a strategic foundation for any children’s learning platform. It influences trust, procurement, adoption, and long-term scalability. When privacy is built into the architecture rather than added later, organisations gain a safer product, a stronger reputation, and a clearer path to market growth.

With the right design choices, governance, and ongoing oversight, a learning app can operate confidently in highly regulated environments. For enterprises that want to compete in this space, privacy is now a core product requirement, not an optional layer.

Build Your COPPA-Compliant Learning Platform with Intellivon

At Intellivon, we develop COPPA-aligned children’s learning platforms that protect young users, support families, and help enterprises meet strict regulatory expectations. Our approach strengthens privacy, reduces audit complexity, and improves adoption across schools, districts, and global markets.

Why Partner With Intellivon?

- Compliance-First Architecture: Systems designed around COPPA, GDPR-K, and the UK’s Age-Appropriate Design Code with minimal data collection, safe defaults, and strict isolation.

- Verified Consent and Age Gates: Audit-ready parental verification, neutral age screens, and clear onboarding flows that prevent accidental non-compliance.

- Secure, Modern Infrastructure: End-to-end encryption, role-based access, strong API protections, and continuous monitoring across environments.

- Responsible AI and Personalisation: Intelligent learning features that avoid profiling, behavioural tracking, or unnecessary identifiers while staying age-appropriate.

- Vendor and SDK Governance: Thorough evaluation of third-party tools to eliminate hidden trackers, unauthorised collection, or unsafe integrations.

- Parent and School Dashboards: Transparent tools that allow families and educators to view data, adjust permissions, request deletion, and stay informed.

- Enterprise Delivery Expertise: Deep experience across edtech, privacy engineering, and complex compliance requirements for large organisations.

Connect with our team to learn how a COPPA-ready learning platform can increase trust, strengthen safety, and accelerate your product’s adoption in regulated markets.

FAQs

Q1. What makes a children’s learning app fully COPPA-compliant in 2025?

A1. A COPPA-compliant app verifies parental consent, limits data to essential learning use cases, isolates child information from marketing systems, and enforces strict vendor controls. It also provides clear privacy notices, safe defaults, and automated deletion across all storage layers.

Q2. How long does it take to build or upgrade a COPPA-ready learning platform?

A2. Timelines vary by feature complexity, data flows, and existing system architecture. Most enterprise-grade updates take 8–14 weeks to implement fully, including consent systems, SDK audits, age gates, and privacy redesign. Platforms with legacy data issues may need more time.

Q3. Can AI features be added without violating COPPA requirements?

A3. Yes. AI can support learning if it operates on minimal, purpose-specific data and avoids behavioural profiling. Models must rely on de-identified inputs, run within controlled environments, and never reuse child data for external training or analytics.

Q4. How do enterprises manage third-party SDKs that may collect restricted data?

A4. Every SDK requires a full privacy and technical review. Enterprises should run static analysis, confirm data boundaries, restrict optional tracking, and remove any tool that reuses or transmits identifiers. Continuous monitoring ensures no new behaviours appear after updates.

Q5. Why do schools and districts prefer COPPA-ready platforms during procurement?

A5. Districts choose compliant platforms because they reduce legal exposure, simplify internal reviews, and protect student privacy. Clear consent flows, transparent data practices, and audit-ready documentation help products move through procurement faster and gain broader approval across classrooms.