Children now learn, explore, and socialize using digital platforms from an early age. However, the systems built for them often collect much more data than needed, rely on unclear vendor connections, and expose children to risks that parents may not notice. The Children’s Online Privacy Protection Act (COPPA) aims to prevent this, but many learning apps still use weak consent processes, grant broad permissions, and have vague data practices. This results in regulatory exposure, reputational risk, and ongoing trust issues for any platform aimed at young users.

At Intellivon, we help companies create learning platforms for kids that meet the COPPA privacy standards from the start. Each platform is designed to handle sensitive data responsibly and to work in environments with strict regulations where regulators, school districts, and parents expect clarity. In this blog, we explain how we build these enterprise-grade, compliant EdTech learning platforms from the ground up.

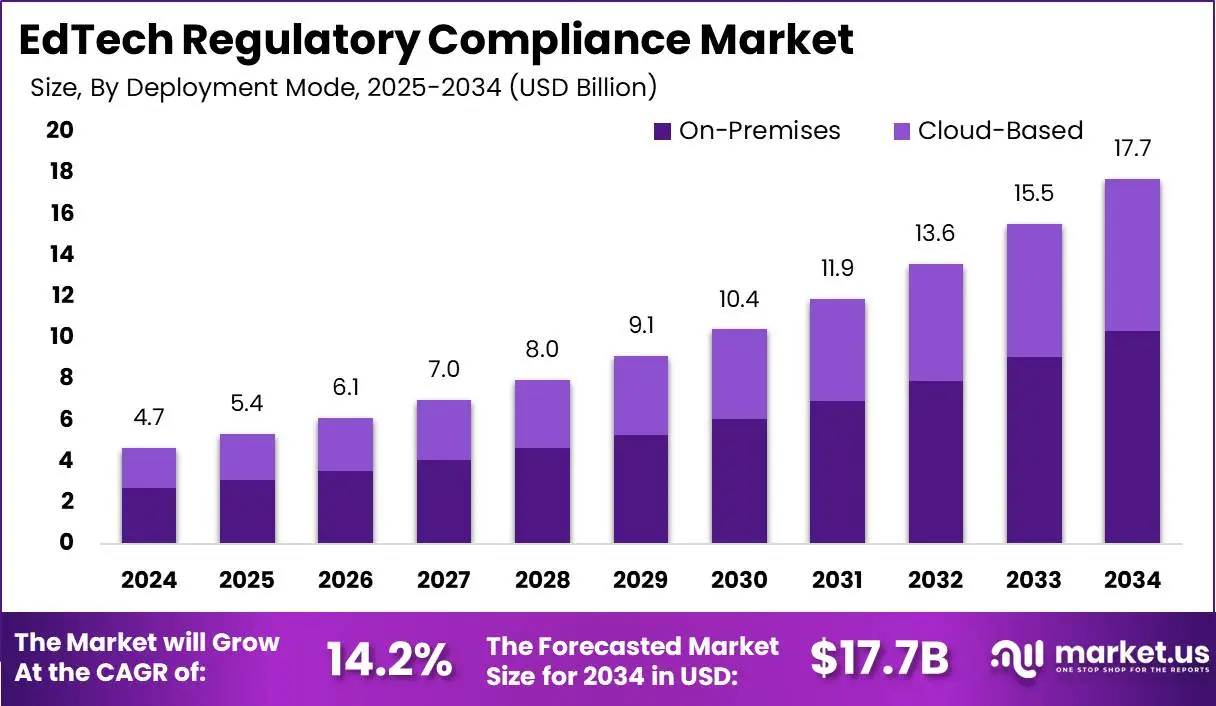

Key Takeaways of the Global EdTech Regulatory Compliance Market

The global EdTech regulatory compliance market is expanding rapidly. It is projected to reach USD 17.7 billion by 2034, up from USD 4.7 billion in 2024, growing at a 14.2% CAGR. North America leads with 42% market share, generating USD 1.9 billion in 2024.

Key Trends

- Broader digital learning adoption is also accelerating, with the U.S. market expected to hit USD 300 billion by 2033. Mobile learning, which is one of the primary channels for kids’ learning apps, will reach USD 110.42 billion by 2025.

- Regulators have increased enforcement. The FTC updated COPPA rules in April 2025 to restrict data retention, require explicit opt-in for targeted ads, and demand higher transparency from vendors.

- Schools and districts are prioritizing platforms that emphasize data minimization, transparency, and parental or institutional consent mechanisms.

- There is a market shift toward privacy-first alternatives and privacy-focused audit tools, with many schools preferring vendors who provide clear data handling and deletion practices.

- Deployment preference remains with on-premises solutions for higher control and security (over 58% of compliance tool deployments in 2024), though cloud adoption is growing due to scalability and accessibility.

Business Impact

- Noncompliance risks are severe: the FTC can seek civil penalties for each violation of children’s privacy rules, and recent enforcement actions have resulted in multi-million-dollar penalties and suspended contracts.

- Successful platforms build market share by integrating privacy compliance into their core value proposition, fostering trust with both educational buyers and parents.

What is the Children’s Online Privacy Protection Act (COPPA)?

COPPA is the federal law that governs how digital platforms collect, store, and use data from children under 13. It sets strict expectations for consent, security, transparency, and vendor governance. Any kids’ learning platform operating in the U.S. must comply with these rules from the first line of design.

1. Data Collection Rules

COPPA limits what information a platform can collect from children under 13. This includes identifiers, device metadata, usage patterns, and any data that can be linked back to a child. Platforms must justify each data point and collect only what directly supports the learning experience.

2. Parental Consent Before Data Gathering

Platforms must obtain verifiable parental consent before capturing personal information. This applies to registration details, session activity, chat logs, or content uploads. Consent must be traceable, timestamped, and revocable at any time.

3. “Personal Information” Under COPPA

COPPA defines personal information broadly. It includes names, contact details, photos, audio, geolocation, device IDs, IP addresses, and behavioral signals. If the data can identify a child or track their activity, it falls under COPPA.

4. Required Security Standards

Platforms must use strong protection measures to secure children’s data. Encryption, access controls, audit logs, and safe storage are baseline expectations. COPPA requires reasonable safeguards to prevent unauthorized access, sharing, or misuse.

5. Data Minimization Requirements

COPPA enforces strict data minimization, and platforms may collect only the information needed to deliver the learning function. Anything beyond the educational purpose, such as broad analytics or behavior tracking, violates the regulation.

6. Rules for Third-Party Vendors

Third-party tools, such as analytics SDKs, content libraries, and personalization engines, must also follow COPPA. They must avoid collecting identifiers or tracking children across apps. The platform remains responsible for all vendor activity, even when it is the vendor that violates the rules.

7. Mandatory Disclosures and Parental Rights

COPPA requires platforms to explain their data practices clearly. Parents must know what is collected, why it is collected, who can access it, and how long it is stored. They must also have the ability to review, export, or delete their child’s data on request.

8. Restrictions on Geolocation and Other Sensitive Data

Sensitive data types carry additional restrictions. Voice recordings, webcam access, GPS signals, and behavioral patterns require explicit consent and strong justification. Platforms must avoid collecting these unless absolutely necessary for learning.

COPPA sets the foundation for how kids’ learning platforms must operate. Understanding these rules early helps teams design safer, compliant systems that parents and institutions can trust.

How AI Powers COPPA-Compliant Kids’ Learning Platforms

AI can transform how children learn, but it must operate inside strict privacy boundaries. Young users generate sensitive identifiers, behavioral cues, and usage patterns that COPPA treats as regulated data. This section explains how AI can enhance learning while staying fully aligned with COPPA’s expectations.

1. De-Identified or Synthetic Data

AI models must avoid training on raw child data because it exposes identities and increases regulatory risk. De-identified data removes personal identifiers while preserving learning patterns. Synthetic data goes a step further by fully simulating behavior without linking any data to real children.

These approaches allow teams to improve AI accuracy while protecting privacy and keeping training pipelines compliant from the start.
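As a rough illustration of the de-identification step, the sketch below keeps only an allow-list of learning fields and drops everything else before a record reaches a training pipeline. The field names and the `deidentify` helper are hypothetical, chosen only for this example. A real pipeline would also need a review for indirect identifiers that an allow-list alone does not catch.

```python
# Hypothetical allow-list of non-identifying learning fields (an assumption for this sketch).
ALLOWED_FIELDS = {"skill", "score", "attempts", "grade_band"}


def deidentify(record: dict) -> dict:
    """Return a copy of the record that keeps only allow-listed learning fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


# Example raw event containing direct identifiers alongside learning signals.
raw_event = {
    "child_name": "Sam",
    "device_id": "A1B2-C3D4",
    "ip_address": "203.0.113.7",
    "skill": "fractions",
    "score": 0.8,
    "attempts": 3,
}

training_row = deidentify(raw_event)
print(training_row)
```

An allow-list is used here rather than a block-list so that any new field added upstream is excluded by default until someone explicitly approves it.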

2. On-Device Processing

On-device AI reduces the amount of sensitive data that leaves a child’s device. It minimizes transmission, lowers reliance on external servers, and limits which vendors ever see the data. This approach is ideal for real-time feedback, adaptive learning paths, and voice-based interactions.

It also strengthens the platform’s security posture and simplifies compliance audits.

3. No Behavioral Profiling

Behavioral profiling is one of the biggest COPPA risks because it creates long-term patterns tied to individual children. AI must avoid tracking emotions, predicting attention spans, or nudging engagement through behavioral signals. Instead, platforms should use curriculum-based personalization that adapts lessons without collecting unnecessary identifiers.

This keeps the learning experience personalized while staying within safe regulatory boundaries.

4. Consent-Aware Workflows

Every AI-driven feature must verify parental consent before processing data. This includes audio tools, chat-based support, progress predictions, or adaptive recommendations. Automated, consent-aware workflows help ensure that AI never activates when consent is missing, revoked, or expired.

These checks reinforce trust and protect the platform from unintentional policy violations.
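One possible way to express such a check in code is a decorator that refuses to run a feature unless consent for it is on record. This is a minimal sketch: the `ACTIVE_CONSENT` lookup, the feature names, and both example functions are assumptions made for illustration, and a production system would query a consent service instead of an in-memory dictionary.

```python
from functools import wraps


class ConsentRequired(Exception):
    """Raised when a feature is called without active parental consent."""


# Hypothetical in-memory lookup: child token -> features the parent has approved.
ACTIVE_CONSENT = {"child-123": {"adaptive_recommendations"}}


def requires_consent(feature: str):
    """Decorator that blocks a feature unless consent for it is on record."""
    def decorator(func):
        @wraps(func)
        def wrapper(child_token: str, *args, **kwargs):
            approved = ACTIVE_CONSENT.get(child_token, set())
            if feature not in approved:
                raise ConsentRequired(f"No active consent for {feature}")
            return func(child_token, *args, **kwargs)
        return wrapper
    return decorator


@requires_consent("adaptive_recommendations")
def recommend_next_lesson(child_token: str, current_unit: int) -> int:
    # Placeholder logic: simply advance to the next curriculum unit.
    return current_unit + 1


@requires_consent("voice_feedback")
def transcribe_audio(child_token: str, audio: bytes) -> str:
    # Placeholder: never reached unless voice consent exists.
    return "transcript"
```

With this data, `recommend_next_lesson("child-123", 4)` runs normally, while `transcribe_audio("child-123", b"")` raises `ConsentRequired` because no voice consent is recorded.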

5. Real-Time Safety Filters

AI must include filters that protect children from unsafe content, prompts, or interactions. These filters should operate in real time and identify inappropriate language, unsafe questions, or harmful suggestions.

They must enforce safety without storing identifiable information or building long-term behavior logs. This ensures that every interaction remains age-appropriate and compliant.

6. Limited Third-Party Models

Many third-party AI APIs store inputs or reuse them for training, which can create COPPA violations when children’s data is involved. Platforms must restrict the use of such services or sign strict agreements guaranteeing zero storage, zero training, and zero cross-context tracking.

Even then, the safest approach is to keep sensitive inference on internal systems. This reduces exposure and keeps full control of the data lifecycle.

7. Transparent AI Logs

Parents and schools expect clarity around how AI influences a child’s learning experience. Platforms should provide simple, transparent logs explaining what the AI recommended, what data it used, and how decisions were made.

These logs must avoid revealing sensitive details but still offer enough context to build trust. This transparency strengthens compliance and reassures families that AI is used responsibly.

By using de-identified data, consent-aware workflows, restricted vendor models, and real-time safety filters, platforms can innovate with AI confidently while meeting COPPA expectations. This ensures AI enhances learning without compromising trust or compliance.

How 96% of “Educational” Apps Quietly Expose Children’s Data to Third Parties

Most learning apps still treat children as data sources, not protected users. Independent audits show widespread tracking, hidden data flows, and unsafe vendor integrations.

These patterns create risk for parents, regulators, and enterprises building kids’ platforms. A COPPA-aligned system must understand this reality before designing its architecture. Here are some numbers that will establish why kids’ learning platforms must follow strict compliance rules:

1. School Apps Share Student Information with External Entities

A major K-12 review found that 96% of school-used apps share student data with outside organizations. This includes identifiers, usage logs, and device metadata.

These transfers often occur through embedded SDKs that operate without parental visibility.

2. Learning Apps Can Access Location

Safety assessments show that 79% of children’s apps request location access, and 52% can read contacts or calendar data.

These permissions rarely support learning outcomes and increase re-identification risks.

3. Mobile Apps Send Data to Third Parties

A national audit found that 67% of school mobile apps share student information with third parties as part of their core setup. Many transmit data to analytics and marketing services.

Schools often have no visibility into downstream usage.

4. Kids Are Contacted by Strangers

A U.S. parent survey reported that 56% of children keep location sharing turned on across apps.

Even more concerning, 31% were contacted by strangers on their device, and many contacts referenced the child’s location.

Together, these findings show that the standard ed-tech ecosystem remains unsafe for children. If a platform inherits common SDKs, analytics defaults, or unnecessary permissions, it risks replicating these same failures.

A COPPA-compliant platform must counter these patterns with strict data minimization, consent orchestration, vendor governance, and transparent parent controls. This foundation sets the stage for building a trusted, enterprise-ready kids’ learning system.

Step-by-Step Architecture for a COPPA-Compliant Learning Platform

A COPPA-ready architecture must protect children’s data at every layer. It must validate consent before collection, isolate sensitive information, restrict vendor access, and prevent unnecessary data movement.

This section outlines a clear, practical architecture that supports safety, reliability, and long-term trust.

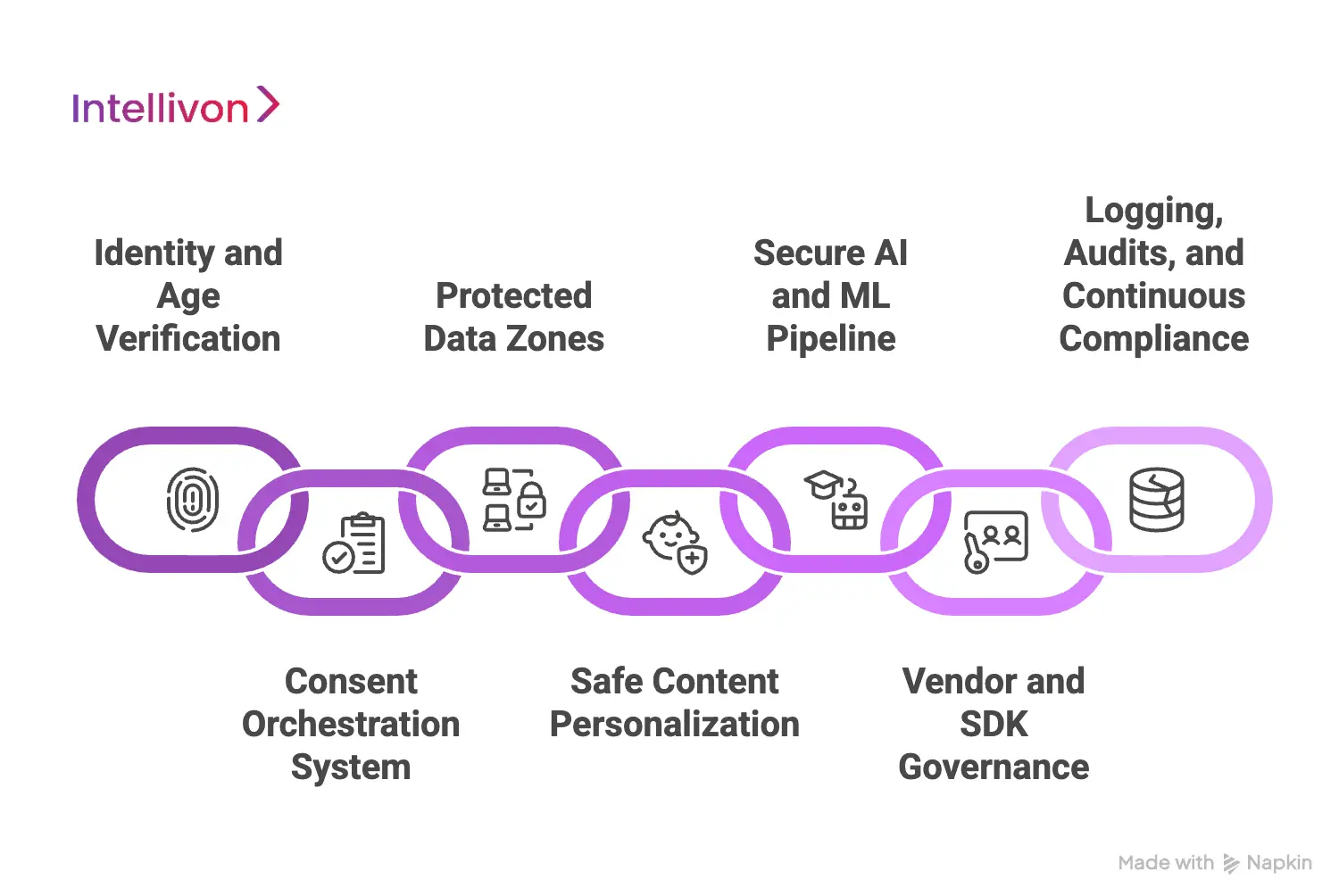

1. Identity and Age Verification Layer

Every child account must start with a verified parent or guardian. Platforms should use mechanisms like payment card checks, government ID validation, or secure parent email authentication. These methods create traceable evidence that consent came from an adult.

Verification logs must be stored securely and remain accessible for audits or regulatory inquiries.

2. Consent Orchestration System

A robust consent engine is essential for COPPA compliance. It must record consent states, handle revocation, track expiration, and block data collection until approval is confirmed.

Real-time consent checks ensure that no AI feature, service, or third-party tool runs without proper authorization. Parents should have an intuitive dashboard to update consent settings whenever needed.
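The consent states described above can be modeled as a small record with a derived state. The sketch below is illustrative only: the `ConsentRecord` class, its field names, and the 365-day validity period in the usage example are assumptions, not values prescribed by COPPA.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class ConsentRecord:
    parent_id: str
    granted_at: datetime
    expires_at: datetime
    revoked_at: Optional[datetime] = None

    def state(self, now: datetime) -> str:
        """Derive the consent state at a given moment; revocation takes priority."""
        if self.revoked_at is not None and self.revoked_at <= now:
            return "revoked"
        if now >= self.expires_at:
            return "expired"
        return "active"


def may_collect(record: Optional[ConsentRecord], now: datetime) -> bool:
    """Collection is allowed only when a record exists and is currently active."""
    return record is not None and record.state(now) == "active"


# Usage: consent granted on 1 Jan 2025 with an assumed 365-day validity.
granted = datetime(2025, 1, 1, tzinfo=timezone.utc)
record = ConsentRecord("parent-1", granted, granted + timedelta(days=365))
print(may_collect(record, granted + timedelta(days=1)))
```

Deriving the state from timestamps, instead of storing a mutable status flag, keeps the original grant and revocation times available for audits.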

3. Protected Data Zones

Child data must stay isolated from general user information. Segregated storage zones, strong access controls, and restricted data flows reduce exposure. Tokenization helps replace sensitive identifiers with secure, non-reversible placeholders.

All data must be encrypted in transit and at rest using modern standards like TLS 1.3 and AES-256.
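One common way to produce non-reversible placeholders is a keyed hash. The sketch below uses HMAC-SHA256 from Python’s standard library. It is a pseudonymization example under stated assumptions, not a vault-based tokenization service: the `tokenize` helper and the demo key are hypothetical, and a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac


def tokenize(identifier: str, secret_key: bytes) -> str:
    """Derive a stable pseudonymous token from an identifier using HMAC-SHA256."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Demo key for illustration only; never hard-code a real key.
demo_key = b"demo-key-do-not-use"
token = tokenize("student-42", demo_key)
print(token)
```

The same identifier and key always yield the same token, so records can still be joined inside the protected zone, while anyone without the key cannot recompute or look up the mapping.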

4. Safe Content Personalization

Personalization must avoid behavioral tracking or predictive profiling. Platforms should rely on curriculum-based or age-based rules to deliver tailored experiences.

On-device processing can support real-time adjustments without exposing data externally. This ensures personalization remains effective without crossing regulatory boundaries.

5. Secure AI and ML Pipeline

AI models must operate in controlled environments with strict privacy safeguards. Training should rely on de-identified or synthetic data, not raw child information. Systems should perform fairness checks, maintain explainability logs, and block unsafe outputs.

Guardrails must protect chat tools, voice features, and interactive elements from generating inappropriate or harmful responses.

6. Vendor and SDK Governance Layer

Third-party tools often create the biggest compliance risks. Platforms must maintain an approved vendor list, restrict analytics SDKs, and ensure all partners follow COPPA requirements.

Vendor agreements must prohibit data storage, profiling, or cross-app tracking. All integrations should run through periodic audits to confirm continued compliance.
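A periodic audit of this kind could start from a simple comparison between what each integration declares it receives and what the approved vendor list permits. In the sketch below, the vendor names, data categories, and the `audit_integrations` function are all hypothetical examples.

```python
# Hypothetical approved-vendor list: SDK name -> data categories it may receive.
APPROVED_VENDORS = {
    "crash-reporter": {"app_version", "os_version"},
    "content-cdn": set(),
}


def audit_integrations(integrations: dict) -> list:
    """Return findings for unapproved SDKs or data categories beyond what was approved."""
    findings = []
    for sdk in sorted(integrations):
        if sdk not in APPROVED_VENDORS:
            findings.append(f"{sdk}: not on approved vendor list")
            continue
        extra = set(integrations[sdk]) - APPROVED_VENDORS[sdk]
        if extra:
            findings.append(f"{sdk}: unapproved data categories {sorted(extra)}")
    return findings


# Usage: what the current build declares it sends to each SDK.
declared = {
    "crash-reporter": {"app_version", "device_id"},
    "ad-network": {"advertising_id"},
}
for finding in audit_integrations(declared):
    print(finding)
```

Running a check like this in a build pipeline would surface a newly added SDK before release, although it only covers declared data flows and does not replace network-level inspection.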

7. Logging, Audits, and Continuous Compliance

A COPPA-compliant system depends on continuous oversight. Tamper-proof logs record who accessed data, which tools processed it, and when actions occurred. Routine audits help identify gaps in permissions, retention, and vendor usage.

Safety tests must evaluate browsing features, voice tools, chat components, and adaptive content regularly.
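One way to make access logs resistant to silent edits is hash chaining, where each entry stores a hash that covers the previous entry. Strictly speaking this makes tampering detectable rather than impossible. The sketch below is a minimal illustration: the entry layout and function names are assumptions, and a production system would also anchor the latest hash somewhere the log writer cannot modify.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with a canonical form of the event."""
    payload = json.dumps(event, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({"event": event, "prev_hash": prev_hash,
                "hash": _entry_hash(prev_hash, event)})


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"actor": "teacher-7", "action": "view_progress"})
append_entry(audit_log, {"actor": "svc-recs", "action": "read_scores"})
print(verify_chain(audit_log))
```

If someone later edits the first event, `verify_chain` returns `False` because the stored hash no longer matches the recomputed one.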

A strong architecture is the foundation of a safe, compliant kids’ learning platform. This design approach keeps systems compliant, scalable, and trusted across schools, districts, and families.

Feature Checklist for a COPPA-Compliant Kids’ Learning App

A COPPA-compliant platform must deliver a safe, age-appropriate experience without collecting unnecessary data. The following checklist highlights the core features that help teams build learning tools that are both engaging and compliant:

1. Parent Dashboard

Parents need simple tools to understand and manage their child’s activity. A dashboard should display permissions, usage patterns, saved progress, and consent history.

It also must allow parents to adjust settings, revoke permissions, and request deletion at any time. This creates transparency and ensures parents maintain full control.

2. Safe Chat and Interactions

Any chat or interaction tool must follow strict safety rules. Pre-approved messages, filtered language models, and automated moderation help prevent unsafe or inappropriate communication.

AI-based filters must work in real time without storing identifiers or conversation history. This keeps communication age-appropriate and secure.

3. Anonymized Progress Tracking

A learning platform must avoid storing identifiable progress logs. Instead, it should track performance using anonymized, tokenized, or on-device records.

This protects the child’s identity while still enabling teachers and parents to understand learning patterns. It also reduces long-term data retention risk.

4. Secure Login for Kids

Children should never log in using personal emails, phone numbers, or social accounts. A secure, COPPA-friendly login system relies on parent-managed credentials or device-level authentication.

This prevents exposure to third-party login systems and reduces the chance of identity leakage. It also simplifies onboarding for families.

5. Offline-First Learning Modes

Offline access limits the amount of data transmitted across servers. It allows children to learn safely without generating unnecessary logs or exposing sensitive activity.

Syncing should occur only after verifying consent and applying data minimization rules. This reduces vendor touchpoints and strengthens privacy.

6. Parent-Controlled Notifications

All notifications must route through parents, not children. Platforms should allow parents to enable, disable, or customize alerts according to their preferences.

This prevents direct engagement tactics that can influence or pressure young users. It also aligns with COPPA’s restrictions on direct communication.

7. Ad-Free Experience

Kids’ learning apps must operate without advertising or behavioral targeting. Ads introduce trackers, cross-app identifiers, and unauthorized data flows that directly violate COPPA.

An ad-free model ensures children engage with content safely without manipulation. This also improves user trust and reduces compliance complexity.

These features form the core of a compliant and trustworthy kids’ learning experience. This checklist becomes even more powerful when combined with strong architecture and continuous compliance workflows.

How to Implement Verifiable Parental Consent (VPC)

Verifiable Parental Consent is the core requirement of COPPA and the foundation of every compliant kids’ learning platform. The system must confirm that a real parent has approved data collection before the platform stores or processes any information.

It must also allow parents to withdraw consent instantly, triggering automated restrictions across the platform. This section explains the safest and most reliable ways to implement VPC:

1. Payment Card Verification

Payment card verification is one of the most reliable VPC methods. A small, temporary charge confirms that the user is an adult with an active financial instrument. Platforms must store only confirmation metadata, not full card information, to reduce security exposure.

This method is widely accepted by regulators and is easy for parents to complete.

2. Government ID and Selfie Match

Some platforms use a secure ID upload paired with a real-time selfie check. This method verifies identity with high accuracy and reduces the chance of fraudulent approvals. The system should immediately delete raw images after verification to protect privacy.

This approach works well for enterprise-grade learning platforms with strict compliance needs.

3. Knowledge-Based Verification Questions

Platforms can confirm parent identity through personalized questions based on public or private records. These questions must be hard enough to deter children but simple enough for adults to answer quickly.

This method helps validate identity without collecting additional sensitive information. It works best when combined with other consent signals.

4. Signed Consent Forms with Proof of Identity

Some districts and institutions require written consent. Parents can sign a digital or physical form and upload a document for verification.

This method provides strong audit evidence and aligns with many school compliance workflows. It is slower than other methods but ideal for institutional rollouts.

5. API-Based Consent Platforms

Specialized consent platforms can automate the entire VPC flow. They validate identity, record timestamps, store consent states, and handle revocations in real time.

These systems help enterprises scale consent across multiple devices and users without increasing operational overhead. They also create unified logs for audits and compliance monitoring.

6. Consent Logging and Revocation Tracking

Every consent decision must be logged, timestamped, and tied to a verified parent. These logs help platforms prove compliance during audits or investigations.

Revocation must trigger immediate restrictions, including halting data collection and blocking access to features that rely on personal information. This ensures the platform stays compliant even as consent changes over time.

A strong VPC framework protects children and keeps the platform aligned with COPPA requirements. This foundation supports safer AI features, stronger privacy controls, and long-term trust.

How We Build A COPPA-Compliant Learning Platform

At Intellivon, we design kids’ learning platforms with privacy as the central architecture, not an afterthought. Every layer of the system is engineered to protect young users, enforce parental control, and eliminate unnecessary data exposure. We combine compliance-first engineering with scalable cloud infrastructure, secure AI pipelines, and strict vendor governance. Here is how we build it:

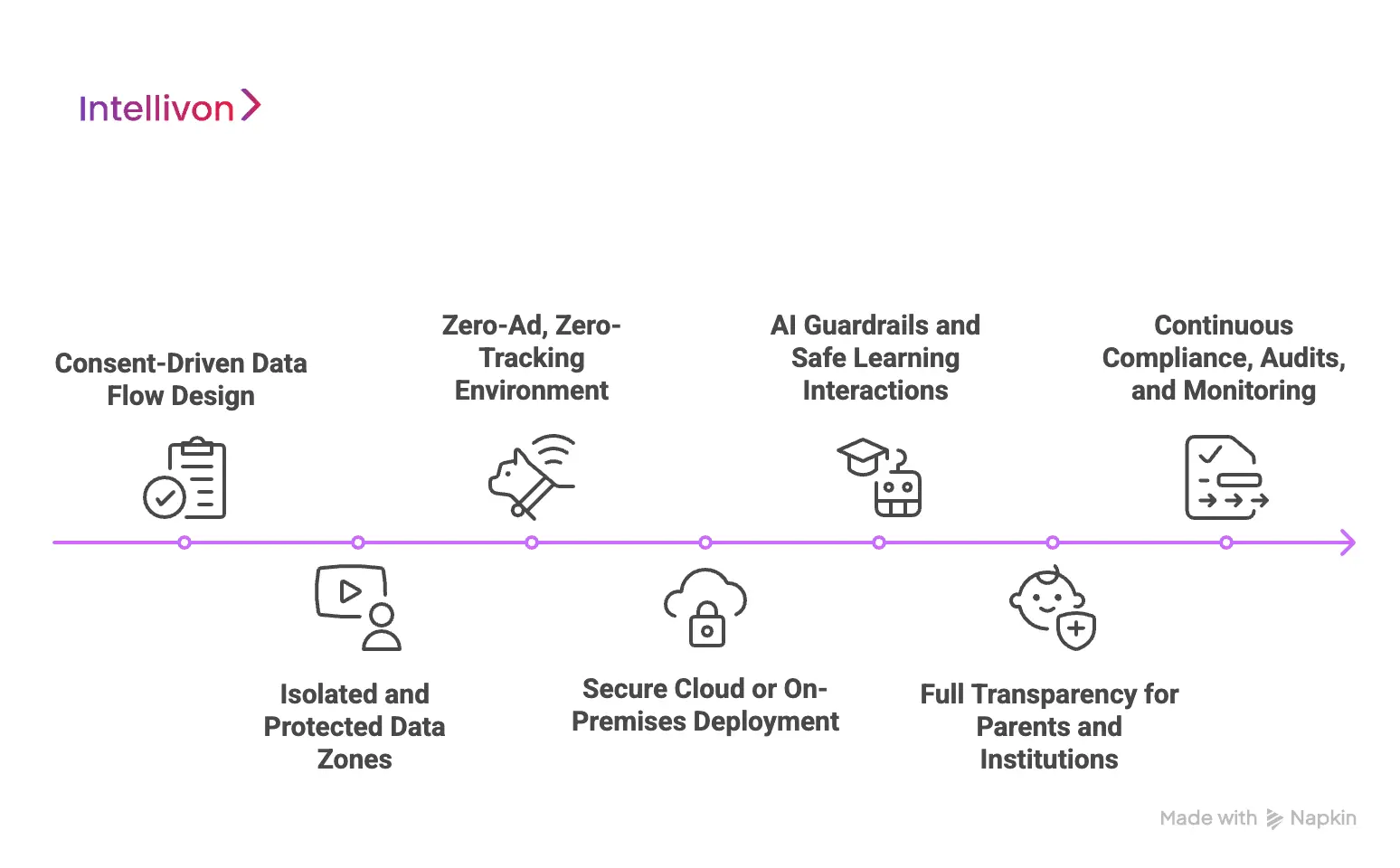

1. Consent-Driven Data Flow Design

We build data flows that activate only after verifiable parental consent is validated. Every service, AI workflow, and API checks consent status in real time before processing data.

This ensures the platform never stores or uses information without clear authorization. Consent remains the first gate for every interaction.

2. Isolated and Protected Data Zones

Our architectures isolate child data from general user data to reduce risk. Sensitive information stays in dedicated zones with restricted access, tokenized identifiers, and encryption at every stage.

This layered approach prevents accidental exposure and supports clean audit trails. It also ensures vendors never see data they do not need.

3. Zero-Ad, Zero-Tracking Environment

We eliminate behavioral trackers, third-party ads, and any SDK that profiles children. Intellivon maintains a strict vendor governance framework that verifies every partner’s compliance posture.

By controlling the ecosystem tightly, we prevent hidden tracking and unauthorized data flows. This keeps the platform safe, predictable, and compliant.

4. Secure Cloud or On-Premises Deployment

Enterprises can deploy Intellivon-built platforms on AWS, Azure, GCP, or secure on-prem infrastructure. Each deployment includes encrypted pipelines, hardened environments, and automated monitoring.

This flexibility helps schools and districts choose the setup that aligns with their internal policies. Compliance remains consistent across all environments.

5. AI Guardrails and Safe Learning Interactions

Intellivon’s AI models use de-identified or synthetic datasets and operate behind strict guardrails. We prevent behavioral profiling, enforce log minimization, and block external data transfers that could violate COPPA.

Real-time safety filters protect chat, audio, and personalized interactions without storing sensitive history. AI becomes a safe enhancement, not a risk vector.

6. Full Transparency for Parents and Institutions

Parents and institutions expect clarity, and we design for it. Our platforms include dashboards showing consent states, data usage, access logs, and deletion requests.

This simplifies oversight and strengthens trust across families, districts, and regulators. Transparency becomes a core feature, not a compliance box.

7. Continuous Compliance, Audits, and Monitoring

We build automated auditing tools that monitor permissions, data flows, retention schedules, and vendor activity. Routine tests identify gaps early and keep the platform aligned with evolving COPPA standards.

Enterprises gain a continuously monitored environment that scales without increasing risk.
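To make the idea of retention enforcement concrete, here is a generic sketch of a purge step that drops records older than a retention window. It is not Intellivon’s actual tooling. The `purge_expired` function, the record layout, and the 90-day window are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta, timezone


def purge_expired(records: list, now: datetime, retention: timedelta) -> list:
    """Keep only records created within the retention window; older ones are dropped."""
    cutoff = now - retention
    return [record for record in records if record["created_at"] > cutoff]


# Usage with an assumed 90-day retention window.
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "created_at": now - timedelta(days=10)},
    {"id": 2, "created_at": now - timedelta(days=100)},
]
kept = purge_expired(records, now, timedelta(days=90))
print([record["id"] for record in kept])
```

Passing `now` in as a parameter, rather than reading the clock inside the function, makes the purge rule easy to test and to replay during an audit.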

Intellivon builds kids’ learning platforms that combine privacy, safety, and scalable technology. By enforcing strict consent systems, isolating sensitive data, governing vendors, and securing AI workflows, we help enterprises meet COPPA standards confidently. This compliance-first foundation creates a trusted learning environment that serves children, families, and institutions responsibly.

Common Mistakes That Break COPPA Compliance in Kids’ Learning Platforms

Many kids’ learning platforms break COPPA rules because they rely on default settings, permissive permissions, and unmonitored integrations. These issues often remain invisible until schools, districts, or regulators review the system. This section outlines the most common pitfalls and how our compliance-first engineering approach prevents them.

1. Using Default Firebase or Ad SDK Settings

Most analytics and ad SDKs collect identifiers automatically. They send device IDs, session behavior, and metadata to external servers, often without any visibility for the platform. These flows violate COPPA because they track children across apps and contexts.

Our teams audit every integration, remove unsafe components, and configure analytics so they operate only within strict, privacy-safe boundaries.

2. Allowing Ads from External Networks

Advertising networks introduce hidden trackers that operate outside the platform’s control. Even a simple embedded video player can load cookies, behavioral scripts, and unknown third-party domains. These mechanisms bypass consent and create silent compliance failures.

We replace ad-dependent components with closed, child-safe content systems that never rely on third-party tracking infrastructure.

3. Kids Signing Up Using Emails or Phone Numbers

Child-facing sign-up flows collect identifiers that COPPA treats as personal information. Email addresses and phone numbers can be linked across platforms, creating exposure far beyond the learning app. Many systems still use these flows because they seem simple to implement.

Our approach routes all account creation through parents or guardians, eliminating the need for children to submit any personal contact details.

4. Storing Voice or Video Recordings Without Explicit Consent

Voice notes, audio fragments, and webcam captures can reveal far more than intended. Without explicit parental approval, storing or analyzing these files is a clear COPPA violation. Some platforms also feed these recordings into model-training pipelines, multiplying the risk.

We use de-identified pipelines, strict consent checks, and short-lived processing windows to ensure sensitive media is handled safely.

5. Collecting Geolocation or Behavioral Data

Precise geolocation is heavily restricted under COPPA, yet many apps still request access by default. Behavioral signals, like movement, emotional cues, or time-on-task, can also be treated as identifying data if linked to a child. These permissions rarely serve educational needs.

Our data-minimization framework disables non-critical permissions from the start and removes behavioral signals that are not necessary for learning.

6. Weak or Missing Data Deletion Workflows

Parents must be able to delete their child’s information quickly and completely. Many platforms cannot honor this because data is scattered across logs, backups, and third-party systems. Slow or incomplete deletion creates regulatory and reputational risk.

A unified deletion engine ensures that every record, whether internal or vendor-held, is removed consistently when families request it.
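A deletion engine of this kind can be sketched as a registry of per-store delete functions that all run for a single request, with the outcome recorded for each store. The class name, store names, and in-memory stores below are hypothetical. Real deleters would call databases, backup systems, and vendor APIs.

```python
from typing import Callable, Dict

# Each deleter takes a child token and returns how many records it removed.
Deleter = Callable[[str], int]


class DeletionEngine:
    def __init__(self) -> None:
        self._deleters: Dict[str, Deleter] = {}

    def register(self, store_name: str, deleter: Deleter) -> None:
        self._deleters[store_name] = deleter

    def delete_child(self, child_token: str) -> Dict[str, str]:
        """Run every registered deleter and report the outcome per store."""
        report = {}
        for store_name, deleter in self._deleters.items():
            try:
                report[store_name] = f"deleted {deleter(child_token)}"
            except Exception as exc:
                # Keep going so one failing store does not block the others.
                report[store_name] = f"failed: {exc}"
        return report


# Usage with one in-memory store and one simulated vendor failure.
progress_db = {"child-123": [1, 2, 3], "child-999": [4]}


def delete_progress(child_token: str) -> int:
    return len(progress_db.pop(child_token, []))


def delete_at_vendor(child_token: str) -> int:
    raise RuntimeError("vendor API unreachable")


engine = DeletionEngine()
engine.register("progress_db", delete_progress)
engine.register("vendor_x", delete_at_vendor)
deletion_report = engine.delete_child("child-123")
print(deletion_report)
```

The per-store report matters because a failed vendor deletion needs a retry and a record, not a silent pass.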

7. Unmonitored Third-Party Integrations

Most COPPA failures come from vendors running background processes that the platform does not fully control. Third-party tools often store inputs, reuse data for model training, or send information to external services. When this happens, regulators hold the platform responsible.

Strict vendor governance, tokenized access, and periodic audits keep integrations safe and prevent non-compliant data flows before they occur.

COPPA violations usually stem from hidden data flows, unsafe defaults, or unchecked vendors. With stronger controls, platforms can avoid these risks and deliver safer digital experiences for children. Intellivon helps enterprises build systems that enforce consent, reduce exposure, and operate within a secure, privacy-first ecosystem that families and institutions trust.

Top COPPA-Compliant Kids’ Learning Platforms

Several learning platforms have built strong privacy practices that align with COPPA requirements. These platforms minimize data collection, verify parental consent, and give families clear control over how information is used. They also follow strict vendor governance and maintain transparent documentation.

1. HelpKidzLearn Hub

HelpKidzLearn is a well-known example of transparent COPPA compliance. The platform limits the data it collects, avoids storing full names, and uses anonymized progress information to support learning. It also relies on school-based consent flows, which help ensure verified approval before any child data is processed.

Independent certifications, including iKeepSafe, strengthen its credibility and demonstrate ongoing privacy oversight.

2. Nessy

Nessy maintains clear COPPA-aligned policies for U.S. learners and limits the data it collects from children. The platform uses parent or school-based consent, ensuring only authorized adults approve data access.

It avoids collecting full names and relies on anonymized learning progress to support literacy development. These controls give schools and families predictable, privacy-safe learning environments.

3. Buddy.ai

Buddy.ai follows kidSAFE COPPA-certified standards and uses privacy-first workflows for its voice-based learning system. The app minimizes audio retention and avoids unnecessary sharing with external vendors.

All child interactions run within a controlled environment designed for young learners. This makes the conversational AI experience both engaging and compliant.

4. Kidzovo

Kidzovo holds kidSAFE+ COPPA certification and operates as an ad-free learning ecosystem. The platform collects only essential usage data and avoids identifiers that could expose children to tracking risks.

It also maintains strict review processes for content and interactions. This makes Kidzovo a strong example of how early-learning apps can stay safe without limiting creativity or engagement.

5. Seesaw

Seesaw protects children’s information through COPPA notices, school-based consent flows, and iKeepSafe certifications. The platform commits to collecting only what schools or guardians authorize and provides clear privacy documentation for educators and families.

It also uses encrypted environments and controlled access for student work. These features make Seesaw a trusted choice for K–12 classrooms.

Conclusion

Building a COPPA-compliant kids’ learning platform requires more than meeting regulatory checkboxes. It demands a design approach that protects children at every touchpoint: how data is collected, how consent is managed, how AI operates, and how vendors are governed. Platforms that embed privacy at the architecture level earn long-term trust from families, schools, and districts.

As expectations rise and enforcement strengthens, compliance becomes a competitive advantage rather than an obligation. By focusing on transparency, data minimization, consent-first workflows, and continuous oversight, learning platforms can stay safe, scalable, and future-ready. Privacy becomes the foundation for better digital learning experiences.

Build a COPPA-Compliant Kids’ Learning Platform With Intellivon

At Intellivon, we build privacy-first kids’ learning platforms that meet strict COPPA requirements from day one. Our systems protect children’s data, enforce verifiable parental consent, and minimize exposure to third-party risks, while delivering engaging, adaptive learning experiences. Every deployment is engineered to support compliance-heavy environments, institutional trust, and long-term platform scalability.

Why Partner With Intellivon?

- Compliance-Driven Architecture: Built to enforce consent-first workflows, isolate child data, eliminate unnecessary identifiers, and maintain strict data-minimization at every layer.

- Secure AI & Personalization: De-identified or synthetic training pipelines, on-device inference, and real-time guardrails deliver safe, age-appropriate intelligence without behavioral profiling.

- Vendor Governance & Zero-Tracking: A vetted ecosystem of SDKs, strict vendor controls, and zero-ad environments prevent hidden tracking, unauthorized data flows, and cross-app profiling.

- Consent Orchestration Engine: Automated, verifiable parental consent flows with live revocation controls, audit-ready logs, and institution-level management dashboards.

- Enterprise-Scale Deployment: Cloud or on-prem options with encryption across all layers, hardened environments, and continuous monitoring for schools, districts, and large EdTech providers.

- Privacy Transparency: Parent dashboards, activity views, and clear disclosure workflows strengthen trust with families and align with school and district expectations.

- Continuous Compliance Oversight: Automated audits, data-flow checks, retention enforcement, and vendor monitoring ensure the platform remains COPPA-aligned as it grows.

Book a strategy call with Intellivon to build a COPPA-compliant kids’ learning platform that protects children, meets regulatory expectations, and delivers safe, scalable digital learning experiences.

FAQs

Q1. What makes a kids’ learning platform COPPA-compliant?

A1. A COPPA-compliant platform collects only essential data and obtains verifiable parental consent before use. It avoids behavioral tracking, restricts vendor access, and provides parents with full visibility into data practices. Strong security and transparent disclosures complete the compliance framework.

Q2. What data can a COPPA-compliant platform collect from children under 13?

A2. Only information required to deliver the learning experience can be collected. This may include anonymized progress data, limited device metadata, or activity logs approved by parents. Anything beyond the core educational purpose is restricted.

Q3. How does parental consent work in a COPPA-regulated platform?

A3. Parents must verify their identity and approve data collection before a child uses the platform. Consent must be logged, timestamped, and revocable at any time. The platform must disable data processing immediately if consent is withdrawn.

Q4. Can AI be used safely in a COPPA-compliant learning platform?

A4. Yes, when AI operates through de-identified data, consent-aware workflows, and strict guardrails. The system must avoid behavioral profiling and limit third-party AI providers. Safe AI enhances learning without increasing privacy risk.

Q5. What happens if a kids’ learning platform violates COPPA?

A5. Noncompliance can lead to regulatory penalties, suspended school contracts, and reputational damage. Platforms may be required to delete data, shut down features, or rebuild consent systems. Strong documentation and audits help prevent these issues.