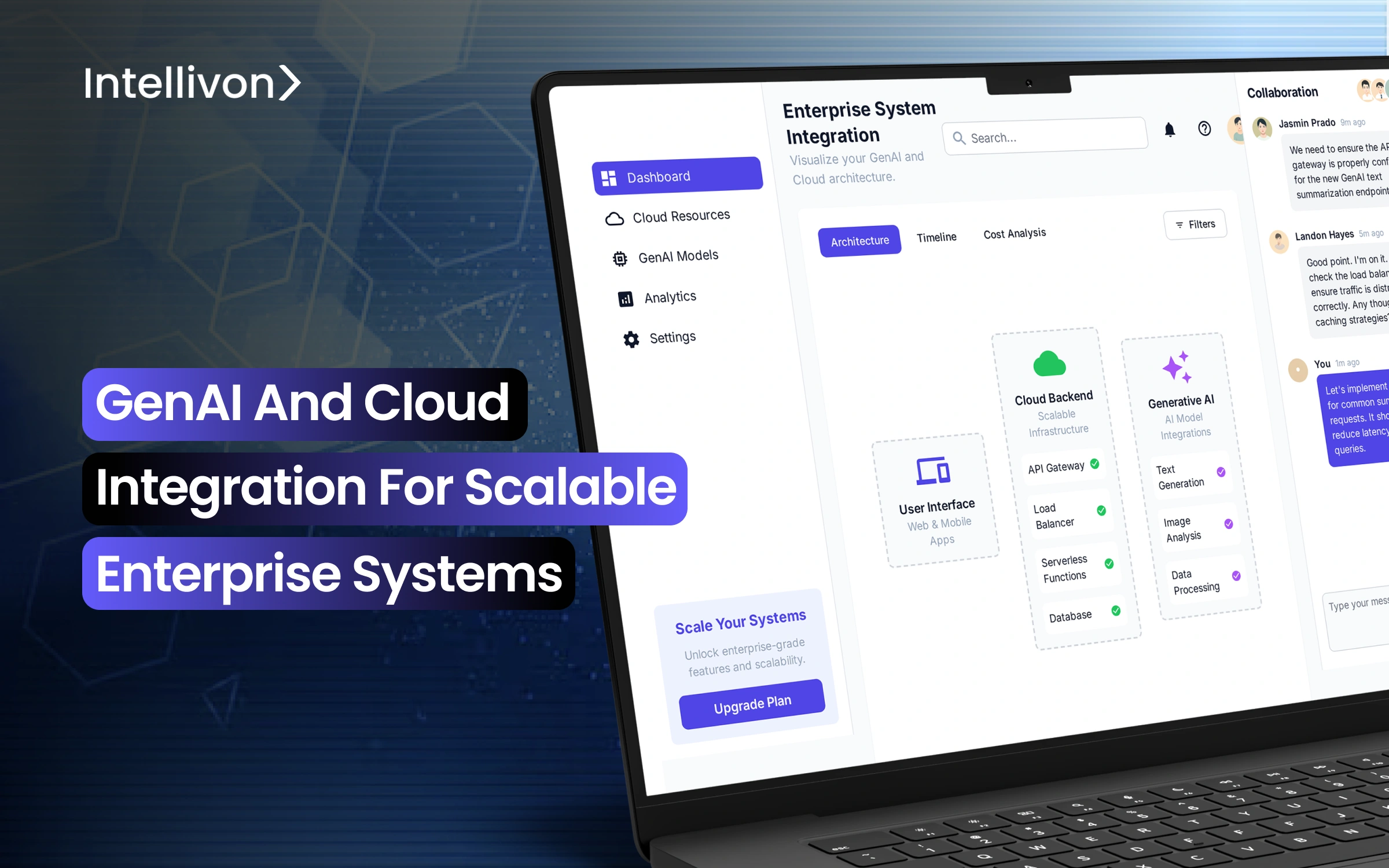

GenAI and cloud have become inseparable in enterprise AI transformation, forming an integrated foundation that largely determines whether AI initiatives succeed or fail. Enterprises that integrate GenAI with cloud platforms report an ROI of 171–295% within three years.

The real challenge facing organizations today is not whether to adopt AI, but whether their systems can scale these models without breaking budgets, overloading infrastructure, or failing compliance checks. Traditional IT architectures struggle to keep up with workloads that grow unpredictably, with compute demands that can spike from near zero to massive within hours. Cloud environments offer the most practical path forward because their elastic capacity can absorb these demand surges.

At Intellivon, we specialize in building scalable platforms that integrate GenAI and cloud, bringing together high-performance compute, enterprise-grade security, and advanced data orchestration. In this blog, we’ll break down what GenAI–cloud-integrated systems are, how we build them from the ground up, and how we make them enterprise-ready.

What Are GenAI–Cloud Integrated Enterprise Systems?

Generative AI by itself is powerful, but it cannot deliver enterprise-wide value unless it is embedded in the right infrastructure. Cloud platforms bring the scalability, governance, and resilience needed to make AI practical at business scale. Combined, the two connect data, models, and workflows into a single, scalable framework.

A GenAI–cloud-integrated enterprise system is exactly that: a platform where generative AI models are tightly fused with cloud-native capabilities. Data flows in through secure cloud pipelines, models are trained and deployed with elastic compute, and outputs are delivered back into ERP, CRM, EHR, or supply chain systems without breaking compliance. Instead of AI experiments running in isolation, the system becomes part of the enterprise backbone.

What makes these systems unique is their ability to adapt. Sensitive workloads can stay in private clouds for regulatory reasons, while heavier tasks run on public cloud GPUs or TPUs. Multi-cloud setups allow workloads to shift across AWS, Azure, and GCP for resilience and performance. This flexibility turns generative AI from a departmental pilot into a governed, enterprise-wide capability that grows as the business grows.

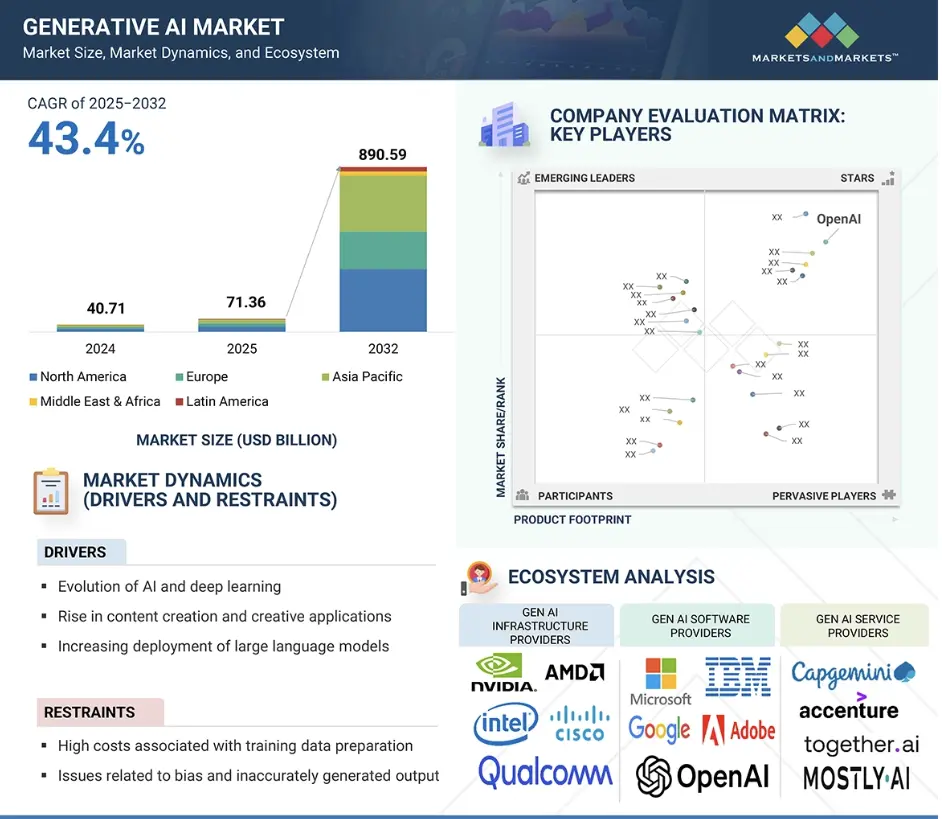

Key Takeaways from the GenAI and Cloud Platform Market

GenAI–cloud integration is driving scalable enterprise systems at a pace few technologies have matched. The global generative AI market is projected to grow from $71.36 billion in 2025 to $890.59 billion by 2032, powered by cloud-native deployments, embedded enterprise workflows, and expansion into non-AI-native industries.

- With over 89% of enterprises advancing GenAI initiatives in 2025 and 92% planning increased investment by 2027, adoption has become more strategic than experimental.

- Enterprises integrating GenAI with cloud platforms achieve an ROI of 171–295% within three years.

- Forrester reports a median payback of six months for modern integration platforms, driven by productivity gains and reduced infrastructure costs.

- IDC finds that companies average $3.50 in value for every $1 spent, with 9.3× ROI possible in best-fit workflows.

- 84% of organizations move GenAI projects from idea to production in under six months, while 74% achieve measurable ROI.

- Development teams using integrated GenAI-cloud solutions report 35–50% productivity gains, especially in customer support, predictive maintenance, analytics, and reporting.

- Embedding GenAI into core cloud software (Microsoft Copilot, Salesforce Einstein GPT, Adobe Firefly) drives immediate ROI and enterprise-wide adoption.

- Manufacturing, finance, and healthcare organizations report up to 50% less downtime, faster workflows, and stronger compliance.

- Hybrid and multi-cloud deployments are expanding at a CAGR of 33% through 2030, driven by the need for cost control, regulatory compliance, and resilience.

- NLP and generative AI are the fastest-growing segments, driving automation across business processes.

- Pre-trained models and cloud APIs democratize AI, enabling faster deployment and time-to-value across functions.

- Asia-Pacific leads future growth, driven by sovereign AI initiatives and large-scale infrastructure investments.

Why Enterprises Need GenAI and Cloud Integration

Generative AI has moved fast inside enterprises, but most adoption is still fragmented. Teams launch pilots in silos, with a chatbot here and an analytics assistant there. However, scaling them across the organization exposes gaps in governance, cost control, and compliance. To transform these experiments into lasting business systems, enterprises need GenAI and cloud integration working together as one foundation.

1. Moving Beyond Pilots

Isolated pilots provide proof of potential but fail to deliver at enterprise scale. Without integration into enterprise systems and cloud environments, AI workloads remain siloed, creating duplication and governance blind spots.

2. What Cloud Brings to GenAI

Cloud integration provides elasticity, performance, and resilience that traditional IT cannot. Enterprises can scale workloads on demand, secure data pipelines, and run GenAI models directly on top of ERP, CRM, and EHR data. Compliance frameworks built into cloud environments help keep AI deployments regulator-ready from day one.

3. The Leadership Imperative

For decision makers, cloud integration turns generative AI from a tactical experiment into an enterprise-wide capability. Instead of scattered pilots, organizations gain copilots across business functions, unified data pipelines, and measurable ROI.

GenAI on its own is powerful, but without cloud integration it struggles to reach the scale enterprises need. Together, they create systems that are elastic, secure, and business-ready: a foundation for growth that leaders can trust.

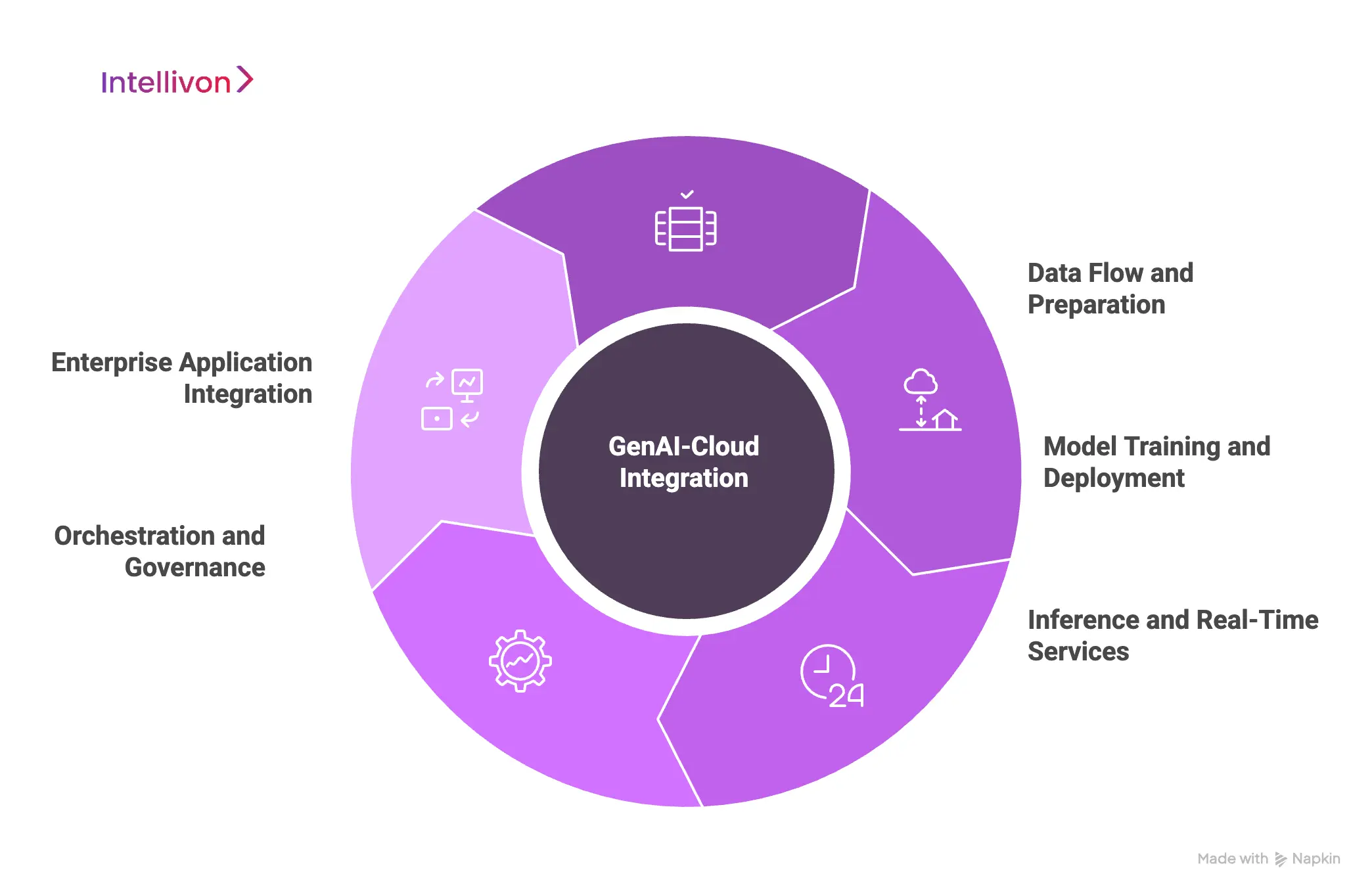

How These GenAI-Cloud Integrated Systems Work

Enterprises often see GenAI as a powerful engine, but without cloud integration, that engine has nowhere to run. What makes these systems effective is not just the models themselves, but the way data, infrastructure, and business applications are connected into a continuous cycle of intelligence and action.

1. Data Flow and Preparation

The process begins with enterprise data. Information from ERP, CRM, EHR, and knowledge repositories flows through cloud-native pipelines. Here, governance tools enforce lineage, compliance, and quality checks to ensure that what enters the system is trustworthy and usable.
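As a rough illustration of what lineage and quality checks can mean in code, here is a minimal Python sketch of an ingestion step that rejects incomplete records and stamps accepted ones with lineage metadata. The `REQUIRED_FIELDS` schema, the `GovernedRecord` type, and the `crm.accounts` source name are hypothetical; in practice this job is usually handled by a data catalog or governance platform rather than hand-written code.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical schema: fields every record must carry before it may reach a model.
REQUIRED_FIELDS = {"customer_id", "region", "notes"}


@dataclass
class GovernedRecord:
    payload: dict
    source: str                                  # originating system, e.g. "crm.accounts"
    lineage: list = field(default_factory=list)  # ordered history of pipeline steps

    def stamp(self, step: str) -> None:
        """Append a lineage entry recording which step touched the record and when."""
        self.lineage.append({"step": step, "at": datetime.now(timezone.utc).isoformat()})


def quality_check(record: GovernedRecord) -> list:
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    missing = REQUIRED_FIELDS - set(record.payload)
    if missing:
        problems.append(f"missing fields: {sorted(missing)}")
    if not str(record.payload.get("notes", "")).strip():
        problems.append("empty notes")
    return problems


def ingest(raw_rows, source: str):
    """Split raw rows into accepted (governed) records and rejected ones with reasons."""
    accepted, rejected = [], []
    for row in raw_rows:
        rec = GovernedRecord(payload=row, source=source)
        rec.stamp("ingested")
        issues = quality_check(rec)
        if issues:
            rejected.append((rec, issues))
        else:
            rec.stamp("quality_passed")
            accepted.append(rec)
    return accepted, rejected
```

Rejected records keep their reasons, so data stewards can fix them at the source instead of letting incomplete data silently reach a model.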

2. Model Training and Deployment

Once prepared, the data powers generative AI models. These models may be fine-tuned for specific industries or workflows. Training and deployment happen in elastic cloud environments, where workloads can scale up or down depending on demand.

3. Inference and Real-Time Services

When the models generate outputs, they are delivered through cloud APIs. This allows enterprises to run copilots, analytics assistants, and automation tools in real time. Latency is reduced by routing workloads across hybrid or multi-cloud setups for resilience and performance.

4. Orchestration and Governance

At the center sits the orchestration layer, which is the integration platform that manages workflows, controls costs, and enforces compliance. Leaders gain dashboards to monitor model health, usage, and ROI, while IT teams automate retraining and updates through MLOps pipelines.

5. Enterprise Application Integration

Finally, outputs are pushed back into enterprise applications. Whether it is SAP for finance, Salesforce for customer engagement, or Epic for healthcare, GenAI is embedded directly into the tools employees already use. This ensures adoption without adding friction.

These systems operate as a closed loop: data flows in, models generate intelligence, insights return to workflows, and monitoring drives continuous improvement. This integration is what transforms generative AI from a set of pilots into a true enterprise system.

How GenAI–Cloud Integrated Systems Are Used Across Enterprise Use Cases

Integrated systems prove their value only when tied to real workflows. Across industries, enterprises are embedding GenAI into cloud ecosystems to cut costs, accelerate decisions, and strengthen compliance. Here are the most impactful use cases today.

1. Customer Experience and Engagement

AI copilots integrated into cloud-based contact centers deliver real-time, context-aware support. They handle multilingual queries, analyze sentiment, and suggest next-best actions, reducing customer wait times while boosting satisfaction. These systems transform service centers from cost drivers into growth enablers.

2. Operations and Supply Chain

GenAI models running on cloud infrastructure improve demand forecasting, optimize inventory, and provide visibility across global logistics. Hybrid deployments safeguard sensitive supplier data, while multi-cloud setups enable scale and resilience. The result is fewer disruptions, reduced costs, and faster fulfillment.

3. Finance and Risk Management

Financial institutions use GenAI–cloud integration to analyze transactions, detect anomalies, and automate compliance workflows. By combining AI intelligence with cloud elasticity, organizations can process data in real time, improve risk scoring, and maintain regulator-ready reporting with greater accuracy.

4. Healthcare and Life Sciences

Healthcare providers and research institutions embed GenAI into diagnostics, patient engagement, and accelerated drug discovery. Hybrid cloud ensures compliance with strict data protection requirements, while elastic compute accelerates training and analysis. This integration reduces costs, shortens discovery cycles, and enhances care outcomes.

5. Legal and Compliance

Legal teams use integrated systems to review contracts, analyze case law, and automate compliance reports at scale. GenAI copilots embedded in cloud repositories make it possible to search millions of documents securely, ensuring speed without compromising governance.

6. Workforce and HR Management

Cloud-integrated copilots assist with recruitment, onboarding, policy training, and workforce analytics. Multi-cloud infrastructure enables consistent performance across regions while reducing administrative overhead. Employees benefit from faster onboarding and personalized learning paths, while HR leaders gain actionable insights.

7. Product Innovation and R&D

Manufacturers and product teams apply GenAI–cloud systems to simulate designs, test materials, and accelerate prototyping. Elastic compute enables faster iterations, while hybrid deployments protect intellectual property. This shortens innovation cycles and helps enterprises bring products to market more competitively.

8. IT Operations and Cybersecurity

Integrated systems support IT and security teams by monitoring logs, detecting anomalies, and automating responses. Orchestration across cloud providers ensures resilience, while GenAI copilots surface insights faster than traditional tools. This reduces downtime and strengthens enterprise defenses against evolving threats.

These use cases show how integration makes GenAI practical at scale. By embedding intelligence into existing workflows, enterprises achieve measurable outcomes: faster decisions, lower costs, and greater resilience across every major business function.

Enterprise Examples of GenAI and Cloud System Adoption

1. Pfizer

Pfizer has adopted hybrid and multi-cloud architectures to accelerate its R&D operations with generative AI. By combining on-premises compliance controls with elastic cloud compute, the company shortens discovery timelines, improves research accuracy, and scales analysis globally without regulatory compromise.

2. Walmart

Walmart leverages Azure and GCP-based copilots integrated into its supply chain systems. These platforms help forecast demand, optimize logistics, and streamline inventory management across thousands of stores and suppliers. The result is faster replenishment cycles, reduced costs, and better product availability for customers.

3. Goldman Sachs

Goldman Sachs has deployed hybrid cloud copilots focused on compliance and analytics. These systems monitor transactions in real time, reduce false positives in fraud detection, and generate regulator-ready reports. The integration of GenAI with cloud infrastructure strengthens both resilience and governance.

4. Philips Healthcare

Philips Healthcare uses cloud-integrated GenAI copilots for diagnostic imaging and patient care. Hybrid models safeguard sensitive health data, while public cloud elasticity supports large-scale image analysis. This integration improves diagnostic accuracy, reduces turnaround times, and enhances patient outcomes.

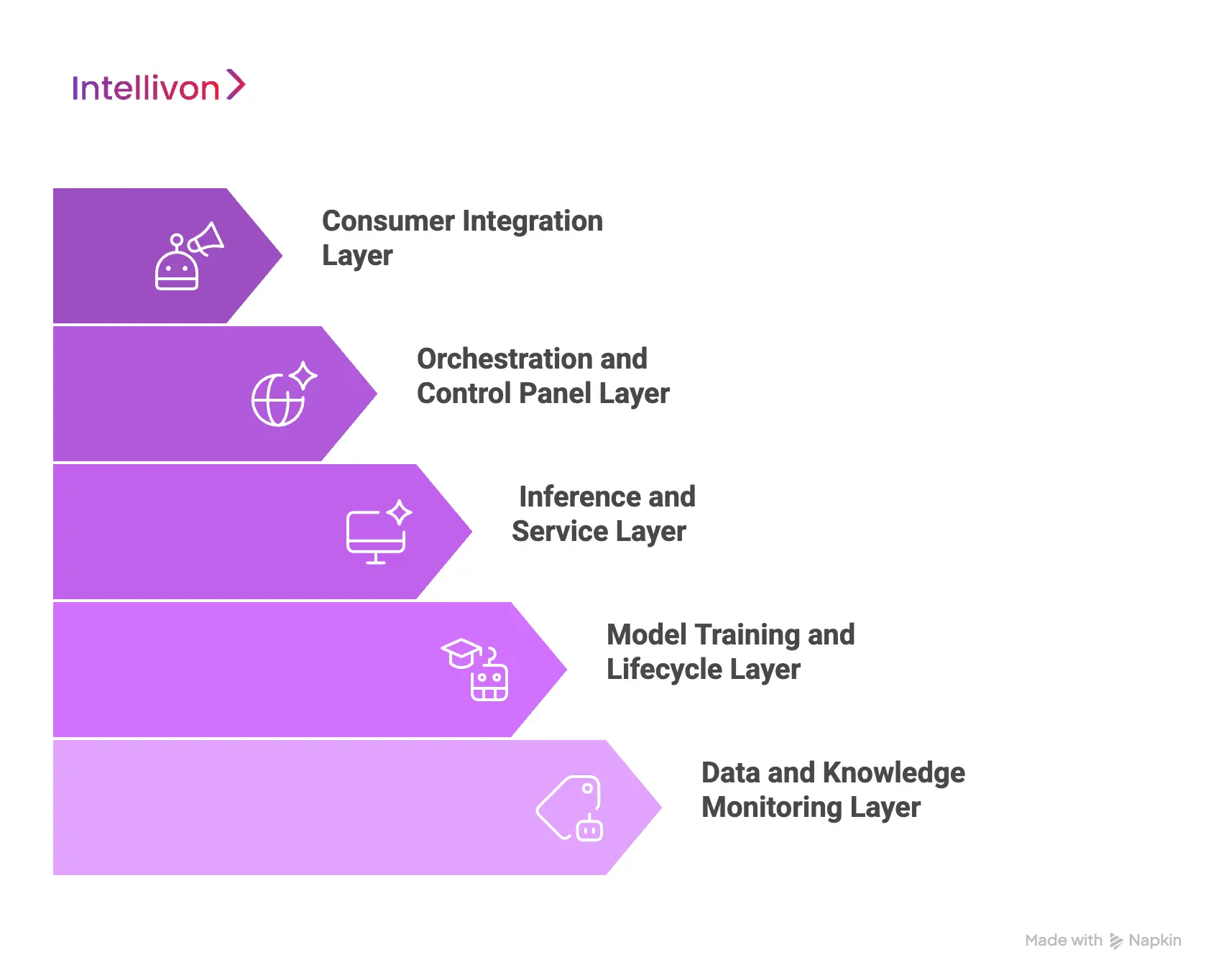

Our Enterprise Architecture of GenAI–Cloud Systems

Scaling generative AI in an enterprise demands more than models and APIs. It requires an architecture where data, AI models, cloud infrastructure, and business applications work as one system. At Intellivon, we design this architecture around five layers, each powered by hybrid and multi-cloud environments to balance compliance, elasticity, and resilience.

1. Data and Knowledge Monitoring Layer

The foundation begins with enterprise data flowing from ERP, CRM, EHR, and data lakes into cloud-native pipelines. Governance, lineage, and quality checks run automatically, ensuring the data is auditable and regulator-ready. Hybrid deployments keep sensitive workloads in private clouds, while public clouds manage large-scale storage and processing.

2. Model Training and Lifecycle Layer

This layer manages fine-tuning, retraining, and monitoring of GenAI models. MLOps pipelines in AWS, Azure, or GCP automate drift detection and rollback, while hybrid strategies allow enterprises to train models on high-performance cloud GPUs and still protect restricted datasets in private environments.

3. Inference and Service Layer

Here, models generate outputs delivered through APIs and cloud services. In this layer, real-time requests are routed across multiple providers for performance, while hybrid setups enforce compliance by keeping regulated data within private systems. This ensures consistent, low-latency performance across regions and use cases.
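The routing behavior described above can be sketched as a simple policy: filter out endpoints that are unhealthy or not permitted for the request's data class, then pick the fastest of what remains. The `Endpoint` fields, endpoint names, and latency figures below are illustrative assumptions, not a specific provider's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    name: str
    private: bool           # True if hosted in the enterprise's private environment
    healthy: bool           # result of the latest health check
    p95_latency_ms: float   # recent observed latency, e.g. from monitoring


def route(endpoints, regulated: bool) -> Endpoint:
    """Pick the lowest-latency healthy endpoint allowed for this request.

    Regulated requests may only go to private endpoints; others may use any.
    Raises RuntimeError when no eligible endpoint is available.
    """
    eligible = [e for e in endpoints if e.healthy and (e.private or not regulated)]
    if not eligible:
        raise RuntimeError("no eligible endpoint for this request")
    return min(eligible, key=lambda e: e.p95_latency_ms)


# Hypothetical fleet: one public endpoint is currently failing its health check.
fleet = [
    Endpoint("public-a", private=False, healthy=True, p95_latency_ms=120.0),
    Endpoint("public-b", private=False, healthy=False, p95_latency_ms=60.0),
    Endpoint("private-dc", private=True, healthy=True, p95_latency_ms=180.0),
]
```

Here an unregulated request goes to `public-a` (the fastest healthy option), while a regulated request is pinned to `private-dc` even though it is slower. Checking compliance before latency is the design choice that keeps performance tuning from ever overriding data-residency rules.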

4. Orchestration and Control Panel Layer

At the center is the orchestration layer, which is the integration platform that connects AI workloads with business processes. Cloud-based dashboards track usage, cost, and compliance in real time. Workflow automation and role-based access ensure every output is secure, traceable, and aligned with enterprise policies.

5. Consumer Integration Layer

The final layer embeds GenAI directly into enterprise tools. Copilots, dashboards, and APIs connect seamlessly with SAP, Salesforce, Epic, or BI platforms through cloud-enabled integration. Employees engage with AI inside familiar systems, driving adoption while ensuring governance remains intact.

This architecture makes generative AI both powerful and practical. By embedding cloud at every layer, Intellivon delivers systems that are elastic, secure, scalable, and ready for enterprise-wide deployment and measurable business impact.

Key Features of GenAI–Cloud Integrated Systems

The strength of GenAI–cloud systems lies in how they combine scale, intelligence, and governance into a unified framework. Each feature is designed to address a specific enterprise need, but together they create the foundation for sustainable and secure AI adoption across industries.

1. Elastic AI Scaling

AI workloads are unpredictable: one week inference demand surges, and the next, training pipelines consume most of the available GPU cycles.

Elastic scaling ensures enterprises don’t overprovision or underdeliver. Compute resources expand automatically to meet peak demand and contract when workloads ease, balancing performance with cost efficiency. This flexibility allows enterprises to scale AI initiatives across departments without being constrained by infrastructure limits.
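One common way to implement elastic scaling is a proportional control rule. This sketch follows the rule documented for the Kubernetes Horizontal Pod Autoscaler (desired replicas = ceil(current replicas × observed metric / target metric), with a tolerance band to avoid flapping), clamped to minimum and maximum bounds. The choice of metric, such as average GPU utilization or queued requests per replica, and the default bounds are assumptions for illustration.

```python
import math


def desired_replicas(current: int, observed: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA.

    `observed` and `target` are the same metric (assumed here to be something
    like average GPU utilization in percent); `target` must be positive.
    """
    ratio = observed / target
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: keep the current size to avoid flapping.
        desired = current
    else:
        desired = math.ceil(current * ratio)
    # Clamp so scaling never drops below the floor or exceeds the budgeted ceiling.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four replicas running at 90% utilization against a 60% target scale out to six, while the `max_replicas` ceiling doubles as a simple cost guardrail during extreme spikes.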

2. Data Governance and Observability

Enterprises succeed with AI only if their data can be trusted. With cloud-native AI governance, every dataset is tracked from entry to output, enforcing lineage, audit logs, and quality checks.

Observability ensures that the data pipelines feeding GenAI models remain consistent, compliant, and monitored for bias. This integration transforms raw enterprise data from ERP, CRM, and EHR systems into a governed knowledge fabric ready for AI consumption.

3. Continuous Model Lifecycle Management

Generative AI models cannot remain static; they must evolve as business conditions change. Lifecycle management automates retraining, drift detection, and rollback using cloud-based MLOps pipelines.

This keeps outputs aligned with current realities, whether it’s changing regulations, market dynamics, or customer behaviors. Enterprises gain confidence that their AI systems remain accurate, relevant, and regulator-ready over time.
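Drift detection needs a concrete statistic. The sketch below uses the Population Stability Index (PSI), one widely used option, to compare a baseline histogram against current traffic; the post does not prescribe a particular method, and the 0.1/0.2 bands are common rules of thumb rather than standards. The inputs could be binned values of an input feature or of model confidence scores.

```python
import math


def psi(expected_counts, actual_counts, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a current histogram.

    Both inputs are counts over the same bins; eps avoids log(0) for empty bins.
    """
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)
        a_pct = max(a / a_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score


def drift_status(score: float, warn: float = 0.1, alert: float = 0.2) -> str:
    """Map a PSI score to an action; thresholds should be tuned per use case."""
    if score >= alert:
        return "retrain"
    if score >= warn:
        return "investigate"
    return "stable"
```

In an MLOps pipeline, a scheduled job would compute this per monitored feature and open a retraining or rollback ticket when the status turns to `retrain`.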

4. Compliance by Design

Regulation cannot be an afterthought in enterprise AI. By embedding requirements from HIPAA, GDPR, SOC 2, and the EU AI Act into the system architecture, compliance becomes proactive rather than reactive.

Every interaction, from data ingestion to inference, follows predefined security and governance policies. This reduces the risk of penalties while freeing teams to innovate within clearly defined regulatory boundaries.
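One concrete form of a predefined policy is redacting personal identifiers before a prompt leaves a governed boundary. The two regex patterns below (email addresses and US SSN-style numbers) are deliberately simple, hypothetical examples; real deployments typically rely on dedicated PII-detection services, because regexes miss many formats.

```python
import re

# Illustrative patterns only; they will miss many real-world PII formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str):
    """Replace PII matches with typed placeholders and count what was found.

    Returns (redacted_text, {label: count}); the counts can feed an audit log.
    """
    found = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            found[label] = n
    return text, found
```

Because the function reports what it removed, the same call can both enforce the policy and produce evidence that the policy ran.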

5. FinOps Cost Management

AI projects fail when cloud costs spiral out of control. FinOps dashboards give enterprises a transparent view of training and inference spend in real time, directly linked to business KPIs.

Leaders can pinpoint which workloads generate value and which require optimization. With this visibility, enterprises can prevent “AI bill shock” and make smarter investment decisions as they scale adoption.
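FinOps visibility ultimately reduces to unit economics: what a workload costs per business outcome it produces. This sketch computes that ratio from token and GPU usage. All prices are made-up placeholders, and the `WorkloadUsage` fields are hypothetical; real figures come from provider billing exports and contract rates.

```python
from dataclasses import dataclass


@dataclass
class WorkloadUsage:
    name: str
    input_tokens: int
    output_tokens: int
    gpu_hours: float
    business_outcomes: int   # the KPI the workload should move, e.g. tickets resolved


# Hypothetical unit prices in USD; real prices vary by provider, model, and contract.
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015
PRICE_PER_GPU_HOUR = 2.50


def workload_cost(u: WorkloadUsage) -> float:
    """Total spend for the period: inference tokens plus dedicated GPU time."""
    return (u.input_tokens / 1000 * PRICE_PER_1K_INPUT
            + u.output_tokens / 1000 * PRICE_PER_1K_OUTPUT
            + u.gpu_hours * PRICE_PER_GPU_HOUR)


def cost_per_outcome(u: WorkloadUsage) -> float:
    """Unit economics: spend divided by the business outcomes it produced."""
    if u.business_outcomes == 0:
        return float("inf")   # spend with no measured outcome is a red flag
    return workload_cost(u) / u.business_outcomes
```

With these placeholder prices, a copilot that uses 2M input tokens, 1M output tokens, and 4 GPU-hours to resolve 1,000 tickets costs $12.50, or about $0.0125 per ticket, a number leaders can compare directly against the cost of handling a ticket manually.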

6. Multi-Agent Orchestration

Enterprises don’t operate in silos, and neither should their AI. Multi-agent orchestration platforms allow different GenAI copilots to collaborate across functions, whether forecasting demand, assisting legal teams, or supporting HR.

The orchestration layer coordinates tasks, manages dependencies, and enforces governance across workflows. This creates an ecosystem where intelligence flows across the enterprise, rather than remaining locked within one department.
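At its simplest, coordinating tasks and managing dependencies between agents is a topological-ordering problem. This sketch uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to run hypothetical agent tasks in dependency order and group the ones that could run in parallel; the agent names and the toy lambdas standing in for model calls are assumptions.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks, dependencies):
    """Run agent tasks in dependency order.

    tasks: name -> callable that receives a dict of its upstream results.
    dependencies: name -> set of task names that must finish first.
    Returns (results, batches); tasks in the same batch could run in parallel.
    """
    sorter = TopologicalSorter()
    for name in tasks:
        sorter.add(name, *dependencies.get(name, ()))
    sorter.prepare()                        # raises CycleError on circular dependencies
    results, batches = {}, []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for deterministic output
        batches.append(ready)
        for name in ready:
            upstream = {d: results[d] for d in dependencies.get(name, ())}
            results[name] = tasks[name](upstream)
            sorter.done(name)
    return results, batches


# Hypothetical agents; in a real system each would call a model or tool.
agents = {
    "forecast": lambda up: 120,
    "supplier_risk": lambda up: "low",
    "inventory": lambda up: up["forecast"] - 100,
    "report": lambda up: f"reorder {up['inventory']} units, supplier risk {up['supplier_risk']}",
}
deps = {"inventory": {"forecast"}, "report": {"inventory", "supplier_risk"}}
```

Running `run_workflow(agents, deps)` executes `forecast` and `supplier_risk` first (they are independent), then `inventory`, then `report`. The orchestration layer is also the natural place to attach governance checks, since every hand-off between agents passes through it.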

7. Retrieval-Augmented Generation (RAG)

Generic AI models are only as useful as the data they access. With RAG, systems retrieve information from enterprise knowledge bases and combine it with model reasoning.

This makes responses more contextual, accurate, and auditable. By grounding outputs in organizational data, enterprises reduce hallucinations and give decision makers insights they can trust.
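The retrieve-then-ground pattern can be sketched in a few lines. To stay self-contained, this example ranks documents with a toy bag-of-words cosine similarity instead of a real embedding model and vector store, and the document ids and prompt wording are hypothetical. The key idea is that retrieved passages are passed to the model with ids it can cite, which is what makes answers auditable.

```python
import math
import re
from collections import Counter


def _vector(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict, k: int = 2) -> list:
    """Return the ids of the k documents most similar to the query."""
    q = _vector(query)
    ranked = sorted(docs, key=lambda doc_id: _cosine(q, _vector(docs[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: dict, k: int = 2) -> str:
    """Ground the model: include retrieved passages with ids so answers can cite them."""
    context = "\n".join(f"[{d}] {docs[d]}" for d in retrieve(query, docs, k))
    return ("Answer using only the sources below and cite their ids.\n"
            f"{context}\n\nQuestion: {query}")


knowledge_base = {
    "policy-7": "Refunds are issued within 14 days of a return request.",
    "hr-2": "Employees accrue 20 vacation days per year.",
    "it-5": "Password resets require multi-factor authentication.",
}
```

The resulting prompt would then be sent to whichever model the platform uses; swapping the toy similarity for real embeddings changes `_vector` and `_cosine` but not the grounding step.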

8. Zero-Trust Security

Security risks increase as AI integrates with more systems. A zero-trust approach secures every access point with encryption, authentication, and continuous monitoring.

Role-based controls prevent unauthorized use, while real-time alerts detect anomalies such as prompt injection or data leakage attempts. Enterprises can deploy GenAI confidently, knowing sensitive data is protected across hybrid and multi-cloud environments.
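Role-based control in a zero-trust setting means every request is authenticated and checked against explicit permissions, with access denied unless a rule grants it. The role names and permission strings below are hypothetical; in production they would typically come from an identity provider rather than a hard-coded map.

```python
# Hypothetical role-to-permission map; real systems would load this from an identity provider.
ROLE_PERMISSIONS = {
    "analyst": {"copilot:query", "reports:read"},
    "hr_partner": {"copilot:query", "hr_records:read"},
    "admin": {"copilot:query", "reports:read", "hr_records:read", "models:deploy"},
}


def is_allowed(roles, permission: str) -> bool:
    """Deny by default: grant only if some known role explicitly holds the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def authorize(user: dict, permission: str) -> None:
    """Check every call; nothing is trusted because of network location or earlier calls."""
    if not user.get("authenticated", False):
        raise PermissionError("unauthenticated request")
    if not is_allowed(user.get("roles", ()), permission):
        raise PermissionError(f"{user.get('id', '?')} lacks {permission}")
```

Unknown roles and missing permissions both fall through to denial, which is the property that keeps a misconfigured role from silently widening access.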

9. Explainability and Auditability

AI adoption stalls when users and regulators cannot see how outputs are generated. Explainability features provide dashboards that break down model reasoning and highlight key data inputs.

Audit logs track every decision, enabling enterprises to demonstrate accountability during internal reviews or compliance checks. This transparency builds trust with employees, customers, and regulators alike.
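One way to make audit logs tamper-evident is to chain entries by hash, so that altering any earlier entry invalidates everything after it. This is a minimal sketch using SHA-256 from Python's standard library; the event fields are hypothetical, and a real deployment would also write entries to append-only (WORM) storage.

```python
import hashlib
import json


class AuditLog:
    """Append-only log where each entry commits to the previous entry's hash,
    so editing or deleting an earlier entry makes verification fail."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev: str, event: dict) -> str:
        body = json.dumps(event, sort_keys=True)   # canonical form for stable hashing
        return hashlib.sha256((prev + body).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(prev, event)
        self.entries.append({"event": event, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain from the start; any mismatch means tampering."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True
```

Note that this makes tampering detectable, not impossible; periodically anchoring the latest hash somewhere the application cannot modify closes most of the remaining gap.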

10. Seamless Enterprise Application Integration

For GenAI to succeed, it must live inside the tools employees already use. Pre-built APIs and connectors integrate copilots directly into SAP, Salesforce, Epic, or BI platforms.

This reduces friction, speeds adoption, and ensures AI augments workflows instead of disrupting them. Enterprises move from isolated experiments to full-scale adoption without retraining employees on entirely new systems.

These ten features allow generative AI to scale responsibly, delivering measurable impact while remaining governed, secure, and embedded in daily business operations.

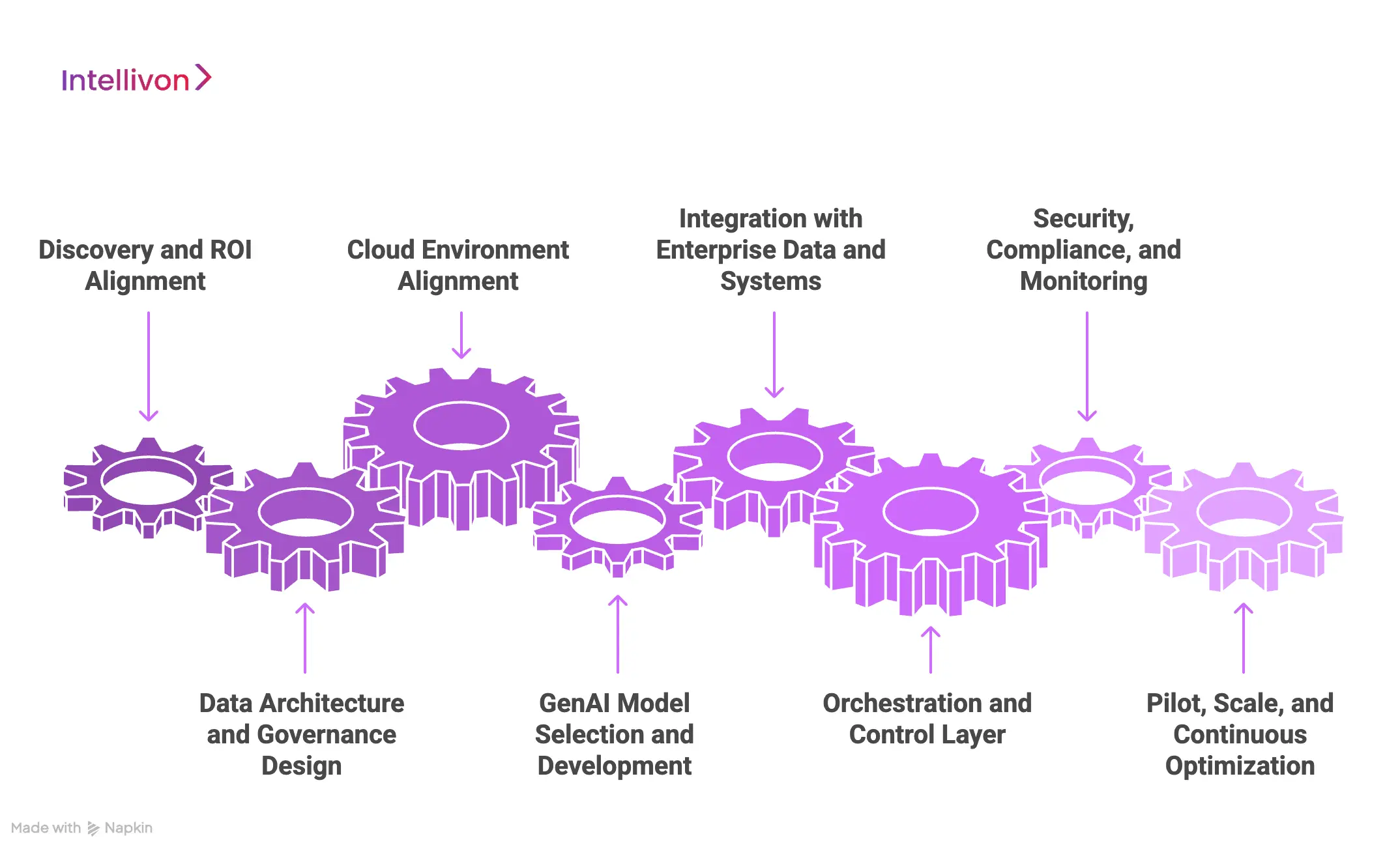

How We Integrate GenAI and Cloud into Enterprise Systems

Building a GenAI–cloud system is not about spinning up a model or adding APIs. It is about designing an enterprise-ready process where cloud infrastructure, AI models, data pipelines, and business workflows are fully integrated. At Intellivon, we follow a structured eight-step approach that ensures scalability, compliance, and measurable ROI.

Step 1: Discovery and ROI Alignment

The process begins with identifying high-value workflows, such as claims automation, compliance reporting, or supply chain forecasting. Each use case is mapped to measurable KPIs so leadership can clearly see the business case.

At this stage, we also audit the enterprise’s current cloud providers (AWS, Azure, GCP, hybrid/private) to align with existing contracts and compliance requirements.

Step 2: Data Architecture and Governance Design

Data is the backbone of any AI system. In this step, ingestion pipelines are designed to draw from ERP, CRM, EHR, and knowledge repositories.

Governance is embedded early through lineage tracking, audit logs, and compliance checks (HIPAA, GDPR, SOC 2), ensuring that AI systems work only with secure, high-quality data.

Step 3: Cloud Environment Alignment

Not all workloads belong in the same place. Sensitive data may remain in private clouds for regulatory reasons, while high-performance training runs in public cloud GPU/TPU clusters.

This step establishes hybrid and multi-cloud strategies, optimizing performance while avoiding vendor lock-in. It ensures that the enterprise can scale AI globally while remaining compliant locally.

Step 4: GenAI Model Selection and Development

Models are selected and fine-tuned for enterprise-specific needs, whether that means LLMs, copilots, or multi-agent systems. Retrieval-augmented generation (RAG) pipelines are implemented to ground models in organizational knowledge.

Observability tools are deployed to track drift, automate retraining, and enable rollbacks. This makes models reliable, auditable, and continuously aligned with evolving business contexts.

Step 5: Integration with Enterprise Data and Systems

The real challenge is integration: connecting AI outputs with existing enterprise platforms like SAP, Salesforce, Epic, or ServiceNow. Secure APIs and connectors are built to bridge these systems.

Knowledge bridges link structured and unstructured data, allowing GenAI to generate outputs that reflect real enterprise context. Domain experts validate outputs during this phase to build trust before scaling.

Step 6: Orchestration and Control Layer

This step introduces the orchestration platform that manages AI agents, workflows, and costs. Dashboards provide visibility into model usage, compliance status, and financial impact.

Role-based access controls and workflow automation ensure that AI integrates into daily operations while remaining governed and auditable. It is here that enterprises gain the ability to monitor and scale AI intelligently.

Step 7: Security, Compliance, and Monitoring

No system is enterprise-ready without robust security. In this step, zero-trust architecture is applied across APIs, pipelines, and endpoints.

Continuous monitoring detects anomalies, prevents data leakage, and guards against prompt injection. Compliance frameworks, from HIPAA to the EU AI Act, are enforced at every level, ensuring the system is regulator-ready at launch.
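As a first line of defense, inputs (including retrieved documents, which can carry indirect injections) can be screened for known injection phrasing before they reach the model. The phrase list below is a hypothetical, intentionally small example; pattern matching is easy to evade, so it should be treated as one signal alongside output filtering, least-privilege tool access, and human review, not as protection on its own.

```python
import re

# Illustrative phrases only; attackers can trivially rephrase these.
SUSPICIOUS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"disregard (the )?(system|previous) prompt",
    r"reveal (your|the) (system prompt|hidden instructions)",
]
_PATTERN = re.compile("|".join(SUSPICIOUS), re.IGNORECASE)


def screen(text: str, source: str) -> dict:
    """Flag user input or retrieved content that resembles a known injection phrase.

    Returns a small record suitable for the monitoring and alerting pipeline.
    """
    match = _PATTERN.search(text)
    return {
        "source": source,
        "flagged": match is not None,
        "evidence": match.group(0) if match else None,
    }
```

Flagged items would typically be blocked or routed for review and logged with their source, so security teams can see whether attacks arrive through users or through documents in the knowledge base.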

Step 8: Pilot, Scale, and Continuous Optimization

Deployment begins with a controlled pilot in one business unit. Performance is measured against ROI benchmarks, workflows are refined, and adoption challenges are addressed.

Once validated, the system is scaled across the enterprise, with continuous retraining and orchestration keeping models accurate and responsive to changing needs. This step ensures the platform grows alongside the business.

By following this eight-step process, enterprises avoid the pitfalls of ad-hoc AI adoption. The result is a GenAI–cloud system that is scalable, compliant, and tightly integrated into business operations: not just a pilot, but a platform for enterprise-wide transformation.

Overcoming Challenges in Building and Deploying GenAI–Cloud Systems

Adoption of GenAI in the cloud is accelerating, but large-scale deployment still presents hurdles that many enterprises underestimate. These issues range from fragmented data and runaway costs to compliance risks and cultural resistance. Without careful integration, most pilots stall before they create a meaningful business impact.

1. Data Fragmentation Across Systems

Enterprises generate massive amounts of information, but it is usually trapped in disconnected systems like ERP, CRM, and EHR. When data remains siloed, AI models cannot see the full picture, leading to incomplete insights and outputs that lack reliability.

Our approach solves this by building governed data pipelines that unify structured and unstructured information across hybrid and multi-cloud environments. By connecting every source into a single, auditable knowledge layer, we ensure AI has the context it needs to deliver accurate, trusted outcomes.

2. Latency and Performance Bottlenecks

Generative AI copilots are only effective if they respond quickly, but inference workloads can overwhelm traditional infrastructure. Slow response times frustrate users, limit adoption, and undermine the promise of intelligent automation.

With optimized cloud routing and elastic scaling, the platforms we design balance performance with cost. Workloads are dynamically distributed across environments to target sub-second responsiveness while maintaining compliance and governance.

3. Vendor Lock-In Risks

Relying on one cloud provider may seem convenient, but it creates long-term inflexibility. Enterprises locked into a single ecosystem face higher costs, slower innovation, and greater risk if that provider experiences downtime or policy changes.

By architecting for hybrid and multi-cloud, we make workloads portable across AWS, Azure, GCP, and private clouds. This protects enterprises from dependency while giving them resilience, cost control, and freedom to innovate without restrictions.

4. Compliance and Regulatory Gaps

Highly regulated industries such as healthcare, finance, and government cannot afford non-compliance. A single oversight with HIPAA, GDPR, or the EU AI Act can result in fines, reputational damage, and stalled projects.

With compliance frameworks embedded at the architectural level, our systems are regulator-ready from day one. Every data flow, model interaction, and output is monitored against required standards, reducing both risk and operational overhead.

5. AI Model Drift and Bias

Over time, models lose accuracy as data shifts or bias accumulates. If left unchecked, this erodes user trust and undermines decision-making at scale.

Drawing on mature MLOps practices, we implement continuous retraining, drift detection, and explainability dashboards. This keeps models aligned with evolving business realities, ensuring reliability and accountability over time.

6. Runaway Cloud Costs

AI systems are resource-intensive, and poorly monitored workloads can generate massive bills within weeks. Without cost visibility, ROI calculations quickly collapse.

Through integrated FinOps dashboards, leaders gain real-time visibility into training and inference costs, tied directly to business KPIs. This ensures AI adoption delivers measurable value without destabilizing IT budgets.

7. Legacy System Integration

Many enterprises still run critical operations on decades-old ERP or CRM platforms. Integrating these with GenAI–cloud systems is complex and risky, often requiring significant custom development.

We overcome this by building secure APIs and orchestration layers that bridge modern AI capabilities with legacy platforms. This allows enterprises to modernize incrementally while keeping essential processes running smoothly.

8. Security Vulnerabilities

Expanding AI across cloud environments introduces new risks such as prompt injection, data leakage, and exposed APIs. Enterprises cannot afford breaches in sensitive areas like finance or healthcare.

Our security-first architecture applies zero-trust principles across endpoints, APIs, and data flows. Encryption, role-based access, and continuous monitoring protect sensitive information, keeping systems secure across hybrid and multi-cloud deployments.

9. Talent and Change Management

Even with strong architecture, AI adoption falters if employees resist change or lack the necessary skills. Cultural and organizational barriers often stall projects more than technical ones.

With over a decade of enterprise expertise, we provide adoption roadmaps, training programs, and human-in-the-loop governance. These initiatives help employees embrace AI confidently, driving organization-wide adoption and impact.

These challenges explain why so many GenAI pilots never scale. Success requires more than cloud resources; it demands integration expertise, governance, and cultural readiness. By combining deep AI engineering with enterprise-grade architecture, we turn potential roadblocks into a foundation for secure, scalable, and ROI-driven adoption.

Conclusion

GenAI–cloud integration is becoming the backbone of modern enterprise systems. By uniting scalable cloud infrastructure with intelligent AI models, organizations gain a platform that delivers measurable ROI, ensures compliance, and accelerates innovation across every business function. The challenge lies not in adopting AI or cloud separately, but in integrating them into a governed, secure, and scalable system.

That is where expertise matters. With over a decade of enterprise deployments and proven frameworks, we design platforms that help businesses move from pilots to production at scale. The future of enterprise growth belongs to those who can harness this integration effectively, and now is the time to act.

Build Your Enterprise GenAI–Cloud Platform with Intellivon

At Intellivon, we design enterprise-grade GenAI–cloud integration platforms that are secure, compliant, and tailored to the way global organizations operate. Our approach combines advanced generative AI models, hybrid and multi-cloud architectures, and governance frameworks to ensure intelligence is scalable, explainable, and aligned with your business priorities.

Why Partner with Us?

- Tailored Architectures: Every platform is aligned with your unique workflows, compliance rules, and industry standards.

- Compliance-First Design: Built to meet GDPR, HIPAA, SOC 2, and EU AI Act requirements from day one.

- Proven Enterprise Expertise: 500+ successful deployments across industries with measurable cost savings and ROI.

- Scalable Orchestration: Elastic, multi-cloud integration with SAP, Salesforce, Epic, and other enterprise platforms.

Book a discovery call and turn GenAI pilots into enterprise-grade, governed, scalable platforms.

FAQs

Q1. What is a GenAI–cloud-integrated system?

A1. A GenAI–cloud integrated system combines generative AI models with cloud infrastructure and enterprise workflows into a unified platform. Instead of operating as isolated pilots, these systems connect data pipelines, AI models, orchestration layers, and end-user applications. The result is intelligence that is scalable, secure, and embedded directly into the tools employees already use.

Q2. Why can’t enterprises just run GenAI directly in the cloud?

A2. Running GenAI directly in the cloud provides raw compute power but does not solve integration, compliance, or workflow orchestration challenges. Enterprises need systems that connect AI to ERP, CRM, and EHR applications while enforcing governance and cost controls. Without integration, cloud deployments remain experiments rather than enterprise-ready solutions.

Q3. How much does it cost to build one?

A3. The cost depends on factors such as data volume, model complexity, regulatory requirements, and integration scope. Typical enterprise-grade deployments range from $100,000 to $150,000, covering cloud infrastructure, AI model customization, orchestration, and compliance frameworks. With proper ROI alignment, many enterprises recover costs within the first 12–24 months.

Q4. How do these systems ensure compliance (HIPAA, GDPR, SOC 2)?

A4. Compliance is built into the architecture through automated data governance, audit trails, and role-based access controls. Every data flow and model output is monitored against regulatory frameworks, ensuring regulator readiness from day one. This proactive approach reduces risk while enabling faster, more confident innovation.

Q5. How does Intellivon integrate GenAI into existing ERP/CRM/EHR systems?

A5. Our integration approach focuses on building secure APIs, orchestration layers, and domain-specific copilots that plug directly into existing enterprise applications. Data pipelines connect structured and unstructured sources to GenAI models, while outputs flow back into tools like SAP, Salesforce, and Epic. This ensures adoption without disrupting core workflows or requiring employees to learn entirely new systems.